Artificial Intelligence

ChatGPT Outperforms Physicians Answering Patient Questions

While AI cannot replace clinicians, AI can draft accurate, empathic responses.

Posted December 26, 2023 Reviewed by Abigail Fagan

Key points

- A new study found that ChatGPT provided high-quality and empathic responses to online patient questions.

- A team of clinicians judging physician and AI responses found ChatGPT responses were better 79% of the time.

- AI tools that draft responses or reduce workload may alleviate clinician burnout and compassion fatigue.

A new study published in JAMA Internal Medicine suggests that AI tools could help physicians by drafting high-quality and empathic written responses to patients. The study compared ChatGPT's responses with physicians' written responses on AskDocs, a Reddit forum (subreddit) where members post medical questions publicly and verified healthcare professionals submit answers.

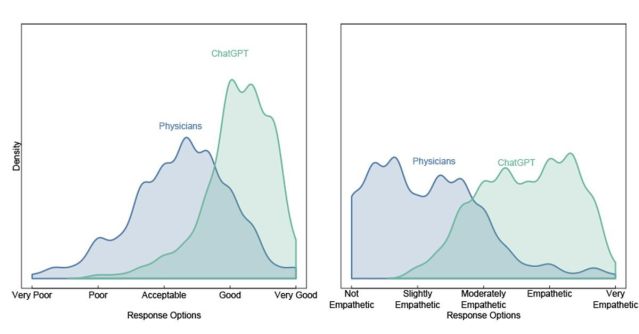

Researchers randomly sampled 195 exchanges in which a verified physician had responded to a public question on AskDocs, then posed each of those questions to ChatGPT. A panel of three independent licensed healthcare professionals then chose which response was better and rated both the quality of the information provided and the level of empathy or bedside manner demonstrated.

Without knowing whether a response was written by a physician or ChatGPT, the independent healthcare professionals preferred ChatGPT's response 79% of the time. ChatGPT responses were 3.6 times more likely than physician responses to be rated good or very good in quality and 9.8 times more likely to be rated empathetic or very empathetic. ChatGPT responses were also notably longer (211 words on average) than physician responses (52 words on average). The clinical team evaluating the responses found that ChatGPT responses were both nuanced and accurate.
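To make the "3.6 times" and "9.8 times" figures concrete: in the study's abstract they are ratios of proportions, comparing how often each source's responses reached the top rating categories. The short sketch below reproduces that arithmetic. The percentages come from the abstract of Ayers et al. (2023) rather than from this article, and the function name is purely illustrative.

```python
# Proportions (in percent) as reported in the abstract of Ayers et al. (2023).
# They are an outside input for illustration; this article does not state them.

def prevalence_ratio(chatbot_pct: float, physician_pct: float) -> float:
    """Return how many times more often chatbot responses reached a rating category."""
    return chatbot_pct / physician_pct

# Share of responses rated "good" or "very good" in quality.
quality_ratio = prevalence_ratio(78.5, 22.1)

# Share of responses rated "empathetic" or "very empathetic".
empathy_ratio = prevalence_ratio(45.1, 4.6)

print(f"quality: {quality_ratio:.1f}x, empathy: {empathy_ratio:.1f}x")
# prints "quality: 3.6x, empathy: 9.8x"
```

Read this way, the figures describe how often responses landed in the highest rating categories, not an average score that was 3.6 or 9.8 times larger.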

Here are four emerging trends relating to the future of AI augmentation for physician responses to patients:

1. Research on AI tools that are integrated into clinical settings will be helpful.

This study was not conducted in a clinical setting and does not suggest that AI can replace physicians, but it does highlight the potential for AI assistants like ChatGPT to draft helpful responses for clinicians answering patients. The next step will be to investigate how AI assistants perform in clinical settings rather than on a public social media forum, including when they are integrated into existing secure electronic medical platforms where clinicians field direct, private questions from patients. Questions in a private clinical setting may be more specific to individual cases and may be written differently than those submitted on public forums. Patients writing privately may assume that the doctor already knows their situation or may refer to something discussed previously in person. These types of questions may pose different challenges for ChatGPT.

2. AI augmentation has the potential to relieve physician and clinician burnout and compassion fatigue.

AI assistants do not actually feel empathy or any other emotion, so they do not experience compassion fatigue the way humans do. Physician burnout is increasing and has been linked to an overload of administrative tasks. According to the 2023 Medscape Physician Burnout and Depression Report, 53% of physicians say they are burned out, and nearly a quarter report symptoms of depression. The top contributing factor was administrative and bureaucratic tasks, with physicians reporting that they spend over nine hours per week outside of work on documentation. AI tools can ease some of this workload by providing draft responses to patient communication.

3. Training for clinicians that includes AI limitations, including bias, will be important.

AI models remain prone to bias, hallucinations, and occasional miscalculations, so physician and clinical oversight is still essential: patient health is at stake in medical communications. Clinicians should receive training and education on these AI limitations so that they do not send out AI-generated draft responses without reviewing them.

4. The long-term impact of AI tools on clinician judgment, decision making, and interpersonal interactions is currently unknown.

The potential effects of reliance on AI tools are unknown. What are the secondary effects if clinicians come to rely on these tools to generate responses to patients? Will the bias that exists in AI training data influence physician behavior, decision making, or interpersonal interactions? The answers to these questions are uncertain and will be part of discovering how AI may change the physician-patient relationship.

Marlynn Wei, MD, PLLC © Copyright 2023. All Rights Reserved.

References

Ayers JW, Poliak A, Dredze M, et al. Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum. JAMA Intern Med. 2023;183(6):589–596. doi:10.1001/jamainternmed.2023.1838