
Stretching the Expertise Bubble

Be wary of all-knowing experts.

Posted May 25, 2020

The COVID-19 pandemic continues to provide no shortage of conflicting information, misinformation, and conspiracy theories that can quickly make your head hurt. There is also no shortage of experts willing to pontificate on any number of pandemic-related issues, from predicting likely mortality to explaining people’s behavioral choices.

Two recent articles demonstrate the willingness of experts to attempt to explain human behavior during the pandemic. The first focuses on differences in people’s willingness to self-sacrifice, and the second seeks to explain why people make bad decisions about COVID-19. In both cases, the authors claim expertise in their respective areas, applying their knowledge to explain people’s decisions and resulting behavior.

I’m not here to argue whether the authors are actual experts, as I have no evidence to suggest they aren’t. However, in both cases, the articles point toward an issue worthy of consideration when it comes to determining the experts’ credibility – the expertise bubble.

The Expertise Bubble

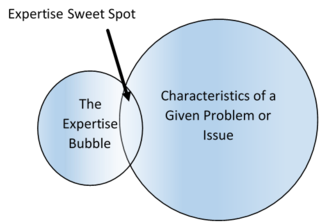

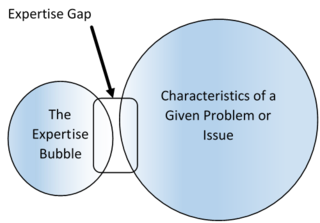

All experts’ knowledge and experience only really apply directly to a finite number of subject matters and/or in specific situations or settings – an expertise bubble. When experts offer insights about an issue that their expertise bubble overlaps, they tend to produce high-quality insights – the expertise sweet spot. When their expertise bubble doesn’t truly overlap the issue, the quality of those insights may be much more variable due to their expertise gap [1]. The bigger the gap, the more likely they are to produce lower-quality insights, especially if the expert is unaware that a gap exists.

Consider, for example, someone very close to you, perhaps a spouse. You may have an extraordinary amount of experience with that individual in his/her role as a spouse, to the point where you might very well be considered an expert on that person’s behavior (i.e., your spouse) within that context (i.e., the spousal role). If you have limited experience with your spouse in the context of other roles, such as his/her role as an employee, then your expertise bubble may not extend as well (if at all) to your spouse’s behavior in those roles. Thus, if you were asked to offer insights about your spouse, those insights would be much higher quality when focusing on the spousal role (where your expertise bubble overlaps), but they would be much less likely to extend to that person’s work role (where you have an expertise gap) [2].

We can visualize the expertise bubble as a part of a Venn diagram. It forms the constraints around any one person’s legitimate expertise. Of course, there is quite a large world of problems or issues operating all around us, and the set of problems and contexts in which someone’s expertise fully applies is quite small [3].

Anyone with expertise possesses an expertise sweet spot. When the subject matter domain and the context of application align, an individual’s expertise can be quite insightful. However, the less overlap there is, the less likely that expertise applies.

This does not mean that an academic researcher who studies a particular topic within a laboratory has no expertise to offer (as being an expert has more to do with knowledge of the broader available evidence base than it does with what that person specifically studies). What it does mean is that if the evidence base comes strictly from a laboratory, it may have limited applicability and, therefore, may offer limited insight [4].

This issue, though, is not limited only to laboratory research. Just because someone is an expert on stress in the nursing profession does not mean those same insights would apply to another field, such as accounting. The further someone tries to stretch the limits of his/her expertise, the less likely that expertise is to offer valid insights.

That brings us back to the issue of COVID-19. Obviously, it is an issue that has presented a host of new challenges. Many individuals with legitimate expertise in some area have seemingly scrambled to identify COVID-related topics to which they can apply their expertise. Some experts (e.g., virologists) may have subject matter knowledge, but it may not apply as well to this virus (based on this virus’ idiosyncratic effects). Other experts (e.g., psychologists) may have expertise about human decision-making but limited expertise about the ways in which it applies during a global pandemic. Because of its novelty, the current pandemic has produced a situation that not a single expertise bubble truly overlaps, resulting in many experts overstretching their expertise bubble.

The gap between various people’s expertise bubbles and the current situation differs widely. When that gap is larger, more stretching of the expertise bubble is required, resulting in potentially lower-quality insights. While some experts recognize this, tempering their assertions and claims when more stretching is required, many others fail to do so, making proclamations with far more certainty and confidence than they should.

Experts Are Not Know-It-Alls

There is a common fallacy to which people fall prey, especially in uncertain situations: appeal to authority. It is only a fallacy, though, when the claims being made by the expert are dubious or questionable (in other words, lack evidence to support them). Yet, all too often, people accept experts’ claims without questioning or considering, even in passing, the basis for those claims [5]. The more authority we perceive the expert to possess, the less likely we are to question the expert’s claims.

It is, of course, impossible to thoroughly research the claims of every expert out there. However, there are some heuristics we can use to help determine whether a bit of skepticism is warranted when we come across the claims made by experts.

- The expert makes claims that do not match his/her background and experience. When experts venture into topical areas farther removed from their prior experience, they are more likely to be extending their expertise bubble into questionable domains. This is not necessarily a problem, unless the expert is also demonstrating a high level of confidence in the claims s/he is making. In such cases, further validation of the claims may be required.

- The expert claims to have the answer, even though the issue has a high level of complexity. The more complex the issue or problem, the less likely any one explanation is completely valid. For example, when it comes to human behavior, very seldom is there one explanation or cause for that behavior (e.g., there isn’t one right way to manage stress, even though many experts would have us believe there is). In such cases, it is likely the expert is at least oversimplifying the issue (this was an issue present in the COVID-19 article mentioned earlier on bad decision-making).

- The expert relies heavily on extreme examples or best/worst-case scenarios. It is often easy to use such an approach to make a case (e.g., the imminent terrorist attack being used as grounds for the morality of torture). Yet, such examples are seldom applicable to most cases, nor do they represent the most likely outcome. Therefore, if the expert’s claims are based on the best- or worst-case scenario, we should probably be a little bit more skeptical of those claims.

- The expert uses evidence that is not necessarily applicable to a given context. This was the case in the self-sacrifice article mentioned earlier. The author specifically took her own laboratory research and used it to justify arguments she was making in a very different, applied context. It does not mean her claims were invalid, but it does mean we should stop and consider whether differences in those contexts might impact the validity of her claims.

- The expert makes claims that appear counterintuitive. While intuition has a bad reputation, thanks largely to laboratory studies on cognitive bias (in which the COVID-19 decision-making article was grounded), in reality, intuition often serves us well. We make a lot of everyday decisions based on intuition, and most of them work out in our favor (because we’ve learned from prior mistakes). If a claim smells fishy, it probably requires deeper investigation before we accept it as valid.

This is by no means an exhaustive list, and some of these may be more valuable in some situations than others (as it is impossible to come up with a list that is universally applicable). You can, of course, feel free to suggest other useful heuristics in the comments.

References

[1] This may occur because their subject matter expertise doesn’t exactly relate and/or the contexts in which their expertise applies are different from the current context.

[2] Of course, if you also work with your spouse, your insights may be much more accurate.

[3] It was impossible to construct the diagram to scale, as either the size of the expertise bubble would have to be infinitesimally small or the size of the problem or issue bubble would have to take up an entire neighborhood’s worth of screens.

[4] This is a recurring issue in laboratory studies that seek to generalize to more practical contexts.

[5] Except when we tend to have strong opinions that contradict the expert, in which case we may be motivated to look for holes in those claims.