The Psychology of Deepfakes

People can't reliably detect deepfakes but are overconfident in their abilities.

Posted December 2, 2021 Reviewed by Kaja Perina

Key points

- Experimental results show that people cannot reliably detect deepfakes.

- Raising awareness and financial incentives do not improve people's detection accuracy.

- People tend to mistake deepfakes for authentic videos (rather than the other way around).

- People overestimate their own deepfake detection abilities; that is, they are overconfident.

by Nils Köbis, Christopher Starke and Ivan Soraperra

Amaka, a Nigerian video producer, is recruited to make an undetectable deepfake of political actors. He is torn. Should he take the attractive financial reward for this forgery or stay true to his moral standards? Thanks to powerful computers and machine-learning techniques that AI experts call “hyper-generative adversarial networks,” deepfakes are no longer detectable with the naked eye. Identifying them requires complex verification algorithms and massive computing power. Yet Amaka has a way to trick the detection software and is pondering whether to abuse these tools for corrupt purposes.

The story above is a potential scenario that Chen Qiufan and Kai-Fu Lee sketch in their book AI 2041. Through a mix of fiction and nonfiction, the book depicts a future in which generating and detecting deepfakes has become a global cat-and-mouse game. Living in a world in which you can’t trust your eyes and ears when watching videos on the internet is no longer science fiction.

Generating deepfakes (hyper-realistic imitations of audio-visual content) has become increasingly easy and cheap. As a result, the number of deepfakes is skyrocketing: in just one year, from 2019 to 2020, it grew from 14,678 to 100 million videos, a roughly 6,800-fold increase.

In many instances, deepfakes are made purely for entertainment, such as comedian Jordan Peele’s famous imitation of Barack Obama, the DeepTomCruise TikTok account that went viral, or the use of deepfake technology in the movie "The Irishman" to de-age Robert De Niro.

But deepfakes have a dark side. They have been used to sully journalists’ reputations by placing them in porn videos, they have become a popular tool for political disinformation campaigns (Dobber et al., 2021), and they enable entirely new forms of interpersonal deception. That is why policy-makers and researchers are warning the public about the potential dangers of deepfakes (Chesney & Citron, 2019; Köbis, Bonnefon, & Rahwan, 2021).

Indeed, many people seem unprepared for these dangers. With the help of deepfake technology, scammers can take phishing attacks to new heights. In one instance, criminals defrauded a company of more than $240,000 by imitating the voice of its CEO.

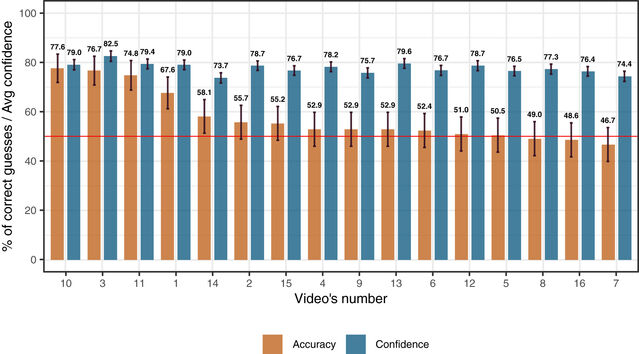

If you’re thinking, “I would never fall for a deepfake,” you are not alone. In a recent open-access study by researchers from the Center for Humans and Machines (Max Planck Institute for Human Development) and CREED (University of Amsterdam), the vast majority of respondents showed high confidence in their ability to detect deepfakes (see blue bars, Figure 1). However, these confidence levels significantly exceeded their actual detection abilities (see orange bars, Figure 1). When left to their own devices, that is, their eyes and ears, people could not reliably detect deepfakes. In fact, even when financially incentivized to gauge their detection abilities accurately, they remained overconfident (Köbis, Doležalová & Soraperra, 2021).

What are the societal implications if people can no longer reliably distinguish between fake and real content on the internet? Research has shown that deepfakes can actively change people’s emotions, attitudes, and behavior (Vaccari & Chadwick, 2020). As a new tool of misinformation, deepfakes can thereby undermine democracy (Aral, 2020). This raises the question: how can we increase people’s deepfake detection accuracy so they do not fall for such fakes?

A previous blog post on Psychology Today suggested that raising awareness of the importance of detecting deepfakes and spurring more critical thinking might help. The same study that revealed overconfidence in detection abilities also put this intervention to the test. The results indicate that raising people’s awareness of the negative consequences of deepfakes does not increase their detection accuracy compared to a control group whose awareness was not raised. This suggests that being fooled by deepfakes may be less a matter of motivation than of ability.

This arguably sets deepfakes apart from other forms of misinformation, such as fake news articles. We therefore call for more research investigating whether deepfakes pose entirely new challenges to democratic societies or are merely “old wine in new bottles.” Will the interventions now being used to reduce the spread of fake news (Pennycook et al., 2021) also prove effective against deepfakes? Or do we need new interventions to help us rein in malevolent uses of this fast-growing form of deception?

About the Authors:

Nils Köbis is a research scientist at the Center for Humans and Machines, Max Planck Institute for Human Development.

Christopher Starke is a Post-Doc at the Amsterdam School of Communication Research and the interdisciplinary research cluster Humane AI at the University of Amsterdam.

Ivan Soraperra is a Post-Doc at the Center for Experimental Economics and Political Decision Making (CREED), University of Amsterdam.

References

Aral, S. (2020). The Hype Machine: How Social Media Disrupts Our Elections, Our Economy, and Our Health--and How We Must Adapt. Crown.

Chesney, B., & Citron, D. (2019). Deep fakes: a looming challenge for privacy, democracy, and national security. California Law Review, 107, 1753.

Dobber, T., Metoui, N., Trilling, D., Helberger, N., & de Vreese, C. (2021). Do (Microtargeted) Deepfakes Have Real Effects on Political Attitudes? The International Journal of Press/Politics, 26(1), 69–91.

Köbis, N. C., Bonnefon, J.-F., & Rahwan, I. (2021). Bad machines corrupt good morals. Nature Human Behaviour, 5(6), 679–685.

Köbis, N. C., Doležalová, B., & Soraperra, I. (2021). Fooled twice: People cannot detect deepfakes but think they can. iScience, 24(11). https://doi.org/10.1016/j.isci.2021.103364 (Target article)

Pennycook, G., Epstein, Z., Mosleh, M., Arechar, A. A., Eckles, D., & Rand, D. G. (2021). Shifting attention to accuracy can reduce misinformation online. Nature, 592(7855), 590–595.

Vaccari, C., & Chadwick, A. (2020). Deepfakes and Disinformation: Exploring the Impact of Synthetic Political Video on Deception, Uncertainty, and Trust in News. Social Media + Society, 6(1), 2056305120903408.