
Are AI Chatbots the Therapists of the Future?

New research suggests chatbots can help deliver certain types of therapy.

Posted January 17, 2023 | Reviewed by Vanessa Lancaster

Key points

- AI chatbots are promising for skills-based coaching and cognitive behavioral therapy, but delivering other forms of treatment could be harder.

- Chatbot therapists could help provide therapy that is scalable, widely accessible, convenient, and affordable, but they would have limitations.

- The effectiveness of certain types of therapy may rely on a human element that chatbots are unable to provide.

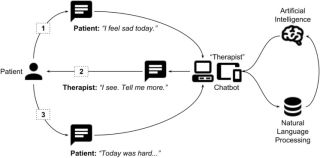

Could artificial intelligence chatbots be the therapists of the future? ChatGPT, a conversational text-generating chatbot built on a refined version of GPT-3, OpenAI's powerful third-generation language model, has reignited this decades-old question.

GPT-3, one of several large language models, was introduced in 2020; its predecessors, GPT and GPT-2, appeared in 2018 and 2019. The technology garnered wider attention when OpenAI released ChatGPT as a free research preview in November 2022. GPT stands for generative pre-trained transformer, a type of neural network machine learning model. GPT-3 was trained on massive amounts of text from the internet, and ChatGPT was further refined with training from human reviewers.

GPT-3 has been used in many ways across industries: to write a play produced in the U.K., create a text-based adventure game, build apps for non-coders, and generate phishing emails as part of a study on harmful use cases. In 2021, OpenAI shut down a game developer's chatbot project after one user used it to emulate his late fiancée.

AI chatbots are promising for certain types of therapy that are more structured, concrete, and skills-based (e.g., cognitive behavioral therapy, dialectical behavioral therapy, or health coaching).

Research has shown that chatbots can teach people skills and coach them to stop smoking, eat healthier, and exercise more. One chatbot, SlimMe, an AI with artificial empathy, helped people lose weight. During the COVID-19 pandemic, the World Health Organization developed virtual humans and chatbots to help people stop smoking. Many companies have created support chatbots, including Woebot, a chatbot based on cognitive behavioral therapy that launched in 2017. Other chatbots deliver guided meditation or monitor users' moods.

However, certain types of therapy, like psychodynamic, relational, or humanistic therapy, could be trickier to deliver via chatbots because it is unclear how effective these therapies can be without the human element.

Potential Advantages to Chatbot Therapists

- Scalability, accessibility, affordability. Virtual chatbot therapy, if done effectively, could help bring mental health services to more people, on their own time and in their own homes.

- People can be less self-conscious and more forthcoming to a chatbot. Some studies found that people can feel more comfortable disclosing private or embarrassing information to chatbots.

- Standardized, uniform, and trackable delivery of care. Chatbots can offer a standardized and more predictable set of responses, and these interactions can be reviewed and analyzed later.

- Multiple modalities. Chatbots can be trained to offer many therapy styles beyond what an individual human therapist might offer. An algorithm could help determine what type of therapy would work best for each case.

- Personalization of therapy. ChatGPT generates conversational text in response to text prompts and can remember earlier prompts within a conversation, which could allow it to act as a personalized therapist.

- Access to broad psychoeducation resources. Chatbots could draw from and connect clients to large-scale digitally available resources, including websites, books, or online tools.

- Augmentation or collaboration with human therapists. Chatbots could augment therapy in real time by offering therapists feedback or suggestions, such as ways to improve empathy.

Potential Limitations and Challenges of Chatbot Therapists

Chatbot therapists face barriers that are specific to human-AI interaction.

- Authenticity and empathy. What are human attitudes toward chatbots, and will they be a barrier to healing? Will people miss the human connection in therapy? Even if chatbots could offer empathic language and the right words, this alone may not suffice. Research has shown that people prefer human-human interaction in certain emotional situations, such as venting or expressing frustration or anger. A 2021 study found that whether people preferred a human or a chatbot depended on how they felt: when people were angry, they were less satisfied with a chatbot.

People may not feel as understood or heard when they know there is no actual human on the other end of the conversation. The "active ingredient" of therapy could rely on the human-to-human connection: a human bearing witness to one's difficulties or suffering. Replacing therapists with AI will likely not work in all situations.

- Timing and nuanced interactions. Many therapy styles require features beyond empathy, including a well-timed balance of challenge and support. Chatbots are limited to text responses and cannot communicate through eye contact and body language. This may be possible with AI-powered "virtual human" or "human avatar" therapists, but it is unknown whether virtual humans can provide the same level of comfort and trust.

- Difficulty with accountability and retention rates. People may be more likely to show up and be accountable to human therapists than to chatbots. User engagement is a major challenge for mental health apps. Estimates show that only 4 percent of users who download a mental health app continue using it after 15 days, and only 3 percent continue after 30 days. Will people show up regularly to their chatbot therapist?

- Complex, high-risk situations. Suicide assessment and crisis management would benefit from human judgment and oversight. In high-risk cases, AI augmentation with human oversight is safer than replacement by AI. There are open ethical and legal questions regarding the liability of faulty AI: who will be responsible if a chatbot therapist fails to assess or manage an urgent crisis appropriately or provides wrong guidance? Will the AI be trained to flag and alert professionals to situations with a potential imminent risk of harm to self or others? Does relying on a chatbot therapist delay or deter people from seeking the help they need?

- Increased need for user data security, privacy, transparency, and informed consent. Mental health data requires a high level of protection and confidentiality. Many mental health apps are not forthcoming about what happens to user data, including when data is used for research. Transparency, security, and clear informed consent will be key features of any chatbot platform.

- Potential hidden bias. It is important to be vigilant about underlying biases in the training data of these chatbots and to find ways to mitigate them.

As human-AI interaction becomes part of daily life, further research is needed to determine whether chatbot therapists can effectively provide therapy beyond behavioral coaching. Studies comparing human and chatbot therapists across various therapy styles will shed light on the potential advantages and limitations of chatbot therapists. At this time, AI may help improve or augment therapy but likely not replace it.

Marlynn Wei, MD, PLLC Copyright © 2023. All rights reserved.

