Artificial Intelligence

Instability Phenomenon Discovered in AI Image Reconstruction

Study reveals risk of using deep learning for medical image reconstruction.

Posted May 14, 2020

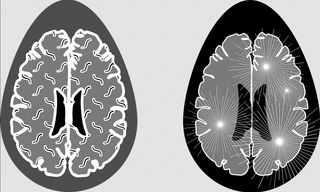

Artificial intelligence (AI) has made considerable strides in recent years, mainly due to the pattern recognition capabilities of deep learning, which is used for computer vision, speech recognition, virtual assistants, autonomous vehicles, handwriting recognition, machine translation, text generation, and more. However, a troubling weak spot is emerging when it comes to using AI and deep learning for medical image reconstruction. On Monday, a new joint study led by the University of Cambridge and Simon Fraser University reported an instability phenomenon in machine learning-based image reconstruction that could lead to inaccurate medical diagnoses.

Led by Dr. Anders Hansen at Cambridge's Department of Applied Mathematics and Theoretical Physics and Dr. Ben Adcock at Simon Fraser University, the international team of scientists, which also included Clarice Poon, Francesco Renna, and Vegard Antun, published their study on May 11, 2020, in the Proceedings of the National Academy of Sciences of the United States of America (PNAS).

Deep learning can be used to enhance the quality of images such as MRI scans by converting a low-resolution image into a higher-resolution one. Deep learning is a form of machine learning that uses deep neural networks, which have many layers of processing and learn from training data. In contrast, existing methods of image reconstruction apply mathematical theory without learning from prior data.

According to the researchers, AI deep learning has achieved performance that surpasses humans in the areas of computer vision and classification of images; however, the methods are “highly unstable.”

“An image of a cat may be classified correctly; however, a tiny change, invisible to the human eye, may cause the algorithm to change its classification label from cat to fire truck or another label far from the original,” wrote the researchers in the study.
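The effect the researchers describe can be illustrated with a deliberately simple toy model. The sketch below is not from the study: it assumes a hypothetical linear classifier with random weights `w` and a random "image" `x`, and the helper name `adversarial_flip` is made up for illustration. It shows how a very small, uniform change to every pixel can be enough to flip a classification label when the image has many pixels.

```python
import numpy as np

def adversarial_flip(w, x, margin=0.01):
    """Return a perturbed copy of x that flips the sign of w @ x,
    together with the per-pixel step size that was needed."""
    score = float(w @ x)
    # Moving every pixel by eps against the score shifts it by eps * ||w||_1,
    # so this eps is just large enough to push the score past zero.
    eps = abs(score) / np.abs(w).sum() * (1 + margin)
    x_adv = x - np.sign(score) * eps * np.sign(w)
    return x_adv, eps

rng = np.random.default_rng(0)
n = 10_000                       # number of pixels (hypothetical image size)
w = rng.normal(size=n)           # hypothetical classifier weights
x = rng.uniform(0, 1, size=n)    # hypothetical image, pixel values in [0, 1]

x_adv, eps = adversarial_flip(w, x)
print("original label: ", int(w @ x > 0))
print("perturbed label:", int(w @ x_adv > 0))
print("largest per-pixel change:", eps)
```

Real deep networks are nonlinear, so this is only an analogy, but the same accumulation of many tiny per-pixel changes is one common explanation for why such flips are possible.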

Whether this instability also applies when using deep learning for image reconstruction was the focus of this study. The researchers tested six different deep learning neural networks (AUTOMAP, DAGAN, Deep MRI, Ell 50, Med 50, and MRI-VN) in order to detect the instability phenomenon in medical imaging that includes CT, NMR, and MRI scans. AUTOMAP, DAGAN, Deep MRI, and MRI-VN are neural networks for MRI scans. Ell 50 is a neural network used for any Radon transform-based inverse problem, including CT scans.

For the tests, the researchers deliberately perturbed images to see how gradually increasing perturbations result in image errors or artifacts in the various neural networks. They discovered that small perturbations result in a “myriad of different artifacts” that may be difficult to detect as nonphysical. This calls into question what types of artifacts are produced by trained neural networks, and how the instability relates to the training data, subsampling patterns, and overall network architecture. The instabilities ranged from subtle distortions and blurring to the complete loss of details. The researchers also found that a small structural change may be lost in the reconstructed image, and that tiny perturbations in the image and sampling domain may produce acute artifacts in the reconstructed image.
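One way to picture such a stability test is to compare how much a reconstruction changes with how much its input was perturbed. The sketch below is a simplified illustration, not the study's procedure: it uses random perturbations, and the function name `amplification` and the two small linear "reconstruction" maps are hypothetical stand-ins for a real reconstruction method.

```python
import numpy as np

def amplification(reconstruct, y, eps=1e-3, trials=200, seed=0):
    """Largest observed ratio ||R(y + e) - R(y)|| / ||e||
    over random perturbations e with ||e|| = eps."""
    rng = np.random.default_rng(seed)
    base = reconstruct(y)
    worst = 0.0
    for _ in range(trials):
        e = rng.normal(size=y.shape)
        e *= eps / np.linalg.norm(e)      # rescale to the chosen size
        change = np.linalg.norm(reconstruct(y + e) - base)
        worst = max(worst, change / eps)
    return worst

# Two hypothetical reconstruction maps acting on two measurements.
stable = np.eye(2)                  # passes perturbations through unchanged
unstable = np.diag([1.0, 1000.0])   # magnifies one direction a thousandfold

y = np.array([1.0, 1.0])            # hypothetical measurements
stable_ratio = amplification(lambda v: stable @ v, y)
unstable_ratio = amplification(lambda v: unstable @ v, y)
print("stable map:  ", stable_ratio)
print("unstable map:", unstable_ratio)
```

A ratio near 1 means a small input change stays small in the output. A large ratio means a perturbation that is negligible in the measurements can become a visible artifact in the reconstruction.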

Interestingly, the researchers also found that introducing more samples to neural networks may result in worse performance, the opposite of what one would reasonably expect. Some convolutional neural networks allow the amount of sampling to be changed. In this study, that feature was available in all of the neural networks except AUTOMAP. When the number of samples increased in the DAGAN, Ell 50, and Med 50 neural networks, the quality of the image reconstruction decreased. When more samples were introduced in the MRI-VN neural network, the quality stagnated but did not decay. Only the Deep MRI neural network's quality was similar to that of state-of-the-art non-machine learning methods as the number of samples increased.
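For comparison, the expected behavior can be seen in a basic non-learning reconstruction. The sketch below is an assumption-laden toy, not one of the methods evaluated in the study: it reconstructs a hypothetical one-dimensional signal from only its lowest-frequency Fourier coefficients, and the function name `lowpass_reconstruction_error` is made up for illustration. As more coefficients (samples) are kept, the error can only shrink or stay the same.

```python
import numpy as np

def lowpass_reconstruction_error(signal, k):
    """Relative error after keeping only the k lowest-frequency
    Fourier coefficients of a real-valued signal."""
    coeffs = np.fft.rfft(signal)
    kept = np.zeros_like(coeffs)
    kept[:k] = coeffs[:k]                      # discard the unsampled frequencies
    recon = np.fft.irfft(kept, n=signal.size)
    return np.linalg.norm(recon - signal) / np.linalg.norm(signal)

n = 256
t = np.arange(n) / n
# Hypothetical piecewise-constant test signal with two sharp edges.
signal = (t > 0.3).astype(float) - 0.5 * (t > 0.7)

sample_counts = (8, 16, 32, 64, 129)           # 129 is every coefficient for n = 256
errors = [lowpass_reconstruction_error(signal, k) for k in sample_counts]
for k, err in zip(sample_counts, errors):
    print(k, err)
```

Against this baseline, a reconstruction whose quality drops as the number of samples rises is behaving in a way that a classical method of this kind does not.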

The researchers discovered that deep learning is often an unstable technique when used for image reconstruction, which may result in false positives and false negatives. By testing a variety of deep learning networks for different types of medical image reconstruction, the scientists showed that instabilities were common, and that the instability phenomenon will be a challenging and important problem to solve as health care becomes increasingly digitized.

Copyright © 2020 Cami Rosso All rights reserved.