Neuroscience Research Faulted for Widespread Inaccuracies

Selective reporting and inflated effect sizes mar even high-impact studies

Posted September 9, 2016

We’ve all seen the headlines: “This Is Your Brain on Politics”; “This Is Your Brain after a Breakup”; “Neural Correlate” for Religion, Greed, or Narcissism “Found.”

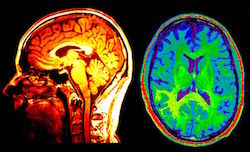

Much of the authority behind such enticing but dubious claims rests on fMRI scans of the brain (short for “functional magnetic resonance imaging”), which depict areas of the organ as receiving more oxygen due to heightened activity. Those images are then interpreted by software and again by researchers, often from painfully small sample sizes, as giving us credible insights into behavior X or emotion Y. The misleading newspaper headline “‘Hate Circuit’ Found in Brain” stemmed, for instance, from a 2008 study, “Neural Correlates of Hate,” which involved brain scans of a small sample of people shown photos of their exes, colleagues, and controversial politicians. According to PubMed, more than 40,000 scholarly articles published in the last twenty years draw assumptions and inferences about the brain from such scans. But how reliable are their conclusions? And how good is the software that reads and interprets them?

Scientists investigating the scans have long voiced concerns about the software’s internal assumptions, which can distort the conclusions that brain activity appears to present and so generate false positives. That risk was rather comically demonstrated by neuroscientists at Dartmouth College in 2009, as Justin Karter reports, when they put a dead Atlantic salmon in the machine and “showed it a series of photographs depicting human individuals in social situations.” The data produced by the fMRI made it seem as though “a dead salmon perceiving humans can tell their emotional state.” Yet with thousands of articles still appearing annually that draw sometimes-major inferences from fMRI software, an assumption has prevailed that the false-positive rate was low, in the region of 5 percent.

A new analysis of the software, published open-access in the Proceedings of the National Academy of Sciences, now calls that assumption and the entire field into question. Rather like the scenario of that dead Atlantic salmon, though on a massive scale, the study concluded that the methods used in fMRI research frequently create an illusion of brain activity where there is none: by the authors’ calculation, up to 70% of the time. Worse, the researchers (Anders Eklund and Hans Knutsson in Sweden and Thomas Nichols in the UK) found that some “40% of a sample of 241 recent fMRI papers did not report correcting for multiple comparisons, meaning that many group results in the fMRI literature suffer even worse false-positive rates than [we] found.”
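To see why skipping a correction for multiple comparisons matters so much, consider a rough back-of-the-envelope sketch. It assumes every test is independent and run at the conventional 5 percent threshold, which is a simplification: neighboring regions in a brain scan are correlated, and this is not the method used in the PNAS study. The function names and the numbers of tests below are illustrative assumptions, not figures from any of the papers discussed here.

```python
def familywise_error_rate(alpha: float, n_tests: int) -> float:
    """Chance of at least one false positive across n_tests tests.

    Assumes the tests are independent (a simplification for brain scans).
    """
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test threshold that keeps the overall false-positive rate at or below alpha."""
    return alpha / n_tests


if __name__ == "__main__":
    alpha = 0.05  # the conventional 5 percent threshold
    for n_tests in (1, 10, 100, 1000):  # hypothetical numbers of comparisons
        uncorrected = familywise_error_rate(alpha, n_tests)
        corrected = familywise_error_rate(
            bonferroni_threshold(alpha, n_tests), n_tests
        )
        print(
            f"{n_tests:>5} tests: uncorrected {uncorrected:.3f}, "
            f"Bonferroni-corrected {corrected:.3f}"
        )
```

Under these assumptions, a single test has a 5 percent chance of a false alarm, but the chance of at least one false alarm climbs quickly as the number of uncorrected tests grows. The Bonferroni adjustment shown here is only one simple and conservative correction among several.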

Given this widespread unreliability of the scans relative to the frequently overstated importance attached to them, the latest blistering critique of medical and neuroscientific research by Stanford scientist John Ioannidis and colleague Denes Szucs looks very well-timed. In an extensive online analysis of the past five years of research published in 18 major psychology and cognitive neuroscience journals, including the high-impact Nature Neuroscience, Ioannidis and Szucs found that the studies suffered repeatedly from inflated effect sizes and selective reporting, owing to an “unacceptably low level” of statistical power (that is, a low probability of detecting an effect that is really there, which in turn undermines robustness and reproducibility). They add that “overall power has not improved during the past half century.”

Does that mean we’ll be spared dozens more articles promising to have revealed the “neural correlate” of envy, grief, or unhappiness? Don’t bank on it. Still, as Ioannidis and Szucs aptly note, given the tendency of the media to lend enormous credence to neuroscientific “findings,” “the power failure of the cognitive neuroscience literature is even more notable as neuroimaging (‘brain-based’) data is often perceived as ‘hard’ evidence lending special authority to claims even when they are clearly spurious.”

christopherlane.org Follow me on Twitter @christophlane