
Can AI Detect Sexual Orientation from Photos?

A new Stanford experiment says yes, but it's more complicated...

Posted September 20, 2017

A new study from Stanford claims to be able to identify whether you are gay or straight just by analyzing a photograph of your face. It also claims to determine sexual orientation more accurately than people can.

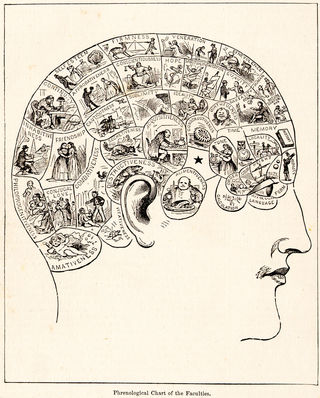

On one hand, this is in line with recent progress in artificial intelligence (AI) systems that can infer personal attributes from online data. On the other hand, it has uncomfortable privacy implications and echoes of phrenology.

First, let's look at the study. It analyzed photos from a single dating site, and only of white men and women who identified as either gay or straight. That excluded people of color, bisexuals, transgender people, and anyone not on the site. The algorithm examined both facial features and features of the photos themselves:

The research found that gay men and women tended to have “gender-atypical” features, expressions and “grooming styles,” essentially meaning gay men appeared more feminine and vice versa. The data also identified certain trends, including that gay men had narrower jaws, longer noses, and larger foreheads than straight men, and that gay women had larger jaws and smaller foreheads compared to straight women. (summary from The Guardian)

So is there some genetic link that makes gay people look physically different from straight people? Some AI researchers may want to believe so because the algorithm seems to suggest it, but this study comes nowhere near supporting that conclusion. Too many people were excluded from the sample. What it really captures are the beauty standards of this particular online dating site.

Even so, if people believe the algorithm can detect sexual orientation, it opens the door to surveillance and false assumptions. Will people start running photos through the algorithm and drawing conclusions about the people in them? What if that happens in places like Chechnya, where gay people are actively being jailed or executed?

What this project highlights is the shaky ethical ground that AI researchers are treading on. I am a computer scientist who builds AI that infers things about people, but I would not run a study like this myself. Its results say more about the researchers' dataset than about the trait they studied, yet they have broad societal implications if people believe them. The real worry about AI should not be that it becomes so good that we face a Skynet-style takeover. Rather, it is that people with power will make incorrect assumptions about what AI can do, and will use it to make life-altering decisions about people who have no recourse and never consented to its use.

References

Wang, Yilun, and Michal Kosinski. "Deep neural networks are more accurate than humans at detecting sexual orientation from facial images." (2017).