What Do You Know? The Pros and Cons of a Scientific Approach

Part 2: Is the scientific method the best way to establish knowledge?

Updated September 14, 2023 Reviewed by Gary Drevitch

In Part 1 of this series, I discussed the illusion of knowledge in the context of COVID-19 and explained how much of what we think we know is actually belief, taken as truth, because it came from a source in which we have faith.

---

Philosophers as far back as Plato have defined knowledge as "justified true belief (JTB)." However, skeptics and Pyrrhonists have challenged this notion for centuries, reasoning that the same evidence one person considers valid in justifying a belief as "true," another may consider biased or incomplete (David McClean, personal communication, 2020).

Against this backdrop, thinkers ranging from René Descartes in the 17th century to Charles Sanders Peirce in the 19th promoted the scientific method as the best means of acquiring justifiable evidence to establish beliefs as true knowledge. In his influential 1877 essay "The Fixation of Belief," Peirce refined Aristotle's approach and extolled the virtues of the scientific method over other ways of knowing. These included blindly accepting facts from authority figures and relying on pure reasoning to establish knowledge without testing one's conclusions in the real world.

Indeed, the scientific method, with its insistence on direct observation and the objective testing of hypotheses, has been a major advance for our civilization, allowing us to catapult over superstitions and other belief systems that were invalid or unreliable. It is still the best system we have for helping our species progressively advance toward truth. However, the scientific method, and indeed the scientific process as a whole, has limits in its ability to yield justifiable evidence for establishing knowledge.

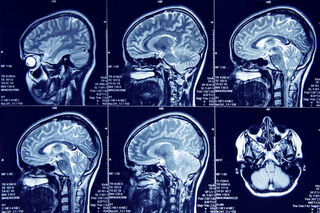

Over the past decade, we have learned that many scientific findings we had taken as fact have been retracted, due to either error or fraud (Brainard & You, 2018). As microbiologist Dr. Elisabeth Bik notes in a New York Times op-ed, advancing technology is only making things worse. In psychology specifically, we have been grappling since 2011 with our own reckoning, known as the "replication crisis" (Pashler & Wagenmakers, 2012). Though less publicized, a replication crisis in neuroimaging may end up having more serious consequences. In 2016, a team led by researchers in Sweden (Eklund et al., 2016) reported that widely used fMRI analysis software could produce inflated false-positive rates. By their initial estimate, this flaw called into question the validity of as many as 40,000 fMRI studies conducted over a 15-year period.

Part of the problem with science is that as we try to study more sophisticated phenomena, we need more sophisticated equipment. This equipment removes us further and further from direct observation and requires that we place our scientific faith in machines and in other people's work. As Bec Crew (2016) points out in a summary of Eklund et al.'s findings, "when scientists are interpreting data from an fMRI machine, they’re not looking at the actual brain... what they're looking at is an image of the brain divided into tiny 'voxels', then interpreted by a computer program."

Indeed, even mathematics, the purest of the STEM fields, seems to be suffering from a crisis of confidence, as the validity of many proofs that form the foundation of modern mathematics has recently been called into question. Mathematician Kevin Buzzard told attendees at a 2019 conference that "the greatest proofs have become so complex that practically no human on earth can understand all of their details, let alone verify them," and he fears that many proofs widely considered to be true are wrong (Rorvig, 2019). Paraphrasing Buzzard, journalist Mordechai Rorvig explains that "new proofs by professional mathematicians tend to rely on a whole host of prior results that have already been published and understood...but there are many cases where the prior proofs used to build new proofs are clearly not understood." In philosophy, this is called the problem of infinite regress, in which each piece of current knowledge depends on some earlier piece of knowledge that is blindly taken as true or cannot be proven true, ad infinitum.

Getting back to science, let's assume a medical researcher, Dr. Feelbetter, wants to run an experiment to determine whether a lower dose of the antibiotic azithromycin would be as effective as the standard 500 mg dose for treating acute sinusitis (i.e., sinus infections) while causing fewer side effects. So she designs a randomized, double-blind study comparing a 250 mg dose with the 500 mg dose and with a placebo control.

In every experiment, the principal investigator controls all of the parameters of the study and is forced to make subjective decisions about virtually every aspect of the investigation. In this particular experiment, Dr. Feelbetter has an endless list of decisions to make, including:

a) Who will serve as research participants? (Adults? Children? Men? Women? Members of a specific ethnic group susceptible to sinus infections? etc.)

b) How will "acute sinusitis" be defined, measured and diagnosed?

c) How will the drug, azithromycin, be administered? (Tablet? Liquid suspension? IV?)

d) How will the effectiveness of the drug be assessed? (X-ray scans of the nose? Physician exam? Patient self-report?)

e) Which statistical procedures will be used to analyze the data, and which variables, from an infinite set, will be statistically controlled in the analyses?

This is but a small fraction of the types of decisions that researchers need to make in scientific experiments, and the reality is that tremendous subjectivity goes into each of these decisions. How do we know whether Dr. Feelbetter, or any of the scientists we trust to conduct the research our lives depend on, made the right decisions in each of these areas, or resisted the temptation to engage in fraudulent practices?

One of the benefits of getting a Ph.D. in psychology is that we are trained not only as clinicians but also as scientists, and as scientists, we are required to regularly present our research to peers at academic conferences and weekly brown bag meetings. Presenting one's research at such venues can be a terrifying experience, because it is commonplace for other scientists to tear it apart when they disagree with one's methodology or statistics. This is how things have always been in science, and this reality led Max Planck, a pioneer of quantum physics, to famously say:

"A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die, and a new generation grows up that is familiar with it."

Contrary to what many people think, most issues in science are not settled, a point made by NYU physicist Steven Koonin in his book about climate change, Unsettled. If climate change is too abstract for you, consider instead the uncertainty in the scientific community about whether masks are effective in stopping the spread of COVID.

I mention these things not because I seek to attack science, but because defending it against pseudoscience and conspiracy theories first requires setting realistic expectations for scientific inquiry. I personally have faith in the majority of scientists and the majority of research findings published in peer-reviewed journals because, in my own work, this practice has served me well. But I must concede that while I have faith in the majority of peer-reviewed research findings, I don't know about them in the same way I know about the effects of gravity on my body when I jump in the air and come crashing back down to Earth (a point that was made a bit more comically by the slackers on It's Always Sunny in Philadelphia).

Furthermore, I must confess that my experiences in science have made me aware of the limits of our ability to know things, even when using the scientific method, and these experiences have bolstered my faith in many other things that cannot be proven by science. In the end, I believe that we cannot hope to attain knowledge; we can only approach it. Our best efforts at knowing are grounded in our direct experiences (i.e., what William James called "radical empiricism"), validated by the experiences of others, and investigated from multiple perspectives.

-----

I invite you to read Part 3 of my What Do You Know? series, which focuses on how postmodernist thinking has eroded our confidence in what we know, and how that erosion has been exploited by people with a range of intentions.

References

Brainard, J., & You, J. (2018). What a massive database of retracted papers reveals about science publishing’s ‘death penalty.’ Science Magazine, October 18, 2018.

Pashler, H., & Wagenmakers, E.-J. (2012). Editors' introduction to the special section on replicability in psychological science: A crisis of confidence? Perspectives on Psychological Science, 7(6), 528–530.

Eklund, A., Nichols, T. E., & Knutsson, H. (2016). Cluster failure: Why fMRI inferences for spatial extent have inflated false-positive rates. PNAS, 113(28), 7900–7905.

Rorvig, M. (2019). Number theorist fears all published math is wrong. Vice News, September 26, 2019. https://www.vice.com/en_us/article/8xwm54/number-theorist-fears-all-published-math-is-wrong-actually

Crew, B. (2016). A bug in fMRI software could invalidate 15 years of brain research. ScienceAlert, July 6, 2016. https://www.sciencealert.com/a-bug-in-fmri-software-could-invalidate-decades-of-brain-research-scientists-discover