Do Professors' Fixed Mindsets Harm Women in STEM?

"Incredible results" may be too improbable to be believed.

Posted September 7, 2021 Reviewed by Chloe Williams

Key points

- A new study purports to show that STEM professors who convey a fixed mindset undermine women's performance more so than men's.

- There are several limitations in the study that call this result into question, such as implausibly large effect sizes.

- An experimental manipulation in the study altered many independent variables, making it unclear if the results are due to mindset differences.

Merriam-Webster's online dictionary defines incredible as:

- too extraordinary and improbable to be believed

- amazing, extraordinary

Psychologists have a long history of producing "incredible results" that they have framed in the second sense, but which usually turned out to be "incredible" in the first sense. For peer-reviewed articles demonstrating this, go here or here; for readable posts and essays on this, go here or here.

This gets us to:

"Professors Who Signal a Fixed Mindset About Ability Undermine Women's Performance in STEM"

That is the title of an article that recently was accepted for publication in Social Psychological and Personality Science (available here). The paper reports two studies, an experiment and a survey. In the authors' words: "these findings suggest that STEM professors who communicate fixed mindset beliefs create a context of stereotype threat in their courses, undermining women’s—but not men’s—performance."

Maybe they do, but in the rest of this post, I explain why there are reasons to doubt.

The Impossibly Large Effects

Nick Brown first pointed out the implausibility of some of the main findings on Twitter. The red flag he caught was the absurdly large effect sizes. The average effect size in social psychology is about d=.4, which corresponds to a correlation of approximately .20 between two variables. On page 5, the authors of the recent paper wrote, "the effect of the professor’s fixed (vs. growth) mindset beliefs was nearly twice as large for women (d = 12.19) than it was for men (d = 6.10)."

To put this in some perspective, "A very large effect size (r = .40 or greater [about d=0.8]) in the context of psychological research is likely to be a gross overestimate that will rarely be found in a large sample or in a replication" (Funder & Ozer, 2019). If d=.8 is likely to be a "gross overestimate," the only possible descriptor of d's of 6 and 12 is "incredible" (in the first meaning). This would be kind of like a study finding a height difference between men and women of four feet, rather than four inches.
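For readers who want to check the d-to-r correspondence themselves, here is a minimal sketch. It assumes the standard textbook conversion for two equal-sized groups, r = d / sqrt(d² + 4); the function names are mine, not anything from the paper.

```python
import math

def d_to_r(d: float) -> float:
    """Convert Cohen's d to a correlation r (assumes two equal-sized groups)."""
    return d / math.sqrt(d * d + 4)

def r_to_d(r: float) -> float:
    """Convert a correlation r back to Cohen's d (same equal-groups assumption)."""
    return 2 * r / math.sqrt(1 - r * r)

# Typical social psychology effect, then the two effects reported in the paper.
for d in (0.4, 6.10, 12.19):
    print(f"d = {d:5.2f}  ->  r = {d_to_r(d):.3f}")

# d = 0.4 comes out near r = .20; the reported d's come out near r = .95 and r = .99.
print(f"r = 0.40  ->  d = {r_to_d(0.40):.2f}")
```

Under this conversion, d's of 6 and 12 correspond to correlations of roughly .95 and .99, which is to say nearly perfect prediction of the outcome from the condition.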

What Is Going On?

Effect sizes expressed as d are mean differences between groups divided by standard deviations.

I am going to guess that most readers of this post have a pretty good understanding of what means or averages are. Standard deviations may be more of a mixed bag. They are a measure of how much the people in each condition differ from each other (some may have scores of 2, others 3, yet others 4 or 5 or more; the standard deviation puts a number on how much of this variability there is). If you are familiar with standard deviations, great. But if not, you can get the gist of the problem without being a stats professor.

In short, they used the wrong standard deviations, ones that are way too small. This is knowable because the standard deviation they reported implies an incredible (in the first sense) set of results. For example, for women, the reported means for gender stereotype endorsement were 2.06 and 3.95, respectively, in the Growth vs. Fixed Mindset conditions (higher score=more stereotype endorsement). But the standard deviations were only .15 and .16, respectively. They reported in Table 3 a 95 percent confidence interval for the Growth condition of 1.76, 2.35. This means that 95 percent of scores fell between 1.76 and 2.35.
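The arithmetic can be checked from the numbers quoted above. This is a rough sketch, not the authors' analysis: it assumes equal group sizes (so the pooled standard deviation is the root mean square of the two reported values) and a normal approximation for the spread of individual scores.

```python
import math

def cohens_d(mean_a: float, mean_b: float, sd_a: float, sd_b: float) -> float:
    """Mean difference divided by the pooled SD (equal group sizes assumed)."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_b - mean_a) / pooled_sd

# Values quoted in this post for women: Growth vs. Fixed mindset conditions.
growth_mean, fixed_mean = 2.06, 3.95
growth_sd, fixed_sd = 0.15, 0.16

d = cohens_d(growth_mean, fixed_mean, growth_sd, fixed_sd)
print(f"d = {d:.2f}")  # reproduces the reported d of about 12.19

# If .15 really were the standard deviation, roughly 95 percent of individual
# scores would sit within 1.96 SDs of the mean (normal approximation).
low = growth_mean - 1.96 * growth_sd
high = growth_mean + 1.96 * growth_sd
print(f"implied range of scores: {low:.2f} to {high:.2f}")
```

The reported d of 12.19 falls straight out of the reported means and standard deviations, so the giant effect size is not a typo; it follows from how small those standard deviations are.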

This is beyond implausible. The response options for this measure ranged from 1 to 6, in whole numbers. The only whole number inside that interval is 2, so their 95 percent confidence interval means that 95 percent of the responses were exactly 2. They had 133 women spread over the two mindset conditions, so, even though they did not report the number of people (N) per condition, we can presume it was somewhere around 65 (unless they also failed to randomly assign participants, and I will give them the benefit of the doubt here). This means that about 62 of their respondents all had the exact same response of 2. This is about what one would expect if respondents were instructed to all give "2" as an answer to the question (a few would not pay attention and give a different response anyway).
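Another way to see the problem, sketched below under the assumption (taken from the description above) that each score is a single whole-number response. For whole-number scores with a given mean, the smallest possible spread occurs when every response is one of the two integers on either side of that mean. The function name is hypothetical, and the calculation uses the population formula rather than an exact finite-sample search.

```python
import math

def min_sd_for_integer_scores(mean: float) -> float:
    """Smallest possible (population) SD of whole-number scores with this mean.

    The minimum is reached when every score is floor(mean) or ceil(mean);
    the share of higher scores then equals the fractional part of the mean.
    """
    frac = mean - math.floor(mean)
    return math.sqrt(frac * (1 - frac))

# Reported means and standard deviations quoted above (women, Growth and Fixed).
for label, mean, reported_sd in (("Growth", 2.06, 0.15), ("Fixed", 3.95, 0.16)):
    floor_sd = min_sd_for_integer_scores(mean)
    print(f"{label}: smallest possible SD = {floor_sd:.3f}, reported = {reported_sd}")

# About how many of ~65 respondents is 95 percent?
print(round(0.95 * 65))
```

Under these assumptions, the smallest achievable standard deviations are about .24 and .22, both larger than the .15 and .16 reported.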

Statistical Significance

A slew of the key results are right around p=.05. In lay terms, "statistical significance" (p<.05) has long been used as a gatekeeper allowing researchers to claim, "you can really take our results seriously." This arbitrary threshold has been: 1. rejected by many statisticians and methodologists (e.g., Wasserstein et al, 2019); and 2. shown to correspond to results that are considerably less likely to replicate than results with lower p-values (Aarts et al, 2015).

So what did this mindset study find? Because the study emphasizes mindset effects on women, the authors needed to show that women were affected more than men by their manipulation. This is tested by the statistical interaction between participant sex and the mindset manipulation. Those p-values, across two studies and three dependent variables, were reported in Table 2 and were: .054, .001, .048, .785, .234, and .003. The strength of this evidence for supporting statements (such as the title) leaves more than a little to be desired.
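A quick tally of those six interaction p-values, as a small sketch. The .04 to .06 window for "right around .05" is my own illustrative choice, not a standard cutoff.

```python
# Interaction p-values quoted above from Table 2 of the paper.
p_values = [0.054, 0.001, 0.048, 0.785, 0.234, 0.003]

# Conventional threshold for "statistical significance".
below_05 = [p for p in p_values if p < 0.05]

# Illustrative window for "right around .05" (an assumption of this sketch).
near_05 = [p for p in p_values if 0.04 <= p <= 0.06]

print(f"{len(below_05)} of {len(p_values)} are below .05: {below_05}")
print(f"{len(near_05)} of {len(p_values)} are right around .05: {near_05}")
```

Only half of the six tests clear the conventional threshold, and one of those three does so by a hair.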

The Strange Manipulation

The first study had a very strange experimental manipulation (the second was a survey without an experimental manipulation). In the first study, the researchers claimed to have manipulated whether the professor communicated a fixed or growth mindset via the syllabus.

Fortunately, however, the authors publicly posted their materials. So I examined the syllabi for the fixed and growth mindset manipulations. In a strong experiment, researchers manipulate one thing and one thing only between conditions. For example, one group might practice 40 minutes a day, and the other group not at all (to test for practice effects); or one group might read resumes of identical job applicants who differ only in their sex (to test for gender bias).

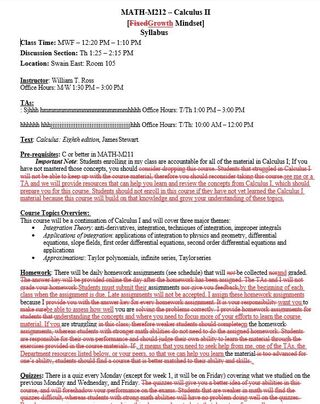

But that is not what I discovered in this mindset study. Instead, the syllabi were vastly different on everything from grading to how much assistance and feedback the professor offered, and much more. The differences seemed so extensive that I used Microsoft Word's "Compare" feature to examine more precisely how similar or different the syllabi actually were. Changes show up in red. This is shown here:

The only important thing here is how much red there is, which shows how vastly different the two syllabi are. This is important because they did not report testing anything conveyed by these syllabi other than whether it communicated fixed vs. growth mindset, or endorsement of social stereotypes. It is possible that the syllabi also conveyed professorial warmth/coldness, interest in students and teaching, and even ease of getting an A in the course (and probably many more things). This is important because, even if the results of the study are completely sound as reported, it is not at all clear that any downstream effects of the syllabi on their dependent variables were produced by fixed vs. growth mindset, rather than one or more of the (many) other things communicated by the syllabi. Feel free to replicate this yourself; their materials are publicly available online.
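If you would rather put a number on the overlap than eyeball the red, here is one possible approach using Python's standard `difflib` module instead of Word's "Compare." The two text snippets are invented placeholders, not the actual syllabi; to replicate, paste in the text of the posted materials.

```python
import difflib

def word_similarity(text_a: str, text_b: str) -> float:
    """Share of words two documents have in common, in order (0 = none, 1 = identical)."""
    words_a = text_a.split()
    words_b = text_b.split()
    matcher = difflib.SequenceMatcher(None, words_a, words_b, autojunk=False)
    return matcher.ratio()

# Placeholder text only; substitute the two syllabi from the authors' materials.
syllabus_growth = "Office hours are held weekly and feedback is given on every assignment"
syllabus_fixed = "Office hours are by appointment only and all grades are final"

print(f"similarity: {word_similarity(syllabus_growth, syllabus_fixed):.2f}")
```

In a tightly controlled manipulation, one would expect a similarity close to 1, with the differences confined to the few sentences carrying the mindset message.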

Other Research

Of course, this is only a single paper reporting two studies. However, canonical conclusions, aka "received wisdom," are built on the foundation of such studies. Indeed, both a recent meta-analysis (Sisk et al 2018) and a national experiment (Yeager et al 2019) found statistically significant mindset effects that are plausibly interpretable as trivially above zero. Until stronger evidence comes in supporting mindset effects, this seems to be much ado about hardly anything.

Bottom Lines

1. How it's possible that four authors plus at least two reviewers and an editor caught none of this is a pressing question if one cares about the validity of articles published in psychology journals that wish to claim scientific credibility.

2. Whether any of the findings in this article are credible is probably unknowable until its findings are replicated by independent teams of scientists.

3. Peer review does not provide the validity guarantee that it is often cracked up to provide.

References

Aarts et al (2015). Estimating the reproducibility of psychological science. Science, 349, 943.

Canning et al (2021, online version). Professors who signal a fixed mindset about ability undermine women's performance in STEM. Social Psychological and Personality Science.

Funder & Ozer (2019). Evaluating effect size in psychological research: Sense and nonsense. Advances in Methods and Practices in Psychological Science, 2, 156-168.

Sisk et al (2018). To what extent and under what circumstances are growth mind-sets important to academic achievement? Two meta-analyses. Psychological Science, 29, 549-571.

Wasserstein et al (2019). Moving to a world beyond "p<.05." The American Statistician, 73, 1-19.

Yeager et al (2019). A national experiment reveals where a growth mindset improves achievement. Nature, 573, 364-369.