
How to Create Scientific Myths Without Really Trying

Suppressed findings, selective reporting, and citation biases.

Posted January 28, 2020 Reviewed by Jessica Schrader

How does scientific psychology create false myths, manufacturing certainty out of uncertain data?

Wait. This can't be. I must be wildly overstating something. False? Myths? It's science!

There were experiments! They had "statistically significant findings"! There are a zillion published studies; they can't all be wrong. Can they?

It's the wrong set of questions and objections. It is not that all the studies are "wrong." It's that the conclusions can become unhinged from the evidence.

This is possible because of selectivity in reporting and describing results. You can think of it as "data-laundering," an empirical cousin of "idea laundering," which refers to the ways in which some academic fields have created entire intellectual ecosystems (journals, conferences, courses) that package their political values and goals as "scholarship."

Data-laundering is the related process by which data high in uncertainty is transformed to justify clear and compelling conclusions. How is that possible? De Vries et al. showed how:

De Vries et al. (2018) examined over 100 trials of the effectiveness of antidepressant interventions and found, in essence, that the published, peer-reviewed scientific literature was little better than pure myth. What is going on here?

First, a disclaimer: Nothing here constitutes an evaluation of any particular antidepressant intervention. If you are currently on an antidepressant or may be prescribed one, it is up to you to discuss this with your doctor and to do your own due diligence regarding the strength of the scientific evidence on its effectiveness and safety. Good questions are always:

1. Doctor, can you point me to the scientific evidence regarding this medication's effectiveness?

2. What is known about negative side effects?

I strongly recommend this guest blog by health psychologist Richard Contrada, Be a Well-Informed, Active Self-Manager of Your Medical Care, which goes into some depth on how a skeptical attitude towards medical interventions enhances one's ability to distinguish good interventions from bad ones. It can possibly even save your life.

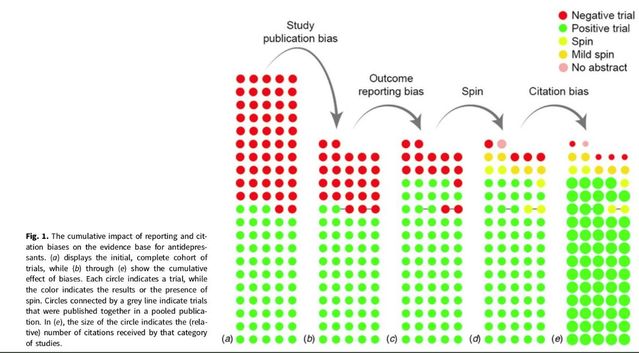

Back to De Vries et al's study. The first column on the left (below) shows the raw studies. About half the trials were negative (red, meaning either no effect or an effect in the wrong direction, e.g., the intervention did more harm than good). About half were positive (green, the intervention seemed to alleviate depression).

The second column from the left shows which got published (publication bias). You can see visually that even though the studies were split almost 50-50 regarding what they found, about two-thirds of the studies that got published had positive results, and only about one-third of those getting negative results got published.

The third column shows "outcome reporting bias." That refers to selectivity, or slant, with respect to what got reported.

So, to use a hypothetical example that I made up just to explain this point, let's say some study had three outcomes: One showed that the intervention made things worse, one showed no effect, and one showed it made things better. Overall, there was no effect (negative study). But if the researchers only report the positive result, through the magic of selective reporting, the study can appear to have produced a positive result. Most studies are now "positive."
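To make that hypothetical concrete, here is a minimal Python sketch. Everything in it is an assumption made up for illustration: the outcome names, the effect values, and the idea of summarizing a trial by simply averaging its outcomes (real trials are analyzed with far more care). It is not taken from De Vries et al.; it only shows how dropping the unfavorable outcomes changes the apparent result.

```python
from statistics import mean

# Hypothetical effect estimates for one made-up trial (positive = improvement).
outcomes = {
    "outcome_a": -0.3,  # intervention made things worse
    "outcome_b": 0.0,   # no effect
    "outcome_c": 0.3,   # intervention made things better
}

def overall_effect(results):
    """Average effect across every outcome that is passed in."""
    return mean(results.values())

def report_only_favorable(results):
    """Selective reporting: keep only outcomes that favor the intervention."""
    return {name: effect for name, effect in results.items() if effect > 0}

full_picture = overall_effect(outcomes)
reported_picture = overall_effect(report_only_favorable(outcomes))

print(f"All outcomes:      {full_picture:+.2f}")
print(f"Reported outcomes: {reported_picture:+.2f}")
```

With all three outcomes on the table, the average effect is zero. Report only the favorable one, and the same trial looks like a success.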

The fourth column from the left refers to spin: Even when a paper honestly reported mixed or weak outcomes, the abstract or discussion characterized the intervention as effective. Nearly all studies are now "positive."

The final column is citation bias. Even after all that? There is still more bias. Studies "finding" (or at least spun as finding) positive effects are cited nearly three times as much as studies finding negative effects!
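A quick back-of-the-envelope sketch in Python shows what a citation gap of that size does to the literature a reader actually encounters. The study counts below are hypothetical, chosen only to represent an even split, and the ratio of 3 rounds up the "nearly three times" figure; none of these numbers are De Vries et al.'s data.

```python
def positive_citation_share(n_positive, n_negative, citation_ratio):
    """Share of all citations that point at positive studies.

    citation_ratio: how many times more often a positive study is cited
    than a negative one (each negative study counts as one citation here).
    """
    positive_citations = n_positive * citation_ratio
    negative_citations = n_negative * 1
    return positive_citations / (positive_citations + negative_citations)

# Hypothetical literature: equal numbers of positive and negative studies,
# with positive studies cited three times as often.
share = positive_citation_share(n_positive=50, n_negative=50, citation_ratio=3)
print(f"{share:.0%} of citations point to positive studies")
```

Under those assumptions, an evenly split set of studies turns into a citation record in which three out of four citations point to a positive result.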

By the end? We have "scientific" literature literally filled with celebrations of the effectiveness of these anti-depression interventions built on actual scientific literature composed of half-failures. And that, gentle reader, is how scientific myths are created using "normal" scientific processes. Do these problems go well beyond antidepressant research? For now, no one knows for sure, but:

1. There is no reason to think these processes are restricted to antidepressant research. I'd certainly adopt "applies widely" as a working hypothesis, held tentatively pending disconfirmation.

2. This article, subtitled "How Ugly Initial Results Metamorphosize Into Beautiful Articles," showed that in business/management research, dissertations reporting mixed and unclear findings were often transformed into clear, compelling narratives by the time they got published, by the simple magic of the selective reporting of results.

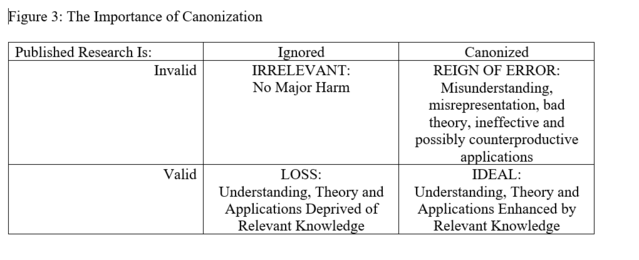

3. In a forthcoming paper, we call this the "canonization problem." Canonization refers to the ideas that become widely accepted as true, especially by other scientists. This figure is from that paper:

The situation here, with both the antidepressant and management research, is related but slightly different. It is not that the underlying research is "invalid." It is that the full scope of findings is mixed, but the mixed nature of those findings does not make it into what gets canonized. You still get a "reign of error" because the science seems to justify a full-throated embrace of some finding (e.g., "the antidepressants work, eureka!"), whereas that conclusion is false: The underlying research does not justify an enthusiastic embrace of the studied antidepressants.

This sort of problem, canonizing findings that the underlying science does not justify, is something I have blogged about repeatedly, such as here, here, here, here, and here.

No, Virginia, I do not think this is remotely restricted to antidepressant research.