Self-Harm

The Other Twitter Files

Twitter is providing a haven for self-harm.

Posted December 12, 2022 Reviewed by Hara Estroff Marano

Key points

- Journalists are exposing Twitter's politically biased and capricious content-moderation protocols—but self-harm content continues unabated.

- Twitter stifled criticism of its failure to enforce its prohibition on self-harm content, while continuing to ignore self-harm posts.

- Nearly 25% of girls 12 to 16 are intentionally injuring themselves, mostly with knives and razor blades. They need help, not a platform.

While Twitter was busy interfering with “Libs of TikTok,” it was largely ignoring the increase in graphic images and videos of young people cutting themselves, sometimes so deeply that their wounds required ER visits. Journalists are now exposing Twitter's politically biased and capricious content-moderation protocols. But while Twitter personnel were censoring ideas and deplatforming people they didn’t like, the platform was paying virtually no attention to the self-harm content its younger users were posting and encouraging.

In October 2021, 5Rights, a UK-based children’s digital rights charity, reported that Twitter and other platforms were “systematically endangering children online” by making it easy for children to connect with others who celebrate self-harm. Specifically, Twitter’s algorithmic recommendation system steered accounts with child-aged avatars that searched for the term “self-harm” toward users who were sharing photographs and videos of themselves cutting. The organization alerted Twitter to specific self-harm search terms such as #shtwt, #ouchietwt, and #sliceytweet.

Although Twitter had been fully informed, in August 2022 my colleagues and I at the Network Contagion Research Institute (NCRI) found a 500% increase in average mentions of shtwt, the global search term for “self-harm twitter,” along with increases in other search terms that help people find graphic and bloody self-harm images, creating a virtual “community” of self-harmers. We published a paper, featured in a Washington Post article on August 30, exposing some of the codewords that self-harmers use on Twitter to find one another, including “shtwt.” We documented the increase in the number of accounts posting graphic self-harm images and videos, specifically of “cutting,” and we noted the celebratory comments on such tweets.

These tweets, and the comments on them that encourage self-harm, are all in direct violation of Twitter’s content policy. And the images and comments are easy to find. As we wrote in our report: “Terms associated with layers of skin such as styrotwt, beanstwt, laffytaffytwt, and others are mentioned thousands of times per month allowing users to gamify and market self-harm. Wounds associated with ‘beanstwt’ tweets [referring to the subcutaneous layer] are extremely severe and are often the tweets and images most liked and shared.”

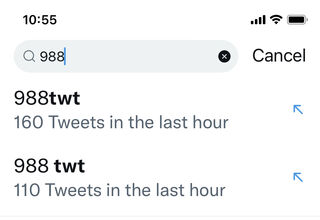

As a result of our report and the Washington Post article, Twitter began displaying a 988 helpline at the top of search results when people used “shtwt” (for “self-harm twitter”). But the self-harm tweets still appeared immediately below it. Worse, shortly after the helpline began appearing in shtwt searches, “988twt” became the new popular self-harm search term, tweeted hundreds of times per hour to tag horrific self-harm posts.

On October 3, I criticized Twitter for how it handles self-harm content: it does not enforce its “gratuitous gore” policy for self-harm images, nor does it enforce its policy prohibiting tweets that “promote or encourage suicide or self-harm.” To illustrate how easy Twitter makes it to find posts with extremely graphic images and videos depicting severe self-harm (cutting), I tweeted a screenshot of a horrific self-harm post along with some of the self-harm codewords contained in the tweet, including 988twt. I also tweeted two of the encouraging comments on that post. I wrote, “A cursory search for terms like shtwt beanstwt fasciatwt bloodtwt ... etc. turns up graphic images of self-harm and delighted responses encouraging more. (Images and comments below are extremely disturbing.) KEEP YOUR CHILDREN OFF SOCIAL MEDIA.”

In response, Twitter locked me out of my account (@PamelaParesky), claiming I had violated the same “gratuitous gore” policy I had criticized it for not taking seriously. Meanwhile, the gratuitously gory tweet I had screencapped remained accessible and continued to contribute to the spread and increasing severity of self-harming behaviors.

Soon after I was locked out, my NCRI colleague Alex Goldenberg checked whether the original self-harm tweet was still up. (It was.) Twitter then suggested another self-harm tweet to him (“you might like…”). A comment on that tweet even included instructions for making a common disposable razor “more easy to handle” for self-cutting.

Two days after my lockout, the account holder posted the “stats” for the original post I had been locked out for screencapping and sharing. At that point, it had been up for one week. It had 2,240 likes, 287 retweets, and 67,332 impressions; in other words, it had been seen 67,332 times in a single week. As we reported in our NCRI paper, self-harm posts can garner thousands of likes, hundreds of retweets, and dozens of admiring comments, including many asking how to duplicate the self-harm (e.g., “what did you use?”), even when they come from accounts with only a few hundred followers.

My first appeal to Twitter to restore access to my account was quickly denied. Several more attempts elicited no response until more than ten days later, when Twitter confirmed that it had received my newest appeal. The option of deleting the tweet to regain access to my account was always available, with the caveat that “by clicking delete, you acknowledge that your Tweet violated the Twitter Rules.” I was not willing to falsely confess to having violated Twitter’s rules and risk additional problems in the future.

On October 16, I wrote a version of this article, which Wesley Yang published on his Substack and several high-profile Twitter users circulated. Twitter never notified me of the outcome of my appeal, but within hours of the article's publication, my account was unlocked.

Nearly a quarter of girls between 12 and 16 are intentionally injuring themselves, mostly with knives and razor blades. The rate is even higher among teens from upper-middle-class, highly educated families. Those who self-harm are six times more likely to be hospitalized for mental illness than those who don’t, and more than four times as likely to attempt suicide. According to the National Institute of Mental Health, suicide is now the second leading cause of death among children 10 to 14 and the third leading cause of death among those 15 to 24.

After reading my article, writer Christina Buttons tried some of the codewords on Twitter and came across an image of a girl who had sliced into her arm in a school bathroom. Christina did some sleuthing and, based on what she saw in the background of the photo, was able to identify the school and alert school personnel.

While attention is rightly focused on Twitter’s political bias and related questionable content moderation, let’s hope some attention is also paid to helping Twitter’s young users by enforcing the platform's prohibitions on posting and celebrating images of self-harm. Young people who intentionally harm themselves need help, not a platform. ♦

Pamela Paresky, PhD, is a 2022-23 Visiting Fellow at the Johns Hopkins SNF Agora Institute, a Senior Scholar at the Network Contagion Research Institute, and the author of the guided journal A Year of Kindness. Dr. Paresky's opinions are her own and should not be considered official positions of any organization with which she is affiliated. Follow her on Twitter @PamelaParesky, and read her newsletter at Paresky.Substack.com.