AI Can Increase the Importance of Human Expertise

Defaulting to AI-driven outputs can lead to unintended consequences.

Posted July 5, 2023 | Reviewed by Davia Sills

Key points

- The increasing availability of AI-driven tools makes it possible to accelerate the decision-making process.

- Many of these decisions carry high consequences, and merely defaulting to the AI can pose severe risks.

- The presence of human expertise can provide some oversight over AI-driven decisions.

Prior to the internet (for those of us who remember), it was a lot more difficult to find information. If you wanted to research some topic, especially a complex one, it could be quite an arduous endeavor. It often required relying on traditional sources, such as books, academic journals, or personal connections with knowledgeable individuals. As such, individuals with specialized knowledge or skills were highly valued because they possessed information not readily accessible to everyone.

The internet revolutionized the availability and dissemination of information. It made vast amounts of knowledge accessible to anyone with an internet connection, and that accessibility has only grown as internet speeds have increased. Search engines and online databases made it possible for people to quickly find information on almost any topic, reducing reliance on the expertise held by a select few.

But the internet also made it harder to judge the credibility and accuracy of information. Those without the expertise to distinguish credible information from non-credible information could easily research their way into questionable conclusions (e.g., the Earth is flat, vaccines cause autism). And we saw a rise in people claiming they had “done their own research” to justify their views, regardless of the legitimacy of the views they endorsed.

While the internet made information more accessible, it also amplified the importance of expertise in helping individuals make sense of the overwhelming amount of available information. Expertise allowed for deeper understanding, analysis, and synthesis of knowledge, enabling individuals to make more informed decisions and navigate complex subjects more effectively.

Fast-forward to today and the increasing availability of AI-driven tools for research and decision-making. While many of these tools are narrow in scope, the introduction of more general-purpose AI, like Bard and ChatGPT, makes it possible for people to bypass the process of researching a topic and building an argument and head straight to the conclusion or decision. The dangers of this, though, have been on full display recently, such as when a professor incorrectly flunked all his students for cheating (because ChatGPT told him they had) or when a lawyer used ChatGPT for legal research only to find that the cases he had cited didn’t exist (he was subsequently sanctioned).

But even with narrower AI, we’re seeing AI make recommendations, such as whom to lay off or whether someone should be denied opioids due to likely misuse (I have written about both previously). Such uses assume that the AI derived its output from sound data and sound logic, an assumption that is often unverifiable (Papazoglou, 2023).

All this brings me back to the title of this post. Though AI makes it easier to outsource decision-making, the quality of the output any AI produces varies enormously. And that leaves us with a choice. We can either default to the AI’s output, or we can treat that output merely as one source of data (perhaps even an important one) in the decision-making process. The presence of legitimate human expertise makes the second choice much more feasible.

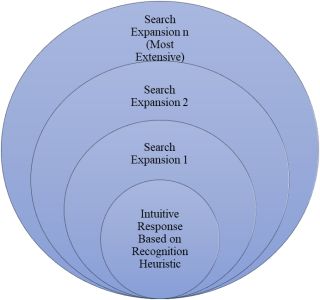

The presence of human expertise makes it possible to assess the intuitive plausibility and validity of the output produced by an AI. Though intuition often gets denigrated in research and practitioner writings about decision-making [1], intuition is the means through which most experts access their knowledge [2] (Julmi, 2019; Simon, 1987). As I wrote when discussing the potential adverse effects of AI constraints on human decision-making, decision situations typically elicit an initial intuitive response (Figure 1). The intuitive responses of legitimate experts are substantially more likely to be valid than those of non-experts. As such, experts are in a better position to evaluate the quality of the outputs produced by an AI and to adjust those outputs when necessary.

So, whether we’re talking about layoffs, opioid prescriptions, healthcare, financial investments, or any other complex decision, a lack of human expertise to evaluate the quality of what the AI produces can lead to blanket acceptance of that output, whether it is a free-form argument produced by ChatGPT or a recommendation to deny a patient an opioid prescription. But no algorithm is ever going to be accurate 100 percent of the time; there will always be some degree of error. Human expertise therefore provides a check on an AI’s outputs, and that check matters because it is humans, not the AI, who experience the repercussions of the decisions that are made.

A benign example involves the use of GPS, an AI-driven decision tool. For most trips, GPS will provide a reasonable route to get someone from Point A to Point B and do so accurately. It will also offer an estimated time of arrival (ETA), which, if things go as planned, is also likely to be reasonably accurate.

What it cannot accurately account for, however, are unplanned slowdowns, the exact length of an expected slowdown (such as in a work zone) [3], or how long it will take to find parking. If any of these factors become relevant, the ETA is likely to be less accurate (by how much will vary). So, while the driver can rely on GPS to help plan the route and choose a departure time, the driver’s own expertise (in the form of experience with the route and with the area around the destination) will be relevant in deciding whether to accept the GPS’s suggested route, alter the route in transit, and plan extra time for parking [4]. The GPS provides input into the driver’s decisions, but it does not make those decisions for the driver. And drivers with more expertise will be better able to recognize when to consider factors beyond the GPS’s input.

By no means am I suggesting that expert human judgment is infallible. As I’ve written in the past, expertise has its limits. But expert judgment permits the consideration of potential contingent or situation-specific factors that may be relevant to a given decision situation. And those factors are often relevant in determining the acceptability of various decision options, the trade-offs that may accompany them, and the potential for unintended consequences—none of which are easily predicted by an algorithm.

Expertise can also guide the search expansion process laid out in Figure 1. When an AI produces a potentially valid output that requires further verification, experts are generally in a better position to know where to seek that verification. So human expertise isn’t just useful for intuitively evaluating or refining the AI’s output; it is also useful for guiding the search for further evidence or information to test, confirm, or elaborate on that output when needed.

Birhane and Raji (2023) argued that when large language models, like ChatGPT or Bard, make predictive mistakes, the consequences can be quite serious [5]. But the same can apply to any AI-driven tool. If human decision-makers lack the expertise needed to question the AI’s output in an informed way, whether that output is a string of code, an internal training document, or a home loan recommendation, faulty decisions become more likely. And some of those faulty decisions carry high-stakes consequences. Putting all our proverbial eggs in the AI basket and defaulting to the AI for such decisions is a recipe for serious errors. And while human expertise comes with its own limitations, it can provide some guardrails to reduce the likelihood of such errors.

References

Footnotes

[1] This stems from a long-standing myth, propagated in both science and practice, that analytical thinking is universally superior to intuitive decision-making.

[2] The idea is that knowledge that once required conscious, analytical thinking becomes accessible with less conscious effort as people gain experience applying it. Later experiences then allow easier access to past learning because those experiences share similarities with past situations.

[3] I don’t know about others, but a GPS-predicted 5-minute construction slowdown is often wildly underestimated.

[4] There are times when arrival time is important (e.g., doctor’s appointments, job interviews) and times when it is less of an issue.

[5] After all, their outputs are generated by predictive models and nothing more. Nor is this problem confined to recent LLMs; it has been documented for years.