
Belated Happy Birthday AlphaZero

Machine Intelligence and Cognitive Psychological Science

Posted February 18, 2019

Let’s Meet AlphaZero

AlphaZero is a machine learning program developed by DeepMind that acquired insight into creative chess play on its own, through deep learning and without any human guidance. It is now the strongest chess, Japanese chess (shogi), and Go player in the world, consistently beating the strongest existing programs, which themselves outclass the best human players. Why would I wish a computer program a happy birthday, that is, congratulate it on its birth? The short answer is that I recently learned that AlphaZero achieved insight during October 2017 and thereby, in my view, became truly intelligent. Hence my belated happy birthday wish.

My use of the term insight was prompted by the following New York Times reporting on 12/26/18: “Most unnerving was that AlphaZero seemed to express insight. It played like no computer ever has, intuitively and beautifully, with a romantic, attacking style. It played gambits and took risks”. The Google “define:insight” command returns the following primary definition: “the capacity to gain an accurate and deep intuitive understanding of a person or thing.” Synonyms for insight include: intuition, perception, awareness, discernment, understanding, comprehension, apprehension, appreciation, acumen, and astuteness. A secondary definition of insight is “a deep understanding of a person or thing.” These are qualities of a truly intelligent entity.

Matthew Sadler and Natasha Regan are both English chess masters. In their book Game Changer, published by New in Chess, they analyze the insights into chess that AlphaZero developed on its own without any human guidance. They report that AlphaZero rediscovered well-known openings and strategies while learning to play chess by itself, and that it also developed impressive new, creative long-term strategies because it was not constrained by conventional wisdom imparted by human programmers. Here we have chess masters admiring insights that AlphaZero achieved on its own. One might argue that AlphaZero was able to achieve new insights precisely because conventional human wisdom was withheld, thereby freeing it from human bias. The ability to achieve insight is a hallmark of true human intelligence. The “birth” of such a revolutionary and remarkable intelligence deserves respectful recognition. Hence, I wish AlphaZero a belated happy birthday!

Generalizability

Generalizability is a test of validity. Generalizability has long been a major problem for traditional rule-based artificial intelligence (AI) programs. Their achievements have been limited to very specific tasks. Rule-based programs are highly specialized and can only do what they were specifically programmed to do. They do not generalize to similar, related tasks because they cannot learn on their own; instead, they wait for humans to provide them with new rules.

By teaching itself chess, shogi, and Go with the same general-purpose learning algorithm, AlphaZero has demonstrated that it can discover new knowledge on its own through reinforcement learning. This ability to generalize by learning on its own is a remarkable achievement, and learning on its own is a hallmark of true intelligence. This ability arguably establishes the superiority of brain-based AI over rule-based AI.

Another DeepMind program, AlphaFold, also uses the brain-based deep learning neural network approach, in this case to tackle an extraordinarily complex problem that has so far eluded scientists: understanding how proteins fold. The three-dimensional structure into which a protein folds inside the body determines how it will bind to other molecules, including new medicines, so this knowledge is the key to understanding and predicting the effects that new medicines will have. AlphaFold is now making progress in understanding this process.

The remainder of this post contrasts mind-based psychological models with brain-based ones and then presents a few basic network principles that help us better understand how deep learning connectionist machines such as AlphaZero and AlphaFold work.

Brain-Based vs. Mind-Based Models

Mind-Based Models

Psychology began as a branch of natural philosophy where mind-based explanations of human behavior were derived from introspection. Traditional cognitive psychologists have continued this practice with their view that people learn and behave because the mind follows rules that govern symbol manipulation. Evidence that this theory is wrong can be obtained by asking experts about the rules that they follow when they work. Experts generally do not acknowledge or report that they follow rules of any sort as they work. They may have followed rules when they were novices, but they moved beyond rule-following as they became expert. But many cognitive psychologists continue to act as if people always follow rules when they think and behave. To act as if something is true when it is not may be professionally convenient, but doing so has not been very successful as we shall see next.

The rule-based symbol manipulation approach characterized the initial efforts to create artificial intelligence. For example, computers were once programmed with very many rules for playing chess, but they never did very well. Computers were also programmed with very many rules for identifying people from photographs or videos, but they did even less well, and they could not do so in real time. The limitations of the rule-based approach are manifest, but many cognitive psychologists continue to explain human behavior in terms of rules and rule-following because they have always done so and because it supports their computer metaphor. In that metaphor, the brain acts like computer hardware and the mind operates like computer software, with rules playing the role of the programs that govern how people think, feel, and behave.

These same cognitive psychologists admire the achievements of deep learning AI systems such as AlphaZero, but they do not trust them. They cannot understand how these systems think, because the systems do not generate symbols or form and follow rules as we normally understand them. Hence, AlphaZero cannot communicate with these cognitive psychologists in ways that they understand. The problem is that AlphaZero learns the way the brain does, not the way the mind is said to. What is required is a new way of understanding how AlphaZero thinks, one that is also relevant to understanding how the brain works. This new approach can also be used to understand cognitive psychology based on neural network models.

Brain-Based Models

Connectionist neural network models, also known as neural networks, deep learning, and machine intelligence, underlie and explain how AlphaZero works. These models take a brain-based approach to explaining cognitive psychology. They have been seriously studied since Rumelhart, McClelland, and the PDP Research Group published their seminal two-volume work, Parallel Distributed Processing, in 1986. In 2014, I published a brain-based connectionist neural network explanatory approach to psychology in my book Cognitive Neuroscience and Psychotherapy: Network Principles for a Unified Theory, which reflects developments over the decades since 1986.

Neural network models consist of three or more layers of interconnected processing nodes that share many functional properties with real neurons. For example, each artificial neuron receives inputs from many other artificial neurons, just as real neurons do. Each artificial neuron sums these inputs and generates an output if the sum exceeds a threshold, just as real neurons do.
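The summing-and-threshold behavior just described can be sketched in a few lines of Python. This is a minimal illustration assuming a threshold of zero; the function name `threshold_neuron` and the example weights are hypothetical, chosen only for demonstration. Each input is multiplied by the weight of its connection, a detail explained in the next paragraph.

```python
def threshold_neuron(inputs, weights, threshold=0.0):
    """Return 1 (active) if the weighted input sum exceeds the threshold, else 0."""
    # Each incoming signal is scaled by the weight of the connection it arrives on.
    total = sum(x * w for x, w in zip(inputs, weights))
    # The neuron "fires" only if the summed input is strictly above the threshold.
    return 1 if total > threshold else 0


# Hypothetical example: three sending neurons, the first and third active.
print(threshold_neuron([1, 0, 1], [0.5, 0.9, -0.2]))  # 0.5 - 0.2 = 0.3 > 0, prints 1
print(threshold_neuron([1, 1, 0], [-0.5, 0.2, 0.9]))  # -0.5 + 0.2 = -0.3, prints 0
```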

Artificial neurons are connected to each other by simulated synapses called connection weights. These weights are initially set to small random values. Learning and memory occur by gradually adjusting these weights over learning trials. The final result is a network whose processing nodes are interconnected with optimal weights for the task under consideration. Connection weights are so central to the functionality of connectionist neural network models that the term connectionist is often taken for granted and omitted. Connectionist neural network systems may act as if they are following rules, but they never formulate or follow rules as we normally understand them, and they certainly do not generate symbols. Additional details are provided in the next section.
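As a rough illustration of the starting point described above, the sketch below fills a table of connection weights with small random values. The uniform range of ±0.1 and the helper name `init_weights` are assumptions made for this example; real systems use a variety of initialization schemes.

```python
import random


def init_weights(n_senders, n_receivers, scale=0.1, seed=None):
    """Return an n_senders x n_receivers table of small random connection weights.

    weights[i][j] is the strength of the connection from sending neuron i
    to receiving neuron j.
    """
    rng = random.Random(seed)  # a fixed seed makes the "random" start reproducible
    # Values fall in [-scale, scale]: some connections start slightly excitatory
    # (positive), others slightly inhibitory (negative).
    return [[rng.uniform(-scale, scale) for _ in range(n_receivers)]
            for _ in range(n_senders)]


weights = init_weights(3, 3, seed=42)
for row in weights:
    print([round(w, 3) for w in row])
```

Learning then consists of repeatedly nudging these numbers, as described under experience-dependent plasticity below.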

Understanding Brain-Based Models

I find that the best way to understand the neural network systems used by AlphaZero and other deep learning artificial intelligences is to understand the principles that govern them. These network principles can also be understood as neural network properties. I now discuss four of these principles/properties. There are others, but these four are fundamental and should get you started. See Tryon (2012, 2014) for further information.

Principle/Property 1: Architecture

The neural architecture of real brains is important to their function. For example, the cerebellum has special circuitry that enables it to rapidly control our muscles so that we can walk, run, and play sports. Likewise, the architecture of artificial neural networks is important to how they function. For example, neural networks that have just two layers (an input layer connected directly to an output layer), called Perceptrons, cannot solve certain logical problems, such as exclusive-or (XOR): respond when one input or the other is active, but not both. Networks with three or more layers can solve all such logical problems. Hornik, Stinchcombe, and White (1989, 1990) proved mathematically that multilayer feedforward networks with enough hidden units can approximate virtually any input-output mapping to any desired degree of accuracy.
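The classic example of a logical problem that a two-layer Perceptron cannot solve is exclusive-or (XOR): output 1 when exactly one of two inputs is active. The Python sketch below is a hypothetical illustration, not taken from the cited papers. It first searches a grid of weights and thresholds for a two-layer network (two inputs wired straight to one threshold unit) that computes XOR and finds none. A grid search only illustrates the point; the general result follows from the fact that XOR is not linearly separable. It then hand-wires a three-layer network, with one hidden unit acting as OR and another as AND, that does compute XOR. All weights and thresholds are chosen for illustration.

```python
from itertools import product


def step(total, threshold=0.0):
    # Threshold unit: active (1) only if the summed input exceeds the threshold.
    return 1 if total > threshold else 0


# Truth table for exclusive-or: (input a, input b) -> desired output.
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def two_layer_solves_xor(w1, w2, threshold):
    # Inputs connect directly to a single output unit; there is no hidden layer.
    return all(step(a * w1 + b * w2, threshold) == target
               for (a, b), target in XOR.items())


# Try every weight and threshold from -2.0 to 2.0 in steps of 0.1.
grid = [i / 10 for i in range(-20, 21)]
found = any(two_layer_solves_xor(w1, w2, t)
            for w1, w2, t in product(grid, grid, grid))
print("two-layer solution found:", found)  # prints False


def three_layer_xor(a, b):
    h_or = step(a + b, threshold=0.5)   # hidden unit 1: on if at least one input is on
    h_and = step(a + b, threshold=1.5)  # hidden unit 2: on only if both inputs are on
    # Output: excited by the OR unit, inhibited by the AND unit.
    return step(h_or - h_and, threshold=0.5)


print([three_layer_xor(a, b) for a, b in XOR])  # prints [0, 1, 1, 0]
```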

Principle/Property 2: Network Cascade: Unconscious Processing

Activations generated by artificial neurons cascade across artificial neural networks in a manner described below that reflects how activations generated by real neurons cascade across real brain networks. Most brain processing occurs unconsciously. The famous iceberg analogy accurately reflects these events. The ninety percent of an iceberg that is under water represents, and is proportional to, unconscious brain processing. The ten percent of an iceberg that is above water represents, and is proportional to, conscious brain processing. See Cohen, Dunbar, and McClelland (1990) for further details.

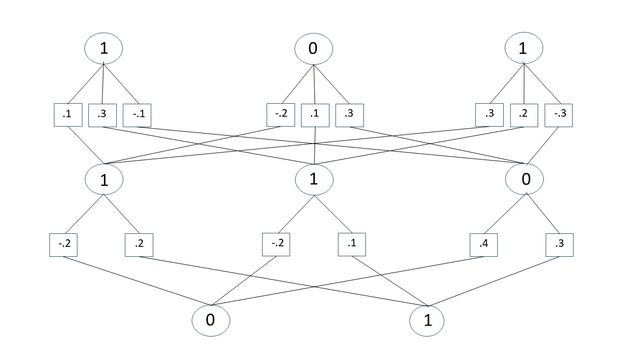

The figure above illustrates how the network cascade works. The network is deliberately simple so that it fits on the page. The top layer of three circles simulates three input neurons, which can be understood as sensory neurons. The number “1” inside a circle indicates that the simulated neuron is active; the number “0” indicates that it is inactive. Together, they define the three inputs to this system as 1, 0, 1.

The on/off status of the simulated neurons in the next two layers of circles is computed rather than assigned. The second row, made up of three sets of three boxes, simulates the synapses that connect the simulated neurons in the top (input) layer with the three simulated neurons in the third row. The left-hand set of three boxes in the second row represents the simulated synapses that connect the left-hand simulated neuron in the top row with all three simulated neurons in the third row. The middle set of three boxes connects the middle simulated neuron in the top row with all three simulated neurons in the third row, and the right-hand set connects the right-hand simulated neuron in the top row with all three simulated neurons in the third row. Positive entries simulate excitation; negative entries simulate inhibition. These values are called connection weights because they specify the strength of the connection between two simulated neurons. The current values can be thought of in one of two ways: either they are the first values assigned randomly at startup, or they reflect the state of the network at an arbitrary processing step.

The on (1) or off (0) states of the three simulated neurons in the third row are computed as follows. Each of these neurons has three inputs, one from each simulated neuron in the first row. Each input equals the state of the sending neuron (1 if active, 0 if inactive) times the connection weight. The inputs to the left-hand neuron in the third row sum to 1(.1) + 0(-.2) + 1(.3) = .4. This sum is compared to a threshold, which in this case is zero but could be some other value. If the sum exceeds the threshold (here, if it is positive, as it is in this case), the receiving simulated neuron becomes active, or remains active if it was previously active, as indicated by the 1 inside the circle representing the left-hand simulated neuron in the third row. Because zero times anything is zero, the summed input equals the sum of the connection weights from the active sending neurons.

The inputs to the middle simulated neuron in the third row sum to 1(.3) + 0(.1) + 1(.2) = .5, which, being positive, activates this simulated neuron, as indicated by the number 1 in its circle. The inputs to the right-hand simulated neuron in the third row sum to 1(-.1) + 0(.3) + 1(-.3) = -.4, which, being negative, deactivates this simulated neuron (turning it off if it was previously on), as indicated by the number 0 in its circle.

The states of the two computed simulated neurons in the fifth row are controlled by the computed states of the three neurons in the third row and by the simulated synapses (connection weights) in the boxes in the fourth row. The left-hand simulated neuron in the fifth row becomes inactive because the sum of its inputs, 1(-.2) + 1(-.2) + 0(.4) = -.4, is negative and therefore below the threshold of zero. The right-hand simulated neuron in the fifth row becomes active because the sum of its inputs, 1(.2) + 1(.1) + 0(.3) = .3, is positive and therefore exceeds the threshold of zero.

Activation of simulated neurons in the top, input, layer is said to cascade across the simulated synapses to the remaining simulated neurons. This process is automatic and deterministic.
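The cascade just described can be reproduced in a short Python sketch. Because the figure itself is not available as text, the weight tables below are reconstructed from the arithmetic given in the preceding paragraphs (for example, the left-hand input neuron sends weights of .1, .3, and -.1 to the left, middle, and right neurons of the third row); the row-and-column arrangement and the function name `layer` are assumptions of this sketch. Running it twice on the same inputs gives the same answer, which is what "automatic and deterministic" means here.

```python
def layer(activations, weights, threshold=0.0):
    """Compute the on/off state of each receiving neuron.

    weights[i][j] is the connection weight from sending neuron i
    to receiving neuron j.
    """
    states = []
    for j in range(len(weights[0])):
        # Sum of (sender state x connection weight) over all senders.
        total = sum(a * weights[i][j] for i, a in enumerate(activations))
        states.append(1 if total > threshold else 0)
    return states


# Second row: weights from each input neuron (rows) to the third-row neurons (columns).
W1 = [[0.1, 0.3, -0.1],    # from the left input neuron
      [-0.2, 0.1, 0.3],    # from the middle input neuron
      [0.3, 0.2, -0.3]]    # from the right input neuron

# Fourth row: weights from the third-row neurons to the two fifth-row neurons.
W2 = [[-0.2, 0.2],         # from the left third-row neuron
      [-0.2, 0.1],         # from the middle third-row neuron
      [0.4, 0.3]]          # from the right third-row neuron

inputs = [1, 0, 1]
hidden = layer(inputs, W1)  # sums .4, .5, -.4
output = layer(hidden, W2)  # sums -.4, .3
print(hidden, output)       # prints [1, 1, 0] [0, 1]
```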

Principle/Property 3: Experience-Dependent Plasticity

The network will always compute the same result if everything is left as is; no development will occur. The network will compute a different result if the input values are changed, but it will not learn to do better as long as the connection weights remain the same. Learning requires that the connection weights be changed. The amount of change is determined by equations that simulate the biological mechanisms of experience-dependent synaptic plasticity, which modify real synapses among real neurons when we learn and form memories.

Changing the connection weights means that the network computes a new response to the old stimulus input values. The connection weights are changed in accordance with gradient descent methods, which move each weight a small step in the direction that most reduces the network's error and so, with a suitably small step size, produce incrementally better network responses.
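To make the idea of gradient descent concrete, the sketch below trains a single artificial neuron. It makes two assumptions not found in the text: the hard on/off threshold is replaced by a smooth S-shaped (sigmoid) function, because gradient descent needs a response that changes gradually as the weights change, and a bias term plays the role of an adjustable threshold. The two training patterns, the learning rate of 0.5, and the 200 passes are arbitrary illustrative choices; this is not AlphaZero's actual training procedure.

```python
import math
import random


def sigmoid(z):
    """Smooth, differentiable stand-in for the on/off threshold."""
    return 1.0 / (1.0 + math.exp(-z))


def respond(x, w, b):
    """Output of the neuron for input pattern x."""
    return sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)


def train(examples, n_inputs, lr=0.5, epochs=200, seed=0):
    """Full-batch gradient descent on squared error for one sigmoid neuron."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]  # small random start
    b = 0.0          # bias: acts like an adjustable threshold
    history = []     # total error before each weight update
    for _ in range(epochs):
        total_error = 0.0
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        for x, target in examples:
            y = respond(x, w, b)
            err = y - target
            total_error += 0.5 * err ** 2
            # Chain rule: d(0.5 * err^2) / d(net input) = err * y * (1 - y).
            delta = err * y * (1.0 - y)
            for i, xi in enumerate(x):
                grad_w[i] += delta * xi
            grad_b += delta
        history.append(total_error)
        # Move every weight a small step downhill on the error surface.
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b, history


# Hypothetical task: respond to pattern 1, 0, 1 but not to pattern 0, 1, 0.
examples = [([1, 0, 1], 1.0), ([0, 1, 0], 0.0)]
w, b, history = train(examples, n_inputs=3)
print(f"error before learning: {history[0]:.3f}, after: {history[-1]:.3f}")
print(round(respond([1, 0, 1], w, b), 2), round(respond([0, 1, 0], w, b), 2))
```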

I wish to make three take-home points here. First, learning and memory are fundamental to all psychology, because psychology would not exist if we could not learn and form memories through synaptic modification. Second, all aspects of our psychology are contained in what Seung (2012) calls our connectome: the complete collection of our synapses. Third, experience-dependent plasticity mechanisms enable our experiences to physically change our brains and therefore alter the ways that we think, feel, and behave. There is nothing mental or magical about this process.

Principle/Property 4: Reinforcement Learning

Behavioral psychologists such as B. F. Skinner explained that behavior is strengthened (made more probable) through positive or negative reinforcement, that is, by the consequences that follow it. Skinner could not explain the physical processes of synaptic change that enabled behavior to change, so he simply acknowledged change by claiming that the conditioned rat survived as a changed rat. He realized that experience changes the brain but could not be more informative, because the biology of learning and memory was in its infancy at that time.

Reinforcement learning is now much better understood. It is an incremental process that makes no sense from a mind-based cognitive perspective, in which learning and memory involve following rules to manipulate symbols. Symbols are not generated a little bit at a time, nor does it make sense that symbols, or their meanings, might change a little bit at a time. Therefore, it seemed to cognitive psychologists that reinforcement learning could not explain how cognition works.

But reinforcement learning makes a great deal of sense from the brain-based connectionist neural network perspective outlined above, in which connection weights among neurons begin at random levels and are gradually adjusted through learning, converging toward optimal values through an incremental process of change known as gradient descent.

AlphaZero developed its superior cognitive skills through the incremental process of reinforcement learning. This achievement shows that traditional cognitive psychologists were wrong to discount reinforcement learning as a valid explanation for the development of cognitive processes.

Reinforcement learning is a form of evolution because it depends critically on variation and selection: successes and failures jointly shape future behavior. Skinner consistently maintained that animal and human behavior evolves ontogenetically (over one’s lifetime) as well as phylogenetically (over many generations). Reinforcement learning is an effective way for connectionist AI systems to learn from experience on their own, and it can solve problems that are too complex to program solutions for. For example, it is one of the methods being used to teach cars to drive themselves.
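The variation-and-selection loop described above can be illustrated with a gradient-bandit learner, a standard textbook reinforcement-learning algorithm chosen here only because it is small. AlphaZero itself combines a deep neural network, self-play, and tree search, which is far more elaborate. In this hypothetical setup, a learner repeatedly chooses among three actions whose hidden success rates (10%, 50%, and 90%) are invented for the example. Actions are sampled at random in proportion to learned preferences (variation), and outcomes better than the running average reward raise the chosen action's preference while worse ones lower it (selection), so successful behavior becomes more probable over time.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into choice probabilities that sum to 1."""
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]  # subtract the max for numerical safety
    total = sum(exps)
    return [e / total for e in exps]


def gradient_bandit(success_rates, steps=2000, alpha=0.1, seed=1):
    rng = random.Random(seed)
    prefs = [0.0] * len(success_rates)  # no initial preference for any action
    baseline = 0.0                      # average of the rewards received so far
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        # Variation: pick an action at random, weighted by current preferences.
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        # Consequence: reward 1 with the action's hidden success rate, else 0.
        reward = 1.0 if rng.random() < success_rates[action] else 0.0
        # Selection: a better-than-average outcome raises the chosen action's
        # preference and lowers the others; a worse one does the reverse.
        advantage = reward - baseline
        for a in range(len(prefs)):
            chosen = 1.0 if a == action else 0.0
            prefs[a] += alpha * advantage * (chosen - probs[a])
        baseline += (reward - baseline) / t  # update the average after using it
    return softmax(prefs)


final_probs = gradient_bandit([0.1, 0.5, 0.9])
print([round(p, 2) for p in final_probs])
```

The step size `alpha` controls how strongly each consequence reshapes future choices; smaller values learn more slowly but are less swayed by lucky or unlucky single outcomes.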

Conclusions

AlphaZero is a brain-based super artificial intelligence that is capable of insight, thus making it much more human-like than traditional AI machines. It can generalize its learning in ways that traditional rule-based artificial intelligences cannot. It rapidly adjusts its simulated synapses through reinforcement learning. It does not generate symbols or formulate and follow rules as ordinarily understood. Hence, neural network intelligences such as AlphaZero and AlphaFold cannot help traditional cognitive psychologists to understand how they work. A neural network orientation is required to do that. The four neural network principles/properties discussed above can help us to better understand artificial intelligences such as AlphaZero.

The success of AlphaZero tells us at least two things. First, it provides empirical proof that reinforcement learning is sufficient to explain the acquisition of complex cognitive skills including the ability to achieve insight. Second, it supports the validity of brain-based models over mind-based models. This constitutes a major paradigm shift in cognitive psychology.

Happy Birthday AlphaZero!

References

Cohen, J. D., Dunbar, K., & McClelland, J. L. (1990). On the control of automatic processes: A parallel distributed processing account of the Stroop effect. Psychological Review, 97, 332-361. doi: 10.1037//0033-295X.97.3.332

Hornik, K., Stinchcombe, M., & White, H. (1989). Multilayer feed-forward networks are universal approximators. Neural Networks, 2, 359-366. doi: 10.1016/0893-6080(89)90020-8

Hornik, K., Stinchcombe, M., & White, H. (1990). Universal approximation of an unknown mapping and its derivatives using multilayer feedforward networks. Neural Networks, 3, 551-560. doi: 10.1016/0893-6080(90)90005-6

McClelland, J. L., Rumelhart, D. E., & the PDP Research Group (1986). Parallel distributed processing: Explorations in the microstructure of cognition, Vol. 2: Psychological and biological models. Cambridge, MA: MIT Press.

Rumelhart, D. E., McClelland, J. L., & the PDP Research Group (1986). Parallel distributed processing: Explorations in the microstructure of cognition, Vol. 1: Foundations. Cambridge, MA: MIT Press.

Seung, S. (2012). Connectome: How the brain’s wiring makes us who we are. Boston: Houghton Mifflin Harcourt.

Tryon, W. W. (2012). A connectionist network approach to psychological science: Core and corollary principles. Review of General Psychology, 16, 305-317. doi: 10.1037/a0027135

Tryon, W. W. (2014). Cognitive neuroscience and psychotherapy: Network principles for a unified theory. New York: Academic Press.