
Dogs, MRIs, and Emotions

How dog brains give rise to dog emotions and similarities to humans

Posted October 26, 2013

A few weeks ago, the New York Times published my opinion piece titled “Dogs are People, Too,” which was based on my new book, How Dogs Love Us. The article seems to have polarized many people in the dog and animal worlds. So much so that most of the usual booksellers will not even stock my book. In fact, I will give a signed copy to the first person who sends me a photo of the book stocked in a brick-and-mortar store.

Two points in my op-ed in particular seem to have generated the most debate: 1) can we read emotions from MRIs? and 2) is evidence of canine (or animal) emotion sufficient for personhood? For several thoughtful critiques of, and rebuttals to, these points, see the blogs by Hal Herzog (and the other side), Jason Goldman, Marc Bekoff, Patricia McConnell, and Jeff Skopek.

Today, I will address the first question of MRIs and emotions.

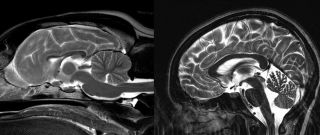

Dog brain and human brain

Reverse Inference

Although neuro-backlash is much in vogue these days, it is worth examining where much of this criticism came from. The story begins with Russ Poldrack’s 2005 paper on “reverse inference.” Russ wanted to know how certain we could be in saying that a blob of activity in some part of the brain meant that a particular cognitive process was going on. Using a database of neuroimaging papers, Russ searched the papers for keywords like “memory,” “reward,” and “language.” He then cross-referenced these keywords against the brain coordinates reported in each paper. As a test case, he chose language. Based on 150 years of research, neurologists take it for granted that an area in the left frontal lobe, called Broca’s area, is responsible for the production of language. This makes sense because a stroke to this region severely impairs a person’s ability to speak.
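The cross-referencing step can be pictured as filling in a 2×2 table for each process and region: how many studies mention the process, how many report activity in the region, and how many do both. The Python sketch below is a simplified illustration, not Poldrack’s actual procedure. The `Study` records and the `contingency` function are hypothetical, and the sketch assumes each study’s reported coordinates have already been assigned to named regions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    """A hypothetical database entry: task keywords plus regions with reported activity.

    Assumes reported coordinates were already mapped to named regions.
    """
    keywords: frozenset
    regions: frozenset


def contingency(studies, process, region):
    """Count studies in each cell of a 2x2 table keyed by
    (process mentioned?, region active?)."""
    table = {(p, r): 0 for p in (True, False) for r in (True, False)}
    for study in studies:
        table[(process in study.keywords, region in study.regions)] += 1
    return table


# Toy, made-up records for illustration only.
studies = [
    Study(frozenset({"language"}), frozenset({"broca"})),
    Study(frozenset({"memory"}), frozenset({"broca", "hippocampus"})),
    Study(frozenset({"language", "memory"}), frozenset({"broca"})),
    Study(frozenset({"reward"}), frozenset({"nucleus_accumbens"})),
]
print(contingency(studies, "language", "broca"))
# {(True, True): 2, (True, False): 0, (False, True): 1, (False, False): 1}
```

The (False, True) cell is what troubles reverse inference: the region also shows up in studies that never mention the process.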

Surprisingly, Russ found only a weak correlation between fMRI activity in Broca’s area and the probability that the experiment involved language. From this, he concluded that we must be very careful in inferring a particular cognitive process from brain activity alone. In retrospect, the correlation was weak because Broca’s area is involved in more than language, so activity there is not specific to it.
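Reverse inference is an application of Bayes’ rule: the probability that a process such as language was engaged, given activity in a region, depends not only on how often the region activates during that process but also on how often it activates without it. In the Python sketch below, the function name and all probabilities are made-up illustrations, and the neutral prior of 0.5 is an assumption.

```python
def reverse_inference(p_act_given_proc, p_act_given_other, prior=0.5):
    """Posterior P(process | activation) by Bayes' rule:

        P(A|P) P(P) / (P(A|P) P(P) + P(A|not P) (1 - P(P)))

    p_act_given_proc:  P(activation | process engaged)
    p_act_given_other: P(activation | process not engaged)
    prior:             P(process engaged) before looking at the brain
    """
    hit = p_act_given_proc * prior
    false_alarm = p_act_given_other * (1 - prior)
    return hit / (hit + false_alarm)


# Hypothetical numbers: both regions activate in 70% of studies that involve
# the process, but they differ in how often they activate otherwise.
unselective = reverse_inference(0.70, 0.40)  # active in many other tasks too
selective = reverse_inference(0.70, 0.05)    # rarely active otherwise
print(round(unselective, 2), round(selective, 2))  # 0.64 0.93
```

A region that responds to many kinds of tasks yields a weak inference even when it reliably activates during the process of interest.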

But what about other brain regions and other cognitive processes? At the time, I asked Russ this question, but he didn’t know because he hadn’t searched the database for other processes. Being a reward guy, I was immediately interested in the caudate and the possibility that caudate activity could be used as a readout of positive affective processes. So, in a paper with my friend Dan Ariely, we repeated Russ’s analysis on a subpart of the caudate (called the nucleus accumbens) and found that activity in this region was highly predictive of a reward process going on. In fact, this has become a foundational principle of most of neuroeconomics and neuromarketing. It is also why activity in this part of the brain can be used to predict preferences for food, music, and beauty, and even purchasing decisions.

Emotions in the Brain

So what about emotions? There is still a lot of debate about what an emotion is. Humans have a rich language for emotion, but even if you take something as basic and universal as love, you quickly realize how many nuances that one word contains. There are so many different types of love that the word itself is inadequate.

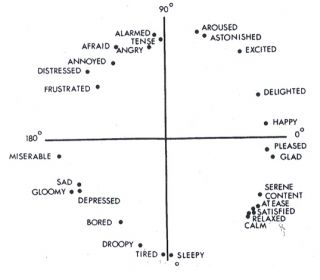

Circumplex model of emotions

To proceed scientifically, we must set aside such subtleties. It helps to break emotion down into two fundamental components: valence and arousal. Valence is simply goodness or badness, while arousal describes the level of excitement, ranging from calm to maximum excitement. Many human emotions can be plotted on a graph with valence on the horizontal axis and arousal on the vertical axis. Because the emotions fall roughly on a circle, this is called the circumplex model of emotion. Positive emotions are plotted to the right and negative ones to the left; high-arousal emotions are at the top and low-arousal ones at the bottom.
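Treating valence and arousal as x and y coordinates makes the circumplex easy to compute with: each emotion gets an angle around the circle and a distance from the neutral center, and the signs of the two coordinates give its quadrant. The Python sketch below assumes both dimensions are scaled to [-1, 1]. The function name and the example coordinates are illustrative, not taken from any published dataset.

```python
import math


def circumplex_position(valence, arousal):
    """Locate an emotion in the valence-arousal plane.

    Assumes both axes are scaled to [-1, 1] with neutral at the origin:
    valence runs from negative (left) to positive (right), arousal from
    calm (bottom) to excited (top). Returns the angle in degrees measured
    counterclockwise from the positive-valence axis, the distance from
    neutral, and the quadrant.
    """
    angle = math.degrees(math.atan2(arousal, valence)) % 360
    intensity = math.hypot(valence, arousal)
    vertical = "high arousal" if arousal >= 0 else "low arousal"
    horizontal = "positive valence" if valence >= 0 else "negative valence"
    return angle, intensity, f"{vertical}, {horizontal}"


# Made-up coordinates, not measured values.
print(circumplex_position(0.8, 0.6))    # an excited, pleasant state
print(circumplex_position(-0.7, 0.7))   # an agitated, unpleasant state
```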

Many psychologists have argued that the two-factor model is too simplistic. However, it provides an excellent starting point for studying the parts of the brain that give rise to the different emotions. As it turns out, the caudate nucleus is closely associated with positive valence, while the limbic system (e.g., the amygdala) is associated with arousal. By relating activity in these brain systems to the emotions that human subjects report experiencing, we can build an emotional brain map. Because dogs have caudates and limbic systems that look almost the same as ours, such a map could be applied to dog brains to help determine what a dog is feeling.
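One simple way to picture such a map, assuming each relationship is roughly linear, is to calibrate two regressions on human data (caudate signal against rated valence, amygdala signal against rated arousal) and then read new brain signals through them. The Python sketch below is a toy illustration of that idea, not the Dog Project’s analysis. `fit_line`, `build_emotion_map`, and the data are hypothetical, and applying a human calibration to a dog assumes the signals are comparable across species, which would itself need to be tested.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def build_emotion_map(caudate, amygdala, valence, arousal):
    """Calibrate caudate -> valence and amygdala -> arousal on rated trials,
    and return a function that maps new signals to (valence, arousal)."""
    v_slope, v_int = fit_line(caudate, valence)
    a_slope, a_int = fit_line(amygdala, arousal)

    def predict(caudate_signal, amygdala_signal):
        return (v_slope * caudate_signal + v_int,
                a_slope * amygdala_signal + a_int)

    return predict


# Made-up calibration data (signal units and ratings are arbitrary).
predict = build_emotion_map(
    caudate=[0.0, 1.0, 2.0, 3.0],
    amygdala=[0.0, 1.0, 2.0, 3.0],
    valence=[-1.0, -0.5, 0.0, 0.5],
    arousal=[0.0, 0.2, 0.4, 0.6],
)
print(predict(4.0, 4.0))  # about (1.0, 0.8)
```

A straight line is the simplest possible calibration; real signal-to-emotion relationships may well be nonlinear or involve many regions at once.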

In the upper left portion of the circumplex are the emotions with high arousal and negative valence. The usual behavioral manifestation of these emotions is avoidance of, or retreat from, whatever caused them. However, the close proximity of emotions like fear, anger, and frustration in the circumplex makes that quadrant difficult to map in the brain: despite having similar levels of valence and arousal, those emotions feel quite different from one another. Although much is known about the brain’s fear system, almost nothing is known about rage or frustration. The upper left quadrant will remain uncharted territory in the Dog Project because of the ethical problems with inducing those types of emotions in dogs.

In contrast, the upper right quadrant of the circumplex model is well understood and seems like an excellent place to begin mapping the dog brain. These are the emotions that are maximally enjoyable: very good and very exciting. These positive emotions are also associated with a specific behavior seen in all animals. If something is good and exciting, every animal (dog, rat, or human) will approach it. Jaak Panksepp calls this the seeking system. In the brain, we know that approach behavior, as well as the corresponding positive emotions, is associated with activity in a subpart of the caudate called the nucleus accumbens. In humans, when we observe activation in this region, we can infer that the person is likely experiencing a positive emotion and wants whatever is making them excited (see above for the reverse inference aspect).

Is That All?

Tigger, superstar of MRI-dogs.

Surely there is more to emotions than the caudate nucleus. When it comes to human emotions, however, we also have to distinguish between the experience of emotion and the appraisal of emotion. We all know what it is like to experience emotions such as fear, joy, and anger. But in an fMRI experiment, subjects must do more than experience emotion. They must also report what they are feeling. (Remember, emotions are internal and can only be accessed through verbal report or behavior.) And this is where the neuroimaging literature becomes muddied. When we study emotion in the scanner, are we studying the experience of emotion or the individual’s assessment of what they are feeling? Typically, the conscious appraisal of something, be it an emotional or a cognitive state, requires a fair bit of hardware in the brain. Thinking about thinking brings the prefrontal cortex online, along with sensory cortex if you’re thinking about sensations and language regions if you’re subvocalizing. The resulting activity is messy to interpret. Because a dog probably doesn’t engage in much appraisal, his brain activity may be easier to interpret than a human’s.

As the field of neuroimaging moves away from “blobology,” increasingly sophisticated algorithms are proving able to classify mental states from fMRI data. Jack Gallant, at UC Berkeley, has been remarkably successful in pushing brain decoding forward. In fact, I wrote about his work in this blog several years ago. Jack’s work has focused on decoding visual scenes from brain activity, but I got a firsthand taste of this approach’s potential when I volunteered to help a colleague at Emory.

In 2006, Stephen LaConte, who is now at Virginia Tech, was developing an algorithm to classify brain states in real time. His goal was to develop an MRI-based brain-machine interface. By streaming fMRI data to a computer, he wanted to see whether the data were of high enough fidelity to control a machine (like a robot). As a first test, Stephen wanted to see if a person could move a cursor across a computer screen simply by thinking about it. I volunteered to be a guinea pig for his experiment.
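The post does not describe Stephen’s classifier, so the Python sketch below swaps in a deliberately simple one, a nearest-centroid rule, to show the general shape of real-time brain-state control: train on labeled example volumes, then classify each new volume as it streams in and move the cursor accordingly. Each “volume” here is a short, made-up list of voxel values, and all function names are hypothetical.

```python
import math


def centroid(volumes):
    """Mean of several volumes, voxel by voxel."""
    n = len(volumes)
    return [sum(voxel) / n for voxel in zip(*volumes)]


def train(examples):
    """examples maps a label ("left"/"right") to a list of training volumes."""
    return {label: centroid(vols) for label, vols in examples.items()}


def classify(model, volume):
    """Pick the label whose centroid is nearest (Euclidean) to this volume."""
    return min(model, key=lambda label: math.dist(model[label], volume))


def run_cursor(model, stream, start=0, step=1):
    """Classify volumes one at a time as they arrive and move the cursor:
    "left" moves it by -step, any other label moves it by +step."""
    position = start
    for volume in stream:
        label = classify(model, volume)
        position += -step if label == "left" else step
        yield label, position


# Made-up two-voxel "volumes"; e.g. happy thoughts trained as "left",
# sad thoughts as "right".
model = train({
    "left": [[1.0, 0.0], [0.8, 0.2]],
    "right": [[0.0, 1.0], [0.2, 0.8]],
})
for label, position in run_cursor(model, [[1.0, 0.1], [0.1, 1.0], [0.9, 0.0]]):
    print(label, position)
# left -1
# right 0
# left -1
```

Real volumes would be preprocessed and far larger, and a more robust classifier would likely be used; the train-then-stream loop is the point of the sketch.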

Now, you might think that the best way to move a cursor is to visualize the cursor moving. In fact, that is what most of his subjects did, and the technique was fairly effective, with the algorithm working about 75-80% of the time. But as I lay in the MRI, waiting for the sequence to begin, I realized that Stephen’s algorithm didn’t care what I was thinking about, only that my brain states for “left” and “right” were distinguishable. So I decided to think happy thoughts to move the cursor left and sad thoughts to move it right. And it worked (83% accuracy). That convinced me that although emotional states may have complex representations in the brain, they are distinct enough to be measured in real time with fMRI.
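A two-way left/right decoder is right half the time by pure guessing, so an accuracy figure means most when compared against that 50% baseline. The Python sketch below computes accuracy from intended and decoded labels and an exact one-sided binomial p-value against chance. The trial counts are hypothetical, since the number of trials behind the 83% figure is not given here.

```python
from math import comb


def accuracy(intended, decoded):
    """Fraction of trials where the decoded direction matched the intended one."""
    hits = sum(i == d for i, d in zip(intended, decoded))
    return hits / len(intended), hits


def binomial_p_value(hits, trials, chance=0.5):
    """One-sided exact binomial test: probability of at least `hits`
    correct out of `trials` if every trial were a guess at `chance`."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(hits, trials + 1)
    )


# Hypothetical session: 25 correct out of 30 trials, about 83%.
intended = ["left"] * 15 + ["right"] * 15
decoded = ["left"] * 13 + ["right"] * 2 + ["right"] * 12 + ["left"] * 3
acc, hits = accuracy(intended, decoded)
print(f"accuracy={acc:.2f}, p={binomial_p_value(hits, len(intended)):.5f}")
# accuracy=0.83, p=0.00016
```

With only a handful of trials, the same accuracy could easily arise by chance, which is why the trial count matters as much as the percentage.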

Animal Emotions

Even if we can crudely decode emotions in the human brain, does that mean we can generalize to dog brains? It depends on the types of emotions we’re talking about. You don’t need an MRI to know that dogs (and other animals) experience emotions. Charles Darwin said as much 150 years ago. Because evolution is continuous, human emotions must have evolved from processes shared with other animals. Something as basic as the desire to approach things that are good for one’s well-being, or to avoid things that are dangerous, is necessary for all animal life. Darwin called these animal processes emotions, and so would I.

Patricia and Kady get ready for an MRI session

MRIs don’t prove that animals have emotions – we already know they do. But MRIs can show us how and where in the brain these emotions occur. And the closer we look, the more we see in common with our own brains.

For more information, see my book, How Dogs Love Us: A Neuroscientist and His Adopted Dog Decode the Canine Brain.