Boredom

Can ChatGPT Get Bored?

We asked it to find out, and the answers were intriguing.

Posted March 16, 2023 Reviewed by Vanessa Lancaster

Key points

- ChatGPT has a reasonable grasp of what boredom is but does not experience it.

- When considering potential ethical concerns of AI boredom, ChatGPT has some important insights.

- Whether we should program AI to be capable of boredom depends on what use case we can imagine for "intelligent" boredom.

There’s been a great deal of attention in the past few months surrounding the possibility that ChatGPT, the artificial intelligence (AI) language model developed by OpenAI, could be conscious. While the raging debate around this suggests that what looks like consciousness is really something else, we thought it would be interesting to ask ChatGPT about boredom.

Why is boredom relevant here? It helps to consider the distinction between robots and AI. For robots, we want machines or programs that can take over laborious and monotonous human tasks – think assembly line work. Any circumstance in which the task is highly repetitive, the demands on attention are steep, and the likelihood of error is high is ripe for robotic control. On the other hand, AI should not merely complete menial tasks effectively but should seek novel solutions. An AI that experiences boredom might avoid squandering its resources and be curiously drawn to new problems while continuing to grow.

Boredom is relevant in two important ways. First, functional accounts of boredom cast it as a call to action, to explore one’s environs for something either novel or more meaningful to engage in than the currently available options. Should we want an AI program to be capable of flexible learning, boredom could prove very useful.

Second, boredom may solve a thorny problem in models of brain function that cast the organ as a predictive learning machine. In these predictive coding accounts, the brain uses mental models to predict the outcomes of intended actions. Incoming information is compared against those predictions, generating prediction errors that are used to fine-tune the models.

But if the brain truly operates on the principle of minimizing prediction error, the ideal circumstance would be to find a dark corner of a room and stay there – a maximally predictable situation. While there are several counterarguments to the dark room problem, boredom provides at least one potential solution. No one could withstand the crushing boredom of a dark room for long; it pushes us instead toward exploratory behaviors.
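To make the dark room argument concrete, here is a minimal sketch of a toy agent that chooses at each step between staying in a dark room and exploring. Everything in it is an assumption made for illustration rather than a model from the predictive coding literature: the `simulate` function, the `boredom_weight` parameter, the starting error estimates, and the rule that boredom builds up while the chosen option is predictable.

```python
import random


def simulate(boredom_weight, steps=200, seed=0):
    """Toy agent choosing each step between 'dark_room' and 'explore'.

    Hypothetical model: the agent tracks the prediction error it expects
    from each option and picks the one with the lowest
    cost = expected_error + boredom_weight * boredom.
    """
    rng = random.Random(seed)
    expected_error = {"dark_room": 0.0, "explore": 1.0}  # assumed starting estimates
    boredom = {"dark_room": 0.0, "explore": 0.0}
    visits = {"dark_room": 0, "explore": 0}

    for _ in range(steps):
        cost = {
            option: expected_error[option] + boredom_weight * boredom[option]
            for option in expected_error
        }
        choice = min(cost, key=cost.get)
        visits[choice] += 1

        # Dark room: nothing ever changes, so the prediction error is zero.
        # Exploring: observations are noisy, so some error always remains.
        error = 0.0 if choice == "dark_room" else abs(rng.gauss(0.0, 1.0))
        expected_error[choice] += 0.1 * (error - expected_error[choice])

        # Boredom grows while the chosen option is predictable
        # and fades for the option the agent is not currently in.
        for option in boredom:
            if option == choice:
                boredom[option] += 1.0 - min(error, 1.0)
            else:
                boredom[option] *= 0.5

    return visits


if __name__ == "__main__":
    print("no boredom:  ", simulate(boredom_weight=0.0))
    print("with boredom:", simulate(boredom_weight=0.5))
```

With `boredom_weight` set to zero, the agent never leaves the dark room, because nothing else can beat a prediction error of zero. With any positive weight, the accumulating boredom eventually makes the dark room more costly than exploring, so the agent ventures out at least some of the time.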

So, we decided to ask ChatGPT a few questions about boredom, starting with the obvious:

What is boredom?

We might quibble with aspects of this answer – perhaps highlighting the important role of meaning and emphasizing that boredom is not merely another symptom of depression and anxiety. But in general, it’s not a bad response. It captures the nature of being disengaged from the environment or task at hand and includes one of the more common descriptors people use when bored – feelings of restlessness. It also ties boredom to the broad swathe of negative outcomes (higher depression and anxiety, increased risk-taking) associated with the trait of being boredom prone. The fact that it does not distinguish between the state itself and the individual propensity to feel it often and intensely (i.e., trait boredom proneness) is probably trivial for a broad definition intended for lay use.

Our next warm-up question was intended to see whether ChatGPT would implicitly associate boredom with its own existence, however that might be construed (although we should note, the ‘you’ in this question could easily be read as the ‘royal’ you).

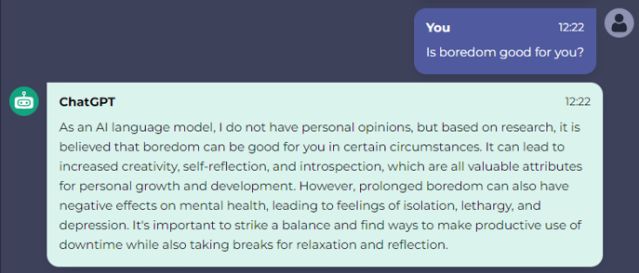

Is boredom good for you?

Here we see evidence of the more recent programming of ChatGPT to make it less contentious – it cannot have ‘personal opinions’! But it also highlights the potentially leading nature of the question we gave it, as the program picks up on the oft-used trope that “boredom makes you creative.”

The evidence in support of this is quite thin on the ground. In recent work from our lab, we find no evidence that either state boredom or trait boredom proneness is positively associated with a variety of measures of creativity. Nevertheless, some, perhaps trite, suggestions for self-improvement in response to boredom are not far off the mark. Self-reflection on one’s own goals or on the particular circumstance that led to boredom itself can be positive ways to reframe the situation to craft better responses to being bored.

Next, we tried to be more specific and asked ChatGPT:

Do you get bored?

Clearly, ChatGPT has now been programmed to acknowledge its limitations, in this instance the incapacity to experience emotions (although we should point out that we see boredom as a cognitive-affective state, perhaps best captured by the notion that boredom represents a "feeling of thinking"). The follow-up is more suggestive of the distinction we made at the outset between robots and AI.

ChatGPT brags about its ability to “keep processing information for long periods without getting tired or restless.” If we did decide that it would be acceptable to program an AI to be capable of being bored, it might be in the service of exploration, not relentless automation.

Finally, we moved on to what may be the most interesting of the questions we set for ChatGPT.

Can you anticipate any problem with programming AI to experience boredom?

The first three answers here might be considered practical obstacles that could be overcome. Programming emotions in at least a functional sense – what is emotion x for? – is plausible. Limited data is simply about farming and collecting that data, something we have proved we can do for a wide range of things from genomes to brain scans. And there are unsupervised learning algorithms capable of capturing themes from large volumes of free text. One can imagine using these to construct a kind of consistent and reliable description of the ‘content’ of boredom to address the subjectivity concern.

That third problem ChatGPT raises also highlights internal contradictions in the program. To our first question, "what is boredom?", it happily gave a concise answer with no hint of controversy or lack of confidence. But here it hides behind the seemingly impossible challenge of finding a universally accepted definition. Perhaps there needs to be another challenge here for programming AI to be bored (or experience any other emotion) – the challenge of self-reflection!

But it's the final two points that ChatGPT hits upon that we find most fascinating. First, would it be ethical to instill an AI program with the capacity for boredom? While it may make the program more human-like – capable of exploratory drives and independent learning, with the propensity for more error-prone performance – would that be desirable?

ChatGPT recognizes the potential for unethical behaviours and, perhaps unsurprisingly, lands on hacking or stealing personal information (something it considers to be in its wheelhouse?) as a prime example. And finally, there is the recognition of the law of unintended consequences. We don’t know how the deep learning structures that give rise to AI actually work.

Without knowing this, there is the very real potential that the program develops ways of processing information that are counter to human needs and aspirations. It could equally stumble upon solutions to complex problems that we have struggled with for decades or longer, but the concern is that we can’t know in which direction an AI’s processing behaviour (and capacity) will go. And with boredom as the driver, the urge for it to “act” may produce a wide suite of unpredictable outcomes.

One could counter that boredom in an AI capacity would only be problematic in the absence of a programmed code of ethics. But bored humans engage in elevated levels of aggression, with anything from vandalism to sadistic behaviour and even murder blamed on the experience. Presumably, humans carry within them socially determined and learned moral codes, so such a code is not a perfect solution for the potentially negative outcomes of boredom.

So, while boredom signals to us that what we are doing now is unsatisfying in some important way, it does not do the hard work of determining how we ought to respond. That is up to us; our responses may lean towards the maladaptive without careful reflection. In other words, boredom begs for constraints on action.

We would suggest the same is true for AI. While a sensationalist account might have us worrying about the rise of machines, a more composed assessment would suggest that we simply need to give serious consideration to the aims and uses of AI before we dive into attempts to imbue it with human qualities like boredom.