Adolescence

Why 25 Could Be the New 18

Adolescence should extend into the mid-20s.

Posted July 19, 2021 | Reviewed by Devon Frye

Key points

- The age at which teens are considered adults has fluctuated over the centuries, depending on society's needs.

- Teens can be cognitively on par with adults but struggle with emotion regulation and executive functioning.

- Modern demands suggest that extending our conception of adolescence could help today's youths.

In Part 1 of this series, I explain why we should think of adolescence as a period that extends into the mid-20s. In Part 2, I offer specific recommendations.

You don't need a Ph.D. in psychology to see that adolescents are struggling. In fact, the people who see this most clearly are the parents and teachers of our foundering teens. Adolescence, as we've conceptualized it since Piaget, has needed a revision for some time, but it took the COVID pandemic to make that need unmistakable. It is now time for adolescence to enter a new age.

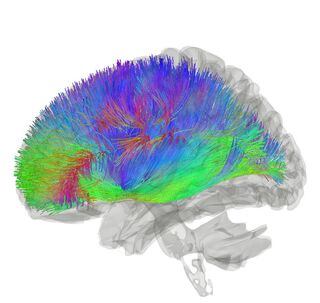

Though we've known for some time that children as young as 15 can demonstrate cognitive abilities on par with adults (Brown, 1975; Keating, 2004), contemporary neuroimaging research (Somerville, 2016; Tamnes et al., 2010) suggests that the human brain continues to develop well into the third decade of life. The latest development occurs in the prefrontal cortex and striatocortical circuits, the brain areas responsible for executive functioning and for synthesizing cognitive and emotional inputs during decision-making (Casey et al., 2016; Goldberg, 2001; Somerville, 2011).

As neuroscientist Leah Somerville noted in a 2016 New York Times interview (Zimmer, 2016): "Adolescents do about as well as adults on cognition tests, for instance. But if they’re feeling strong emotions, those scores can plummet. The problem seems to be that teenagers have not yet developed a strong brain system that keeps emotions under control."

Age of Adulthood

In doing research for this article, I expected to find evidence that the accepted age of adulthood (a.k.a. "the age of majority") had been gradually pushed back over the centuries as life expectancies increased and intellectual work replaced physical work. After all, the legal age of marriage in America was once 12 for girls and 14 for boys (Dahl, 2010), while the age of religious maturity in two of the world's oldest religious traditions, Judaism and Catholicism (marked by the rituals of Bar Mitzvah and Confirmation), has been set at around 13 for centuries (Minnerath, 2007; Olitsky, 2000).

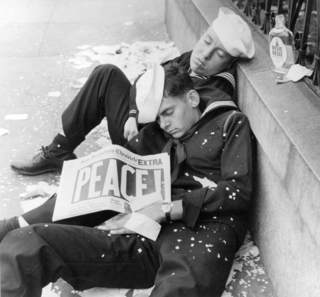

However, I then came across a well-researched law review article by Vivian Hamilton (2016) documenting how the accepted age of adulthood has fluctuated back and forth throughout history as a function of each culture's needs. For instance, the age of majority in America was once 21 but was gradually lowered to 18 in the mid-20th century to accommodate the need for soldiers during WWII.

Even more surprising is that 2,000 years ago, early Roman law set the age of full maturity at 25, the minimum age at which young men could independently engage in formal acts and contracts without advisement. Between the ages of 15 and 25, young Roman men were placed under the temporary guardianship of adults known as curatores, and "a curator’s approval was required to validate young males’ formal acts or contracts until they reached twenty-five years of age."

Indeed, it appears that our Roman ancestors understood something about adolescence that we could benefit from relearning: kids need more time to develop before we saddle them with the full expectations and responsibilities of adulthood. In ancient Rome, curators played a role for adolescents much like the one mentors, therapists, and guidance counselors play for today's teens, but for a much longer period of time.

As a psychologist who trained in a university counseling center and specializes in treating undergraduate and graduate students, I know from experience how important the modern counterparts of Roman curators can be in the lives of young adults in their mid-20s. Leah Somerville's research, which suggests that adolescents need more time to develop the neurological and behavioral mechanisms that keep their emotions from inhibiting their reasoning abilities, jibes with what I have seen in my clinical work with students in their 20s.

The ubiquitous pressures of social media alone have raised the emotional stakes of everything today's adolescents try to do, in ways no previous generation experienced. These pressures are too burdensome even for many healthy adults to handle, let alone for kids in their teens and 20s who are still waiting for the brain development and experiential learning that will help them build emotional resilience.

For these reasons, I believe we need to expand our current conception of adolescence to a period that extends past the teen years and into the mid-20s. In short, my experiences treating undergraduate and graduate students, as well as raising my own teenagers, have led me to conclude that we should now think of 25 as the new 18 in our expectations of what adolescents can psychologically handle.

See Part 2 of this article series on extending adolescence for specific recommendations.


References

Higgins, A., & Turnure, J. (1984). Distractibility and concentration of attention in children's development. Child Development, 55(5), 1799–1810. doi:10.1111/j.1467-8624.1984.tb00422.x

Schiff, A., & Knopf, I. (1985). The effects of task demands on attention allocation in children of different ages. Child Development, 56(3), 621–630. doi:10.2307/1129752

Keating, D. (2004). Cognitive and brain development. In R. Lerner & L. Steinberg (Eds.), Handbook of Adolescent Psychology (2nd ed.). New York: Wiley.

Kail, R. V., & Ferrer, E. (2007). Processing speed in childhood and adolescence: Longitudinal models for examining developmental change. Child Development, 78(6), 1760–1770. doi:10.1111/j.1467-8624.2007.01088.x

Brown, A. (1975). The development of memory: Knowing, knowing about knowing, and knowing how to know. In H. Reese (Ed.), Advances in child development and behavior (Vol. 10). New York: Academic Press.

Harvard Health Publishing. (n.d.). The adolescent brain: Beyond raging hormones. Harvard Health.

Dahl, G. B. (2010). Early teen marriage and future poverty. Demography, 47(3), 689–718. doi:10.1353/dem.0.0120

Minnerath, R. (2007, May 23). L'ordine dei Sacramenti dell'iniziazione [The order of the sacraments of initiation]. L'Osservatore Romano.

Olitsky, K. M. (2000). An encyclopedia of American synagogue ritual (p. 7). Greenwood Press. ISBN 0-313-30814-4

Tamnes, C. K., Ostby, Y., Fjell, A. M., Westlye, L. T., Due-Tonnessen, T., & Walhovd, K. B. (2010). Brain maturation in adolescence and young adulthood: Regional age-related changes in cortical thickness and white matter volume and microstructure. Cerebral Cortex, 20(3), 524.

Casey, B. J., Galván, A., & Somerville, L. H. (2016). Beyond simple models of adolescence to an integrated circuit-based account: A commentary. Developmental Cognitive Neuroscience, 17, 128.

Zimmer, C. (2016, December 21). You’re an adult. Your brain, not so much. The New York Times.

Goldberg, E. (2001). The executive brain: Frontal lobes and the civilized mind. New York: Oxford University Press.

Yeh, F. C., Panesar, S., Fernandes, D., Meola, A., Yoshino, M., Fernandez-Miranda, J. C., ... & Verstynen, T. (2018). Population-averaged atlas of the macroscale human structural connectome and its network topology. NeuroImage, 178, 57–68. http://brain.labsolver.org/