
Our Modern Pascal's Wager: The AI Uncertainty Matrix

Science and faith have long been rivals. They collide head-on in our AI world.

Posted April 2, 2024 | Reviewed by Abigail Fagan

Key points

- Artificial intelligence requires wise and skillful navigation as we sail into our uncertain future.

- Science and faith collide and converge as we try to determine how to safely and effectively use AI.

- "Pascal's Wager" can be a useful thought exercise to help us decide how best to proceed.

Artificial intelligence is already beginning to change our world rapidly, in ways most of us hadn’t even considered until OpenAI released ChatGPT on November 30, 2022. The clock is now ticking as AI companies race feverishly against one another to evolve and proliferate this powerful technology as quickly as possible. If AI ends up in everything, it will change everything.

As just one example, we will likely soon have AI-powered personalized assistants, far more capable versions of today's Siri, Alexa, and Cortana, to help us with, well, everything. What will such changes mean for humanity? How will they affect the way we live? Will people fall in love with these personalized AI assistants, as depicted in the prescient sci-fi movie Her? Unless progress grinds to a halt for some reason, I suppose we’ll soon find out.

We know that humanity’s relentless drive to pursue progress and make things better doesn’t necessarily mean that all of this “better” is “good” for us. How smart will AI get, anyway? Will tech companies' mad rush to evolve and proliferate artificial intelligence result in a game-theory-fueled race to the bottom? Will AI be a net positive or a net negative for humanity? Will it create a utopia or a dystopia? What’s your confidence level, or perhaps your faith, that humanity has the collective wisdom to use AI skillfully to improve our lives? It would be wise to ask ourselves such questions now.

Pascal's Wager

The emergence of AI, and the often religious zeal that surrounds it, rekindled my thoughts about Pascal's Wager. You might already be familiar with it, but in brief, the 17th-century French polymath Blaise Pascal devised this argument in defense of belief in a traditional, Christian view of God.

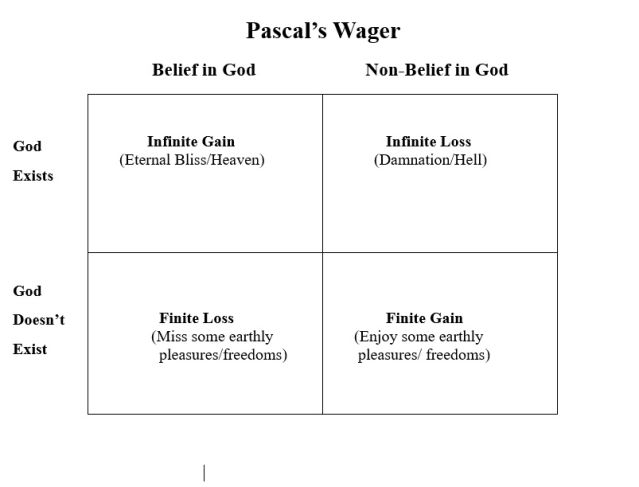

Pascal proposed a belief in this Christian view of God as a rational bet. This is because the potential benefits of believing in God (i.e., eternal happiness in heaven) far outweigh the finite costs of belief (i.e., living a religious life based on faith), especially when compared to the potential infinite loss of not believing if this Christian God does exist (i.e., eternal damnation). In a two by two grid, Pascal's Wager looks like this:

Applying Pascal's Wager to the Rise of Artificial Intelligence

While there are plenty of counterarguments to Pascal's original formulation, it struck me that we are now wrestling with modern versions of his wager. With AI, science and faith are colliding in a unique fashion, in real time. This is no hypothetical scenario. We are like a ship sailing into an ocean of uncertainty. Do icebergs lie ahead in this science-fiction world we are creating for ourselves? Are we like the passengers aboard the ill-fated Titanic, speeding into a sea of AI icebergs? The Titanic's sinking offers a cautionary tale, but are we heeding it as we sail into AI's uncertain future? How much faith do you have that things will somehow, invariably, work out for the best? Many of us, myself included, are doubting Thomases when it comes to whether technology, especially AI, will work out well for us.

The AI Uncertainty Matrix: A Modern Version of Pascal’s Wager

A modern version of Pascal’s Wager might be helpful as we weigh the potential of increasingly powerful AI systems to produce both world-changing benefits and catastrophic harms. The dawn of the AI era could be considered an inflection point of sorts, because it is perhaps only the second time in human history that science and faith have clashed with comparable existential implications. The first was the invention of the atomic bomb, as captured in Christopher Nolan's brilliant 2023 Oscar-winning film, Oppenheimer. People rightly feared that, for the very first time, we had created a weapon that could wipe out most or all of humanity.

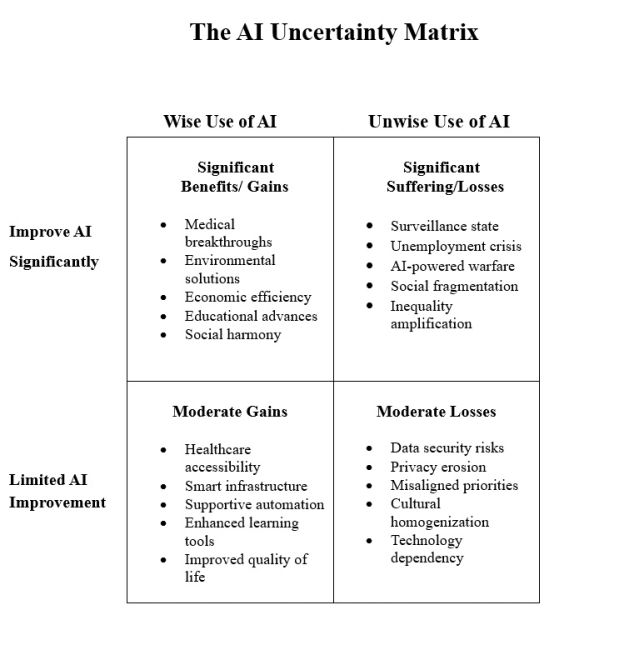

Let’s call ours The AI Uncertainty Matrix, and it would look something like this:

The trajectory of AI's evolution, including its power, its speed, and potential hurdles such as technical challenges (cost, energy use, reliability), legal matters, and regulatory constraints, raises critical questions. As we head into these uncharted waters, we must each ask ourselves: How much faith do we have that we will be able to improve AI significantly beyond its current capabilities?

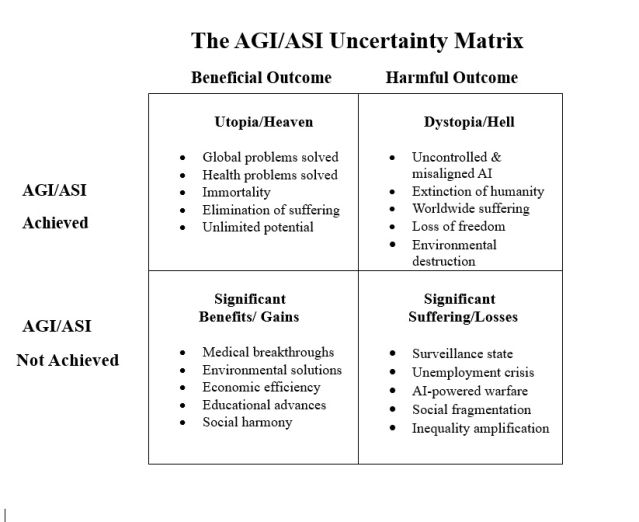

If AI does continue to scale up in power, will it achieve artificial general intelligence, AGI (i.e., AI can perform any cognitive task as well as any human can)? Would the evolution of AI continue to artificial superintelligence – ASI (i.e., AI is not only far superior to any human, but it is also superior to the combined intelligence of all humans)? Such possibilities have been explored within countless science-fiction books, movies, and television shows.

Now, let's apply a modern twist to Pascal’s Wager with these AI scenarios. For the sake of simplicity, we will combine AGI and ASI into The AGI/ASI Uncertainty Matrix, and it would look like this:

In What Do You Place Your Faith?

I don’t know what the future holds. I have no crystal ball. The intellectually honest answer to the questions above should be, “I don’t know.” I do, however, have absolute faith in humanity's ability to make AI more powerful. Science created AI and will continue to refine it, as it has all past technologies. Fueled by capitalism, our free market, the glittering allure of profits, and our innate drives, humans excel at making things better. Add the irresistible temptation of becoming the “Neil Armstrong of technology” by being the first to create AGI, and it is safe to bet that AI will continue to evolve.

But what happens if we do achieve these unprecedented levels of advancement? This question invites us to consider not only AI's potential to transform our world but also the profound responsibilities that come with wielding such power. As AI evolves, we must acknowledge that its potential for benefit and its potential for harm increase together. We cannot have one without the other. It's not an either/or situation but a both/and. In yin-yang fashion, the same power that could make the world a utopia could also be used to create a dystopia.

Now, how much faith do you have that humanity has the collective wisdom to use AI wisely and skillfully for the betterment of us all? And how much faith do you have that the tech companies and the open-source community racing to build more powerful AI systems are prioritizing humanity's future well-being over profits or other motives?

Our Individual, and Collective, Leaps of Faith

We all have to make a leap of faith here about what will happen in the sci-fi world we are creating for ourselves. This is where my faith falters. I don’t know about you, but what I’ve seen of humanity does not give me as much faith as I’d like that we have the collective wisdom to use AI skillfully. We have all seen enough in our lifetimes to know that, at least sometimes, humans can be foolish, selfish, greedy, and short-sighted.

Perhaps the greatest leap of faith we must make is in ourselves, in humanity itself. One thing we do know about humanity is that when we work together, there is virtually nothing we can’t do. As we inevitably encounter problems with AI, can humanity overcome its constant infighting and work together to harness AI's enormous potential for the collective good? Can we overcome what has always been our greatest obstacle: ourselves?

“There is no fate but what we make for ourselves.” This line from the Terminator series, a sentiment voiced by both John and Sarah Connor, captures the essence of our collective agency in shaping the future. While my faith that humanity will rise to the occasion is uncertain, I know it is possible. We have the capacity for greatness, including overcoming our differences for the collective good. As John Lennon sang, just “imagine” what we could do if we collectively harnessed the power of AI to improve our world.

Regardless of how AI evolves, if I must place a wager on humanity's future, I believe our best bet is greater unity. I don’t know whether there is a God or an afterlife, or what will happen with AI, but I do believe that we are all profoundly connected in ways we can never fully grasp in this world. Embracing our inherent interconnectedness gives us the best odds of creating the brightest possible future. Together, harnessing our collective wisdom and strength, we can steer the course of AI toward improving our world and ensure that technology amplifies our shared humanity rather than diminishes it.