
3 Signs You’re a Scientific Thinker

The toolkit skeptics use to distinguish truth from fiction.

Updated March 23, 2024 | Reviewed by Jessica Schrader

Key points

- From witch burning to vaccine hesitancy, failure to think scientifically can cost lives.

- Scientific thinkers strive to match their confidence in a belief with the strength of the evidence.

- Pitfalls such as confirmation bias can hinder our ability to think critically about a claim.

“At the foundation of all science is a scientific attitude that combines curiosity, skepticism, and humility.” –Myers and DeWall

Biased reasoning has permeated American history, from justifications of slavery to end-of-the-world predictions, the Satanic Panic, and magical thinking, sometimes with dire consequences. Without scientific thinking, and without guarding against motivated reasoning (i.e., reasoning that is biased toward emotionally desirable conclusions), we can justify any belief, for better or worse.

For example, Carl Sagan, a prominent champion of scientific thinking, once described how women suspected of being witches were treated during the witch trials. In his words:

It was nearly impossible to provide compelling alibis for accused witches: The rules of evidence had a special character. For example, in more than one case a husband attested that his wife was asleep in his arms at the very moment she was accused of frolicking with the devil at a witch’s Sabbath; but the archbishop patiently explained that a demon had taken the place of the wife. The husbands were not to imagine that their powers of perception could exceed Satan’s powers of deception. The beautiful young women were perforce consigned to the flames.

Any behavior from an accused woman was treated as evidence of witchcraft. This is a classic example of an unfalsifiable claim, that is, an assertion that no possible evidence could disprove. The lack of scientific thinking that dominated this period led to the deaths of “perhaps hundreds of thousands, perhaps millions” of girls and women.

As technology advances, thinking like a scientist is more important than ever. We are bombarded with fake political news articles, altered images, and miracle weight-loss ads. Falling for pseudoscientific claims can have real-world consequences, such as vaccine hesitancy, which the World Health Organization has classified as one of the top 10 threats to global health. Thinking like a scientist can help inoculate us against unfalsifiable claims and motivated reasoning.

3 signs that you think like a scientist

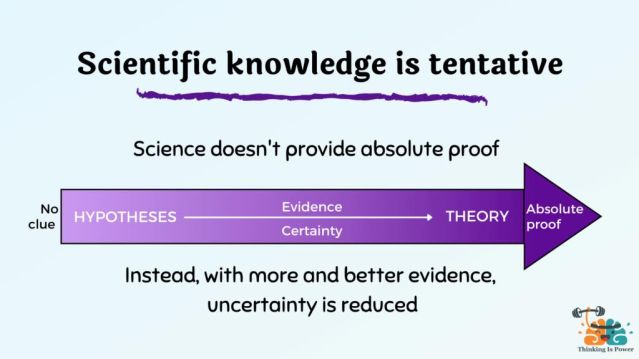

1. You follow the evidence. Philosopher David Hume wrote, “A wise man proportions his belief to the evidence.” Scientific thinkers practice intellectual humility. They are happy to change their minds, or to grow more confident in a belief, as more evidence comes in, and they likewise lose confidence in a belief when presented with credible counterevidence. A good scientist withholds judgment on claims backed by little evidence, or when unaware of the evidence, and can admit when they are uncertain.

As Bob Garrett and Gerald Hough stated:

You seldom hear scientists use the words truth and proof, because these terms suggest final answers. Such uncertainty may feel uncomfortable to you, but centuries of experience have shown that certainty about truth can be just as uncomfortable. ‘Certainty’ has an ugly way of stifling the pursuit of knowledge.

Organizational psychologist Adam Grant encourages scientific thinkers to be flexible about their beliefs but to hold fast to their values. For example, a psychiatrist can stay open-minded about which depression medication is best while remaining committed to the value of helping patients. Scientific thinkers value curiosity and learning over being right.

2. You know which evidence is trustworthy and which is not. Scientific thinkers are selective about which information they weigh most heavily when forming an opinion. They trust experts over entertainers, studies over anecdotes, and experiments over correlational research (see the Hierarchy of Scientific Evidence). Scientific thinkers examine the credentials and expertise of those making a claim. They also watch for conflicts of interest, such as who is funding or benefiting from the research.

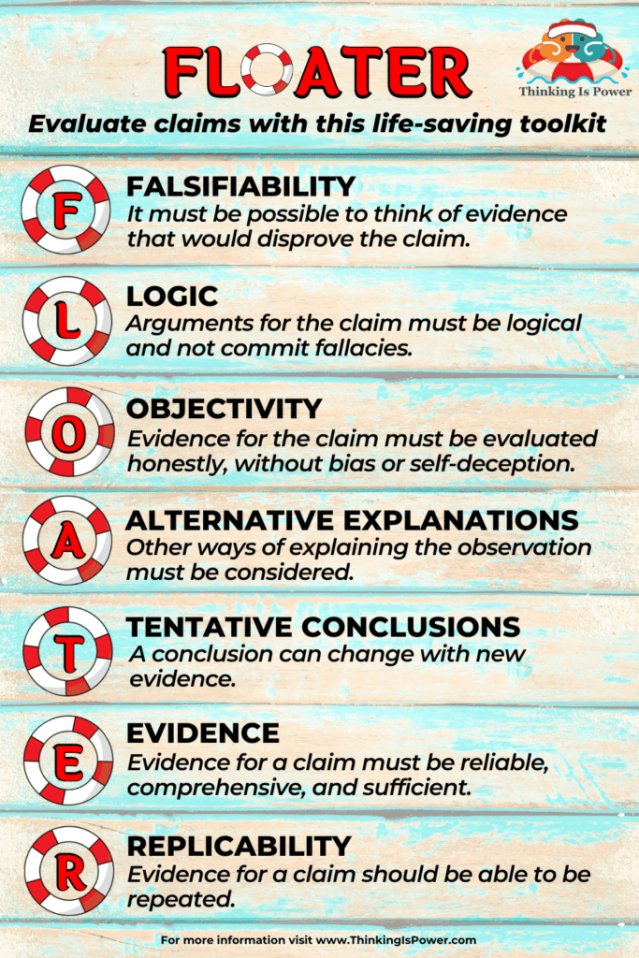

Biology professor Melanie Trecek-King suggests evaluating information with the acronym FLOATER, which stands for:

- Falsifiability. It must be possible to think of evidence that would disprove the claim.

- Logic. Arguments for the claim must be logical and not commit fallacies.

- Objectivity. Evidence for the claim must be evaluated honestly, without bias or self-deception.

- Alternative Explanations. Other ways of explaining the observation must be considered.

- Tentative Conclusions. A conclusion can change with new evidence.

- Evidence. Evidence for a claim must be reliable, comprehensive, and sufficient.

- Replicability. The evidence for a claim should hold up when others repeat the study or observation.

When evaluating whether a claim is true, scientific thinkers prefer lateral reading to vertical reading. Vertical reading means staying on a single page and scrutinizing it for signs of credibility; lateral reading means leaving the page to check the claim against known credible sources. Researchers have found that the more time readers spend on an untrustworthy site, the more likely they are to believe its untrustworthy claims, even when they spend that time analyzing the page's credibility. Because web articles can be deceptive, strong critical thinkers read laterally instead.

3. You acknowledge your susceptibility to biases and fallacies. In practicing intellectual humility, scientific thinkers acknowledge the many biases and fallacies they may fall for and work to reduce their influence (see Logic and Objectivity above).

As Trecek-King has said:

Humans have long sought to explain the world around us. Our ancestors often attributed natural events, like illnesses, storms, or famines, to the work of supernatural forces, such as witches, demons, angry gods, or the spirits of the dead. We notice patterns, even when they’re not real, and we jump to conclusions based on our biases, emotions, expectations, and desires.

While the human brain is capable of astonishing levels of genius, it’s also remarkably prone to errors. It’s adapted for survival and reproduction, not for helping us determine the efficacy and safety of a vaccine or determining long-term changes in global climate. Personal experiences and emotional anecdotes can easily fool us, despite how convincing they may seem.

Some of the most common biases and fallacies include:

- Confirmation bias: Tendency to search for, favor, and remember information that confirms our beliefs.

- Overconfidence effect: Tendency to overestimate our knowledge and/or abilities.

- Ad hominem: Attempts to discredit an argument by attacking the source.

- Appeal to (false) authority: Claims that something is true based on the position of an assumed authority.

- Appeal to emotions: Attempts to persuade with emotions, such as anger, fear, happiness, or pity, in place of reason or facts.

- Mistaking correlation for causation: Assumes that because events occurred together there must be a causal connection.

- Red herring: Attempts to mislead or distract by referencing irrelevant information.

- Slippery slope: Suggests an action will set off a chain of events leading to an extreme, undesirable outcome.

- Straw man: Misrepresents someone’s argument to make it easier to dismiss. (See A Tool-Kit for Evaluating Claims)

As society continues to change, critically evaluating evidence remains imperative. Whether we are students, educators, therapists, researchers, policymakers, or voters, we can work to improve society by thinking like a scientist.

References