A Win for the Scientific Process

Study results publicized on this blog spark scientific controversy.

Posted February 14, 2020 Reviewed by Davia Sills

[Note on edits: Both Armand Chatard and Joe Hilgard, who were mentioned in the article, have emailed me updates. I've therefore updated the text to reflect these comments.]

There was some blowback on a blog post I wrote a few weeks ago. The post reported the results of a new study, posted publicly on PsyArXiv, that attempted to replicate a highly cited study from several decades ago. The study concerned one particular test of Terror Management Theory, which suggested that U.S. undergraduate students who were made to think about their own death would end up feeling an existential threat that would, in turn, lead them to embrace pro-U.S. and reject anti-U.S. sentiments. The big conclusion from the write-up was that, with a much larger sample size and lots more labs involved, the original finding didn't replicate.

My supervisor started getting emails about the blog post, with one not-to-be-named researcher arguing that it was unfair that I was commenting on the article before it went through peer review. For those not in academia, peer review is the normal process that is used to determine if a research article will get published. The authors send the article to a journal, and a journal editor sends it out to two or more "peers"—other scientists working in the same area—to ask for their opinion on whether the article is good enough to publish. Putting out an article before peer review, and especially commenting on it, was (according to my critic) inappropriate.

So why did the authors post the article early? Because the Credibility Revolution is not just about replicating studies to see how reliable research is; it's also about transparency and openness. PsyArXiv is a preprint service developed at the Society for the Improvement of Psychological Science (SIPS), following the model of the physics research community's arXiv preprint service.

In the physics community, the journal publication process, which often drags out for years, is seen as too slow to keep up with new developments. Once a researcher has an important new idea to share, they make it publicly available for consumption—and scrutiny. The idea is immediately available, but it's understood that what has been made available is not the final draft. It's close enough to final that the author is willing to release it, but it still might have some "bugs." In fact, allowing other physicists to scrutinize the results publicly (as opposed to just having a couple of peer reviewers read it) might be a better way to catch mistakes.

Biology has a similar model, with a preprint server called bioRxiv. Economics also has a similar sort of system, where economists make "working papers" publicly available, sometimes for years, before they finally get approved and published in an official journal. These working papers get presented at conferences, and part of the function of this two-step process is to see if the broader research community catches any errors.

As it turns out, after the authors of the terror management replication project made their results public, a group of terror management theory proponents scrutinizing the results did find an error! Since the original authors had made all of their data and documentation available, these terror management theory experts were able to double-check the work. When the TMT guys accounted for this error and changed the type of test being conducted, the effect did replicate, but only in the labs that had guidance from experts!

The re-analysis double-checked the preregistered exclusion rules, which specified that very small studies (those in which fewer than 40 people were included in each condition) should be excluded.
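For readers who like to see the logic spelled out, here is a minimal sketch of what applying a rule like that might look like. The lab names and sample sizes are made up for illustration, and I am assuming one particular reading of the rule (a lab is kept only if every condition has at least 40 people); this is not the replication team's actual code.

```python
# Threshold described in the post: at least 40 people per condition.
MIN_PER_CONDITION = 40

# Hypothetical labs: (name, n in death-reminder condition, n in control condition).
# These numbers are invented for illustration and are not from the real data.
labs = [
    ("Lab A", 55, 52),
    ("Lab B", 38, 45),
    ("Lab C", 40, 40),
]


def meets_rule(n_treatment, n_control, minimum=MIN_PER_CONDITION):
    """Assumed reading of the rule: every condition needs at least `minimum` people."""
    return n_treatment >= minimum and n_control >= minimum


# Keep only the labs that satisfy the exclusion rule.
kept = [name for name, n_t, n_c in labs if meets_rule(n_t, n_c)]
print(kept)  # ['Lab A', 'Lab C']
```

Lab B drops out because one of its conditions falls just short. Whether a lab near the threshold is in or out can change which studies feed into the final analysis.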

They also changed the type of test being used from "two-tailed" to "one-tailed." The default assumption in psychology is that statistical tests will be two-tailed, meaning that you are open to the possibility that a difference between two groups could go in either direction (e.g., the effect could be in the expected direction, or it could reverse in the new study). However, prominent methodological researchers have argued that, in cases like that of a replication study, we should only use a one-tailed test, because we only care if the new result matches the pre-specified direction.

The preregistration document does not specify whether the test is one-tailed or two-tailed, and so the original authors used the default two-tailed test. The re-analysis team thought this didn't make as much sense, and so changed the analysis.
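To see why the choice of tails matters, here is a rough sketch using a simple z-test with a normal approximation. The test statistic of 1.8 is a hypothetical value I picked for illustration, not a number from the replication data, and the function name is my own.

```python
from statistics import NormalDist


def p_values(z):
    """Return (two_tailed, one_tailed) p-values for a z statistic.

    The one-tailed value assumes the predicted direction is a positive z.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    one_tailed = 1.0 - std_normal.cdf(z)
    two_tailed = 2.0 * (1.0 - std_normal.cdf(abs(z)))
    return two_tailed, one_tailed


# Hypothetical test statistic, chosen only to illustrate the point.
two_tailed, one_tailed = p_values(1.8)
print(f"two-tailed p = {two_tailed:.3f}")  # two-tailed p = 0.072
print(f"one-tailed p = {one_tailed:.3f}")  # one-tailed p = 0.036
```

With the usual .05 cutoff, the same hypothetical result counts as significant under a one-tailed test and not significant under a two-tailed test. When the effect lands in the predicted direction, the one-tailed p-value is exactly half the two-tailed one.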

This is exactly the purpose of the preprint step in the scientific process. The re-analyzers took issue with my blog post on the preprint and a podcast episode discussing the preprint, and they announced the error they caught in a sassy tweet!

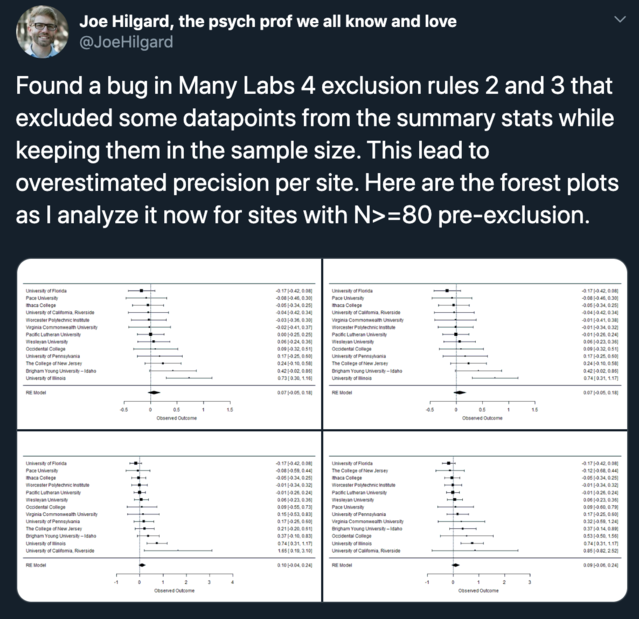

His interest piqued, another researcher (one who studies violence in video games and has no particular background or interest in terror management theory) dove into the data himself. Again, he was able to do this because all the materials and data are publicly available. He found that there was a further error in the original analysis code. [Note: I had originally and incorrectly stated this error was on the part of the re-analyzers.] According to Chatard and Hilgard's newest analyses, there is a just-significant effect, but only in the expert labs. This suggests that the conclusions of the Many Labs 4 (ML4) research team were not correct.

So, where does the saga stand now?

1. Researchers posted a preprint reporting preliminary results from a big, coordinated data collection effort. (The Terror Management Effect: looks unreliable)

2. These results were publicized on this blog and in a podcast.

3. Another group of researchers re-analyzed the public data, finding that the original authors had made a mistake. (The Terror Management Effect: looks reliable among expert labs)

4. A solo researcher made a nights-and-weekends project of re-re-analyzing the results, confirming that there were errors in the original results. (The Terror Management Effect: looks reliable among expert labs)

Of course, this is still a developing situation. There may be a re-re-re-analysis that changes the results. [Note: there was a further development! The language above has been changed to reflect this. Thanks to Armand Chatard for reaching out to me to discuss the further analyses, and to Joe Hilgard for getting back to me so quickly to confirm details.]

Does this mean that the original researchers were wrong to post their preprint publicly? No, the process went exactly as intended. They posted a rough draft of their paper to get feedback before (or possibly concurrently with) getting official feedback from a journal. This led to lots of people scrutinizing the results, catching mistakes, and improving the final analysis that will get published in the official publication (when it does finally appear in a scientific journal).

Does this mean that I was wrong to write about the results when they were still in a preliminary stage? No, I consider this blog to be a source of science news, not an official journal. So when news breaks in psychology research, I don't think it's a problem to report on it. However, given the interactions I've had with people, I would do things differently next time. In particular, I would give a clearer explanation of what a preprint is (a rough draft of new scientific results) and emphasize that the results were not yet published in an official journal.

Overall, I am hopeful and energized about how this played out. People always say that the scientific process is self-correcting, and this is a perfect example of what self-correction looks like. It is a group of researchers who care deeply about the details of their work, scrutinizing the results carefully to get the best possible answer, with the results sometimes hinging on these details.

[Note: the text has been updated to reflect new analyses.]

As it turns out, things have turned around again since I posted this yesterday. I'm still hopeful and energized that the scientific process is working. I am not a terror management theory hater; my undergraduate honors project was based on this theory. One of my favorite classes in college was a philosophy class delving deeply into existentialism, the philosophical underpinnings of terror management theory.

I truly believe that fear of death can have large and important effects on people's behaviors. Current evidence suggests that the effect is reliable, but only in groups who used the expert advice. We're getting a better and better grip on when effects in existential psychology do and don't work.