Should We Really Expect Politicians to “Follow the Science”?

Most politicians accept science that agrees with their politics, not the reverse

Posted January 29, 2021 Reviewed by Devon Frye

Climate change. Vaccination. Organic food. Genetically modified organisms (GMOs). Renewable energy. Nuclear power. These are just some of the issues politicians in the recent past have built into their platforms. For every single one of them, there is insight to be gleaned from looking at the science.

It is also not uncommon for politicians to make claims that their decisions will be “grounded in science” or that they will “only listen to the science.” Lest you think I am speaking hyperbolically, please note Exhibits 1 and 2.

Science Tends to Be Really Messy

In my last post, I argued that science is defined as a (1) systematic (2) way of building and organizing knowledge that (3) requires testable explanations and predictions. I further argued that “only works that meet all three required elements count as science; anything that does not is non-science,” and that a study or set of studies meeting the basic requirements is not necessarily high-quality science.

This leads to several problems with “following the science.” First, not every thinkpiece, journal article, or published report should be considered science. Many thinkpieces offer interesting arguments, but whether those arguments are grounded in an understanding of the actual science (as opposed to cherry-picking studies or misrepresenting the science to support one’s argument) is often debatable.[1]

Second, not all academic journals convey scientific evidence,[2] nor do all scientific journals necessarily convey high-quality evidence. Third, scientists themselves are not immune to ideologically driven biases, meaning that they may (un)wittingly design studies to support their preconceived conclusions or interpret the implications of their research in ways that favor those conclusions.

Taken together, this means that the average layperson or politician likely does not know how to determine what the science actually says. Instead, politicians are often left to draw conclusions from the information conveyed to them, which creates an opportunity for bias to be introduced.

Ideological Biases

We tend to surround ourselves with people who happen to be more like us. While this may sometimes take the form of physical similarities (e.g., age, sex, race), in a lot of cases it takes the form of similarities in how we see the world—the ideological biases we bring to resolving problems and making decisions.

While it is easy to oversimplify categorizations of ideological bias, such as left versus right or conservative versus liberal, most people’s ideological biases are more nuanced than that. AllSides (2020), for example, provides a list of 14 types of ideological bias it considers when categorizing sources in its media bias chart. This list is by no means authoritative or exhaustive,[3] but it is undeniable that all people possess some form of ideological bias, and these biases don’t necessarily fit neatly into simple categories.

There isn’t anything innately wrong with ideological biases. They become a lens through which people evaluate and weigh various decision options. For example, someone with a strong bias toward fiscal conservatism may be swayed heavily by the financial cost of a new government program, whereas someone less fiscally conservative may be swayed more heavily by the program’s potential benefits. The different biases politicians bring can therefore lead them to different conclusions about the same program. It isn’t that either conclusion is necessarily wrong;[4] instead, the decision-makers may simply have weighted the various considerations differently.

These differences can add value if the differing points of view are given sufficient weight. If decision-makers lack diversity in important ideological biases, or if multiple positions are not given the opportunity to be expressed and considered, the result can easily be flawed decisions and an increased likelihood of unintended negative consequences.[5]

We also know these biases can vary from issue to issue. Someone may have an authoritarian bias when it comes to gun ownership but a libertarian bias when it comes to abortion. Some of this variability arises as a result of the activation of specific values related to that issue.[6] One’s bias on the issue of gun control could be influenced by the activation of strong security-focused values, whereas one’s bias on the issue of abortion could be influenced by the activation of strong libertarian-focused values. The strength of those values can then affect the strength of the bias we bring to that issue.

Bias Strength

Bias strength plays an important role in how likely people are to reach a conclusion different from the one their bias predicts. The stronger the bias, the less likely people are to adjust their predicted conclusion when presented with new information that conflicts with it. Someone who is determined to avoid any restrictions on gun ownership, for instance, will be motivated to find flaws in the evidence supporting the potential benefits of some restrictions.

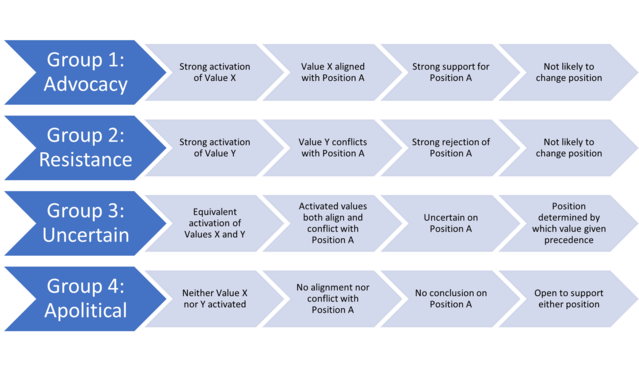

No single issue is likely to activate strongly held values in everyone, so people will vary in the amount of bias they bring to it. This is why political issues commonly produce at least four groups. A simple representation of this appears in Figure 1,[7] and it has implications for decision making not only for politicians but for people in general.

First, Groups 1 and 2 are more likely than Groups 3 and 4 to hold strong biases that increase the likelihood of reaching the biased, or predicted, conclusion regarding Position A. The stronger the bias that develops among Groups 1 and 2, the less likely their members are to change their position in response to new information that contradicts it, even if that information is of high quality.

Second, it is easy to see how political parties, special interest groups, and other constituencies may play on values X and Y as a way of crafting a platform on an issue. This is readily seen in discussions surrounding gun control, abortion, and other contentious issues.

Finally, how an issue is framed can convey information that helps activate specific values and their corresponding biases. Sher and McKenzie (2006), for example, demonstrated that whether a glass is described as half full or half empty depends on whether the glass was seen as originally full or originally empty. The same dynamic can easily apply to political decision making, with far more profound effects.

Together, these implications suggest there are likely to be a lot of pitfalls when trying to “follow the science.”

The Challenge of Following the Science

Let’s start with a little refresher about what we can conclude so far:

- Science tends to be really messy; it is often difficult to separate signal from noise and to distinguish good science from bad science and from non-science.

- The more strongly we value something, the more likely we are to develop a bias that will affect our decision making.

- The process of science is not itself biased, but scientists are all human; thus, their values may (un)wittingly impact their research, the conclusions they draw from that research, and how that research is framed.

- Politicians possess strong values that lead them to ally with various constituencies (e.g., political parties, special interest groups, individuals) whose values they perceive to be aligned with their own.

- These constituencies will provide evidence that supports a position aligned with the politicians’ strong values.

- Politicians more readily accept evidence that is framed as aligned with their strongly held values and rebuff evidence that is misaligned with them.

- Politicians develop strong biases toward a given position; the stronger the bias, the more likely politicians are to accept supporting evidence and be critical of refutational evidence.[8]

Because politicians are inclined to favor evidence that is aligned with their strong values and to discount evidence that is misaligned with them, it is nearly impossible for them to “follow the science.”[9] Even when there is scientific consensus on an issue, applying that consensus to laws and regulations involves value judgments about the costs and benefits of various proposed actions. Science cannot tell us, for example, whether the costs of lockdowns are worth their benefits for controlling a pandemic, or how to weigh the trade-offs between the effects of a changing climate and the effects of various proposed initiatives for reducing carbon emissions. How politicians reach conclusions on those topics is as much about their own strongly held values and biases as it is about the actual science. And, in my view, it is misleading to claim otherwise.

Footnotes

[1] Peer review is supposed to help with this, but the degree to which it does is also debatable.

[2] Some even believe that the presence of an article in an academic journal means it represents high-quality science.

[3] There are almost always opportunities to identify nuances that complicate any higher-order categories.

[4] What pundits and tweeple often fail to recognize is that their agreement with a decision may be based on the (mis)alignment between their biases and the decision that was made, rather than on whether it was objectively a correct or incorrect decision.

[5] We have seen this in many decisions in which sufficient attention was not paid to those with different biases, such as the intelligence community’s conclusion that Saddam Hussein had weapons of mass destruction and Kennedy’s decision to approve the Bay of Pigs invasion.

[6] Several taxonomies of values exist, such as those developed by Schwartz, by Rokeach, and by Musek.

[7] Admittedly, we could complicate this by expanding the number of values that are strongly activated, which would lead to substantially more than four groups. This regularly occurs, for example, when politicians endorse one aspect of a bill but not another. It likely results from the moderate to strong activation of one or more values that are aligned with the position alongside one or more values that conflict with it. Thus, Group 3 might become Group 3A, Group 3B, and so on.

[8] This is a big reason why it is important to have representatives of all four groups shown in Figure 1. Without such representation, decisions are much more likely to be heavily weighted toward confirmatory evidence, with much less attention given to refuting evidence. Furthermore, if too few people fall into Groups 2, 3, and 4, there is little need to actually consider competing evidence before decisions are made, increasing the likelihood that new laws and regulations will fail to deliver their intended benefits and may produce unintended consequences.

[9] This can become even more problematic when adding on issues surrounding donor influence and other financial conflicts of interest, but even if those were removed, ideological biases will always be present.