How the Brain Learns Best

As neuroscience unlocks the secrets of learning, it’s more important than ever to take a hard look at screens.

By Jared Cooney Horvath, Ph.D., M.Ed. Published July 2, 2024; last reviewed July 2, 2024.

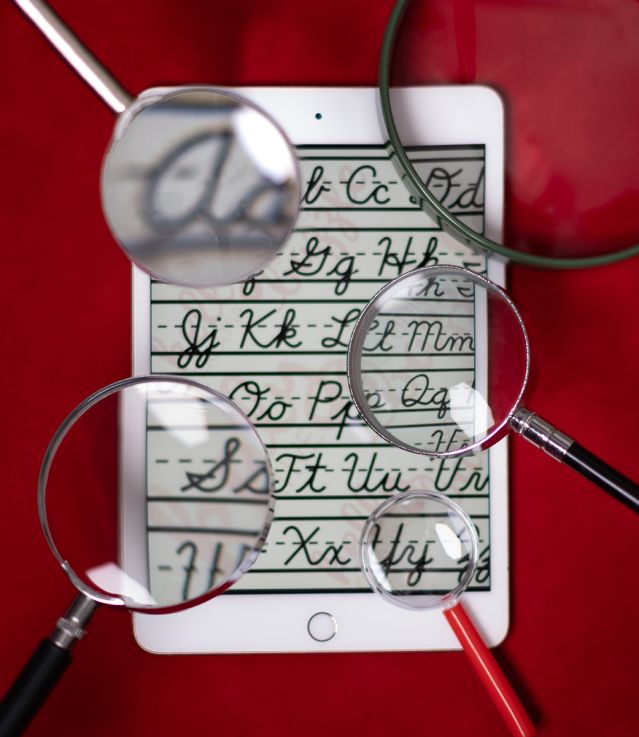

About a year ago, Sweden took a great leap forward by taking a giant step backward: Its education system formally rebalanced the use of digital tools and traditional teaching strategies by embracing such conventional practices as reading physical textbooks and handwriting on paper while progressively decreasing reliance on computers. No less an authority than the Karolinska Institute had found that digital tools impair rather than enhance student learning.

Over the past couple of decades, as schools and just about every other organization enthusiastically adopted digital technology—what Sweden’s minister of education calls “an experiment”—neuroscientists exploring human cognition were consolidating research about how the brain best takes in information.

The findings are instructive for everyone.

1. Learning Requires Empathy.

In 1966, researchers released ELIZA, the world’s first digital psychotherapist. Since then, pundits have mused about the inevitability of digital chatbots replacing human beings in the therapeutic relationship.

Despite more than five decades of technological advances, corporeal mental health professionals continue to thrive, with estimates placing their positive impact on symptoms of depression and anxiety at three and five times, respectively, that of their digital counterparts.

Why has artificial intelligence not lived up to expectations in this context? Because the operative word in “therapeutic relationship” is “relationship.” Decades of research consistently show that the relationship between therapist and patient is far and away the greatest predictor of therapeutic success, with some data suggesting that 80 percent of the outcome is attributable to a strong empathetic relationship.

The same holds true for learning. Learning is interpersonal. The specific stimuli used to drive learning are secondary to the person delivering them. In fact, after analyzing data from thousands of studies, educational researcher John Hattie has reported that a strongly empathetic student-teacher relationship has 2.5 times the impact on learning of one-on-one instruction alone.

People like Bill Gates and Sal Khan contend that achievement gaps across education can (and will) be closed by employing ChatGPT and other language models as personal tutors. But machines can’t motivate and inspire the way an empathetic relationship can.

The development of empathy depends on the hormone oxytocin, a biological marker of social bonding and relationship formation. For a long time, it was thought that only touch, such as a hug or a hand on the shoulder, could trigger the oxytocin response. But it is now clear that oxytocin release can be triggered by purely psychological means as well. Although the specifics are still unclear, this appears to require a sense of safety, some kind of care, and one other very specific thing: the sound of the other person’s voice (even over the telephone). Text-based communication does not release oxytocin.

When two individuals release oxytocin simultaneously, their brain activity begins to synchronize. This process, called “neural coupling,” is the neurological biomarker of empathy and leads individuals not only to learn from each other but also to literally start thinking like each other.

Imaging studies show that teacher-student neural coupling is associated with learning: The more the student’s brain mirrors the teacher’s brain, the greater the assimilation of information in classroom settings. We can laugh with AI, sympathize with AI, and perhaps even develop a crude form of love for AI, but the person-to-person nature of empathy rules out the emergence of this particular cognitive pattern.

This is undoubtedly one of the reasons why 85 percent of students in tuition-free online learning programs, and over 50 percent of those in fee-paying programs, never complete them. Without empathy, individuals become passive receivers of information with little impetus to push through the inevitable struggles of the learning process.

2. Creativity Demands Personally Encoded Knowledge.

Everyone agrees that it’s important to have the opportunity to exercise higher-order cognitive skills like critical thinking and creativity. Many are hoping that ChatGPT and other AI tools, which can access and organize all the world’s knowledge in seconds, can free people from the need to memorize and learn information so that they can focus instead on cultivating deeper reasoning abilities.

But in order for individuals to apply higher-order thinking skills to a set of facts, those facts must first be internally embedded within their long-term memory. Long-term memory is the fulcrum of human cognition. Information is largely unusable until it is deeply encoded and organized within a person’s prior knowledge structures. Having access to information does not obviate the need to learn that information if you ever hope to bring your skills to bear upon it.

Consider creativity. I’d like to think I possess this higher-order skill and apply it daily in my job when interpreting data, building experimental paradigms, or developing hypotheses. Unfortunately, if you asked me to apply my creative skills to car repair, something I know absolutely nothing about, there’s little chance I’d demonstrate any meaningful insight. And even if you gave me access to information about car repair (say, through Wikipedia or online videos), I’d be no closer to bringing my creative abilities to bear; the best I could do is copy and paste or mimic what I’ve seen.

It is not until the information becomes embodied within my schema for the world that I will begin to meaningfully manipulate, rearrange, and play with it. This is why learning is often broken up into surface, deep, and transformative stages: Later stages require passing through the earlier ones.

Whether people use instruction manuals or ChatGPT to look up the proper finger placement for myriad guitar chords, they still need to learn the chords and develop fluency before jumping into classical composition, which requires deep knowledge of (not mere access to) finger placement. The same holds true for every field of learning. Thinking critically and logically is not possible without background knowledge.

3. It Takes Undivided Attention.

Perhaps the best way to conceive of human attention is as a filter. Much like the way 3-D glasses allow only certain wavelengths of light to reach the retina, attention allows only relevant information to pass into conscious awareness; irrelevant information is blocked.

What, then, determines whether a particular bit of information is relevant?

Like board games, every task we undertake as human beings comes with its own unique set of rules that dictate what actions are required for success. For instance, to read these words successfully, your “reading rule set” dictates that you must move your eyes from left to right, hold each word in memory until the end of the sentence, and use your fingers to flip between pages.

Whenever we engage with a task, the relevant rule set must be loaded into a small area of the brain called the lateral prefrontal cortex (LatPFC). Whatever rule set is being held within this part of the brain will ultimately determine what the attentional filter deems relevant or irrelevant.

It is important to know that the LatPFC can hold onto only one rule set at a time. This is why it is impossible for human beings to multitask; the best we can do is quickly jump back and forth between tasks, swapping out the rule set within the LatPFC each time.

Jumping between tasks, however, incurs three significant costs. The first concerns time. It takes the brain about 0.15 seconds to swap out a rule set, during which time all external information stops being consciously processed and learning slows considerably (a phenomenon called the “attentional blink”).

The second concerns accuracy. Whenever we jump between tasks, there is a brief period, known as the “psychological refractory period,” during which the two rule sets blend together and overall performance suffers.

The third concerns memory. Memories are typically processed by the brain’s hippocampus. When we multitask, however, memories are more often processed by the striatum (an area of the brain linked to reflexive processes), so they tend to form as subconscious memories, which are difficult to access and use in the future.

Multitasking is one of the worst things human beings can do for learning and memory. That may be why today’s students are doing poorly on tests of factual knowledge, composition, and the application of higher-order thinking skills.

A pre-COVID-19 survey exploring how U.S. students ages 8 to 18 use digital technologies shows how they spend their time each week:

- 10 hours 44 minutes playing video games

- 10 hours 2 minutes watching television or film clips

- 8 hours 14 minutes scrolling social media

- 7 hours 32 minutes listening to music

- 3 hours 25 minutes doing homework

- 2 hours 5 minutes doing schoolwork

- 1 hour 14 minutes reading for pleasure

- 52.5 minutes creating digital content

- 14 minutes writing for pleasure

Accounting for the fact that school is in session only 36 weeks per year, the data suggest that students spend nearly 200 hours annually using computers for learning purposes, and more than 2,000 hours using the very same tool to jump rapidly between scattershot media content. Computers are multitasking machines.

This may be why, when using a computer for homework, learners typically last fewer than 6 minutes before turning to social media, messaging friends, or other digital distractions, and why, when using a laptop during class, they typically spend 38 minutes of every hour off-task.

Because computers are so often used for something other than learning, trying to shoehorn learning into them places a very large (and very unnecessary) obstacle between the learner and the desired outcome. To learn effectively while using a computer, people must expend an incredible amount of cognitive effort battling impulses they’ve spent years honing, a battle they lose more often than not.
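The annual totals quoted above can be reproduced from the weekly survey figures. The Python sketch below is one possible reading, not the author’s stated method: it assumes homework and schoolwork count only during the 36 school weeks, while every other listed category counts across all 52 weeks of the year (the 52-week figure is an assumption). Under that reading, the totals come out to 198 hours for learning and 2,021.5 hours for everything else, consistent with “nearly 200” and “more than 2,000.”

```python
# Weekly minutes per category, transcribed from the survey list above.
WEEKLY_MINUTES = {
    "video games": 10 * 60 + 44,
    "television or film clips": 10 * 60 + 2,
    "social media": 8 * 60 + 14,
    "music": 7 * 60 + 32,
    "homework": 3 * 60 + 25,
    "schoolwork": 2 * 60 + 5,
    "reading for pleasure": 1 * 60 + 14,
    "creating digital content": 52.5,
    "writing for pleasure": 14,
}

LEARNING = {"homework", "schoolwork"}
SCHOOL_WEEKS = 36  # from the text: school is in session 36 weeks per year
YEAR_WEEKS = 52    # assumption: non-school use continues all year


def annual_hours(categories, weeks):
    """Sum the weekly minutes for the given categories and scale to hours per year."""
    weekly = sum(WEEKLY_MINUTES[name] for name in categories)
    return weekly * weeks / 60


learning_hours = annual_hours(LEARNING, SCHOOL_WEEKS)
other_hours = annual_hours(WEEKLY_MINUTES.keys() - LEARNING, YEAR_WEEKS)

print(f"learning:  {learning_hours:.1f} hours/year")  # 198.0
print(f"all other: {other_hours:.1f} hours/year")     # 2021.5
```

If the non-school categories were instead counted over only the 36 school weeks, the second total would drop to roughly 1,400 hours, so the 2,000-hour figure depends on the full-year assumption.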

4. Location. Location. Location.

The hippocampus is the brain’s primary gateway to memory. Essentially, all new information must pass through this neural structure in order to be converted into consciously accessible long-term memory.

Lining the hippocampus are millions of tiny neurons called “place cells.” These cells continuously and subconsciously encode both the spatial layout of whatever objects we are interacting with and our physical relationship to those objects. For instance, if I were to place you in a maze, place cells would map out not only the global pattern of the maze but also your unique location within that pattern as you walked through the maze.

As a result, spatial layout is an integral aspect of all newly formed memories. This is why, when it comes to reading comprehension and retention, hard copy always beats digital.

Print fixes material in an unchanging, permanent three-dimensional location. You may have noticed that after reading from physical media, you can typically recall that a particular passage of interest is “about halfway through the book on the bottom, right-hand page.”

That unvarying location is embedded within our memory and can help trigger relevant content in the future. Digital media have neither a fixed nor a permanent spatial organization. When you scroll through a PDF document, words begin at the bottom of the screen, move to the middle, then disappear off the top. When content has no fixed physical location, we lose a key component of memory and cannot draw on spatial organization as a cue to recall that content later.

Modern e-readers allow users to “flip” (rather than scroll) through the “pages.” Although this is a step in the right direction, it still omits the important third dimension of depth, which allows information to be triangulated unambiguously.

If your primary purpose for reading is not memory (for instance, if you’re searching for key terms), then digital tools will often prove more effective than print. Furthermore, if you have a physical or mental impairment that requires interactive text, then digital tools may be essential.

However, if your aim is learning and if you have the luxury of selecting between different media, then print it out.

5. Use Flashcards. Seriously.

Committing facts and knowledge to memory is the foundation upon which higher-order thinking skills can emerge. Memorization can occur via play, enactment, quizzes, illustrations, construction, and more. However, flashcards that carry a question on one side and the relevant answer on the other remain among the fastest, easiest, and strongest tools for explicit memorization.

They stimulate recall. Memory is constructive: The more we internally access or recall a memory, the deeper, more durable, and more accessible that memory becomes in the future. For instance, the reason I remember Game of Thrones so well is not that I repeatedly watch the show (I’ve seen it only once); it’s that I repeatedly talk about the show, and each time I recall it, my memories become stronger.

With a question-on-front format, flashcards require individuals to internally recall information, thereby leading to deeper memories than simple re-reading or re-viewing of material can foster.

Flashcards also guard against the malleability of memory. Each time we access a memory, we can tweak, change, or amend it for posterity (a problem that has long plagued eyewitness testimony in legal settings). One way to combat this effect is to employ immediate feedback: Seeing the correct information immediately after recall helps keep the memory accurate. With their answer-on-back format, flashcards supply such feedback so we don’t unwittingly change our memories.

Further, flashcards can aid linking. Within the brain, facts become tied together into large, interconnected webs called “schemata.” With such structures in place, recalling a specific fact allows access to all other connected facts within that schema.

Organizing flashcards into thematic, conceptual, or shared-characteristic groups helps individuals form relevant schemata. For this reason, when using a group of flashcards, do not discard the cards you answer correctly; always keep the group together to ensure you are linking relevant ideas.
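The flashcard routine described above can be sketched as a small drill loop. This is an illustrative Python sketch, not a method from the article: the `Card` type, the `drill` function, and the `recall` callback are hypothetical names, and exact string matching stands in for the learner checking the back of the card. It shows the three features the text emphasizes: the question is shown before the answer, the answer is revealed immediately after each attempt, and cards answered correctly stay in the group.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    question: str  # front side: prompts recall
    answer: str    # back side: immediate feedback


def drill(group, recall, rounds=3, seed=None):
    """Quiz every card in one themed group for several rounds.

    recall(question) is a hypothetical callback that returns the learner's
    attempt. Correctly answered cards are NOT removed, so the whole group
    is reviewed together each round. Returns the number correct per round.
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(rounds):
        order = list(group)   # copy: the group itself is never pruned
        rng.shuffle(order)    # vary the order, but keep every card
        correct = 0
        for card in order:
            attempt = recall(card.question)  # 1. recall before seeing the answer
            if attempt.strip().lower() == card.answer.lower():
                correct += 1
            # 2. reveal the answer immediately as feedback
            print(f"Q: {card.question} | you said: {attempt} | answer: {card.answer}")
        scores.append(correct)
    return scores


# Usage: a small themed group of related facts (illustrative content).
capitals = [
    Card("Capital of Sweden?", "Stockholm"),
    Card("Capital of Norway?", "Oslo"),
    Card("Capital of Finland?", "Helsinki"),
]
known = {"Capital of Sweden?": "Stockholm", "Capital of Norway?": "Oslo"}
print(drill(capitals, lambda q: known.get(q, "?"), rounds=2, seed=0))
```

Shuffling within the group varies the order of recall while still keeping every related card in play, which matches the advice to keep the group together rather than retiring cards once they are answered correctly.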

It’s clear that there is no major shortcut to learning. Some crucial brain processes simply can’t be circumvented. As it has done for the Swedes, neuroscience points us to a range of techniques with an established evidence base.

Video Competition

To understand spoken language, the brain relies on the Wernicke/Broca network, a small chain of neurons that processes the meaning of spoken words. Unfortunately, the brain has only one of these networks. We can funnel only one voice through this network at a time and comprehend only one speaker at a time.

Surprisingly, when we read silently, the Wernicke/Broca network activates to the same extent as when we listen to someone speak. The brain processes the silent reading voice in exactly the same manner as an out-loud speaking voice. Accordingly, just as human beings can’t listen to two people speaking simultaneously, neither can we read while listening to someone speak.

This is the basis for the often-discussed “redundancy effect”: Studies show that learning and memory decrease when people are presented with simultaneous text and speech. When captions are present during a video narration, people tend to understand and remember less than those who watch the same video without captions.

There are, of course, special circumstances in which combined captions and narration will not clash and can actually improve learning. One is learning a new language. Similarly, captions can help viewers decode narration, and so aid learning, when audio quality is poor or the speaker has an accent that takes great effort to understand. Captions on video can also aid individuals with specific processing disorders.

Jared Cooney Horvath, Ph.D., M.Ed., is a neuroscientist, educator, and author of Stop Talking, Start Influencing: 12 Insights From Brain Science to Make Your Message Stick.

Submit your response to this story to letters@psychologytoday.com.

Pick up a copy of Psychology Today on newsstands now or subscribe to read the rest of this issue.