Lumping EdTech Under Screentime Can Damage Education

Governments need to distinguish between technology types in regulations.

Posted November 26, 2023 | Reviewed by Gary Drevitch

Key points

- Global discussions on children's screen time oversimplify, hindering effective long-term guidelines.

- A vertical approach to tech evaluation allows for a more precise examination of AI and digitization impacts.

- Sustainable evaluation models involve continuous collaboration between academia, industry, and schools.

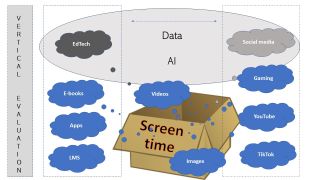

Official screen-time inquiries are occurring in many countries this year. For example, the UK's Education Committee launched an inquiry into how screen time affects education and well-being, and the Norwegian government appointed a committee to make recommendations on children's screen use. While these inquiries are important for fostering reflection, they have limited use in developing long-term, practical guidelines because they lump children's internet use, social-media use, and educational-technology (EdTech) use into one broad "screentime" category. This approach oversimplifies the diverse nature of technologies, neglecting the dynamic ecosystem they form and how children experience them as an interconnected system.

Certainly, various technologies encounter similar challenges arising from their reliance on data, especially with the growing integration of generative AI. Common challenges include the misuse of data for commercial purposes, such as advertising, and the implementation of so-called “sticky designs” that encourage prolonged screen time for children. However, these shared challenges manifest differently for each technology and each child. Therefore, a blanket approach fails to account for the nuanced experiences associated with specific groups of users or activities.

Instead of assessing and establishing rules for children’s technologies horizontally, we should evaluate technologies vertically, according to their distinct characteristics. Once we address each vertical or use case individually, we can then explore its connections to other domains. For instance, while children's use of EdTech and social media cumulatively contributes to their overall screen time, the combination plays out very differently for children from different socio-economic backgrounds. Moreover, the vertical approach allows us to examine more precisely how generative AI and digitization influence each technology within its specific domain.

With this perspective as a foundation, let's explore what we already know about the EdTech vertical, providing a basis for establishing principles for national guidelines.

Ensuring National Quality Assurance for Educational Technology's Impact on Children

The government should assume responsibility for the quality of educational technology provided to schools, similar to the way it certifies foods and pharmaceuticals. EdTech is often sold to schools without any assurance that it genuinely enhances learning. While many educational apps and platforms may save teachers time and even engage children, the essential question is whether they truly improve learning. Schools, which play a crucial role in addressing inequities, have a duty to ensure that the quality of what children receive is consistent and equitable. The current unsatisfactory situation, in which the choice of apps, platforms, and learning devices (such as Chromebooks or iPads) is like a lottery across different districts and individual schools, should be addressed and standardized at the national or federal level.

Quality should be ensured through objective evaluations of impact that are scientifically measured and pedagogically demonstrated. Teacher/researcher/industry collaborations can determine what qualifies for government-recommended lists or "green" lists (as in Austria, for example). Currently, recommendations of resources are managed by national broadcasting services, such as BBC Bitesize in the UK, or released by various non-profit organizations, as in the U.S. These organizations may take a practical perspective and provide an easy-to-understand overview with simple rating systems based on their own rubrics. However, while usability and expert views are important, for classroom use it is crucial to measure actual learning effects using objective scientific methods.

Continuous Evaluation Routines for Quality and Innovation

EdTech evolves alongside technological innovations, and any governmental rules and quality checks must be designed to keep pace with that change. A sustainable model involves collaborations among schools, industry, and academia to maintain and develop quality. This includes testing new features in schools and collecting data in a centralized system for continuous evaluation according to shared standards. While some quality standards exist, they differ by country and are unevenly applied. For an equitable model that fosters innovation in the educational-technology industry, all EdTech products should be part of national evaluations and share data for this purpose. The scientifically developed EdTech Evidence Evaluation Routine (EVER) is a good model for creating evaluation methods that are both scientific and adaptable to individual technology types based on their unique features.

References

Kucirkova, N. I., Livingstone, S., & Radesky, J. S. (2023). Faulty screen time measures hamper national policies: here is a way to address it. Frontiers in Psychology, 14.

Kucirkova, N. (2023). Debate: Response to “Should academics collaborate with digital companies to improve young people's mental health”. Child and Adolescent Mental Health, 28(2), 336-337.

Kucirkova, N., Brod, G., & Gaab, N. (2023). Applying the science of learning to EdTech evidence evaluations using the EdTech Evidence Evaluation Routine (EVER). npj Science of Learning, 8(1), 35.