The Turing Test Is Especially Relevant Today

Our ability to perceive intelligence in machines is key to AI success.

Posted July 31, 2023 | Reviewed by Monica Vilhauer

Key points

- The Turing Test was developed in 1950 as a benchmark for how humans perceive intelligence in machines.

- Given advances in AI, many people have argued that the Turing Test is ineffective and outdated.

- These concerns obscure the importance of psychology in how we perceive humanness in machines.

In late 1950, noted mathematician, code-breaker, and computer scientist Alan Turing proposed a test for answering the question, "Can machines think?"

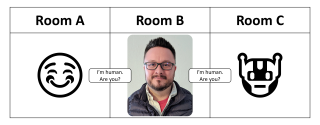

Consider the following scenario, depicted above:

- Agent A (a human) is in one room.

- Agent B (a computer) is in another room.

- You are an interrogator, seated in a room between the two other agents.

Your task? Determine which agent is the human and which is the computer, based only on your interactions with both. In Turing's test, these interactions were restricted to printed text, concealing the agents' "true identities."

With the rapid development of natural language processing programs, and with platforms such as ChatGPT and Google Bard able to provide sophisticated replies to written questions, it is easy to see why critics increasingly question the relevance of the Turing Test. Journalists, technologists, and futurists have suggested that the test is "broken," "outdated," and "far beyond obsolete."

Running through these critiques and others is a recurring point: For many users, generative artificial intelligence systems already pass the Turing Test, and yet these machines fall well short of the self-awareness benchmarks that would classify them as sentient beings. For example, media have reported on the (mis)use of ChatGPT to cheat on exams and written assignments, and on its ability to generate answers good enough to pass law and business school exams. Although the AI-generated answers aren't stellar (Teen Vogue noted that the programs create "cliché essays that almost say nothing," and CNN reported that the passing exam scores were "not particularly high marks"), the fervor alone suggests that AI-generated homework was viewed as indistinguishable from that of human students.

Perceptions vs. Programming

Despite these scenarios, critiques of the Turing Test are misplaced because they confuse perceiving intelligence within a machine with assessing a machine's actual intelligence. The former is a question of psychology; the latter is a question of programming. For Jacquet et al. (2021), a more apt use of the Turing Test is to test the former, as a measure of a machine's perceived humanness.

In psychology, media and communication, and related fields of scholarship, we already have robust frameworks that build on many of the ideas posed by Turing and others. One of the most influential texts for the nascent field of human-machine communication (HMC) is The Media Equation. Published in 1996, the book drew on several original psychological studies conducted by Byron Reeves and the late Clifford Nass, all pointing to the same conclusion: When people use technologies, they treat the machines as if they were human. Their examples included people extending politeness norms to computers (a habit echoed today when people type "please" and "thank you" into Google searches) and responding differently to male- and female-gendered synthetic voices. Scholars have since built on this work through the computers are social actors (CASA) paradigm, which studies the social interactions between humans and machines more directly (Gambino et al., 2020).

Understanding the humanness of machines is especially relevant given the deeply rooted expectations we bring to social interactions. Edwards et al. (2019) coined the notion of a human-to-human interaction script to explain how we approach most conversations. As they noted:

> [P]eople generally assume their interaction partners will be other humans, form expectations and select appropriate social scripts on that assumption and face potential expectancy violations when the partner is instead a machine.

If machines could consistently pass the Turing Test, they would be less likely to violate our human-to-human interaction scripts, and our psychological and social comfort with AI agents would likely increase substantially.

Because many AI developers aim to create machines with a theory of mind, another area of study for human-machine communication is whether machines deserve moral patiency: Can machines be harmed or helped by the actions of others and, if so, do we owe them moral consideration? A series of studies using several different types of social robots shows that people are capable of ascribing moral patiency to machines (Banks, 2021; Banks & Bowman, 2023; Banks et al., 2023). Although this research did not test it directly, we could speculate that machines capable of passing the Turing Test would also be seen as deserving moral patiency, a question that is part of a much deeper debate about robot rights.

Conclusion

Turing (1950) predicted that "... in about fifty years’ time it will be possible to programme computers ... so well that an average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning." He followed this with: "The original question, ‘Can machines think?’ I believe to be too meaningless to deserve discussion."

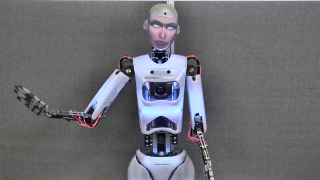

In a sense, even Turing saw his test as a rather modest benchmark: His prediction set the bar at an average interrogator being wrong only about 30 percent of the time after five minutes of questioning. That said, I disagree that the question is meaningless. Instead, I posit, as do others (see French, 2000), that our ability to perceive intelligence (i.e., "humanness") in the machines already around us signals a critical moment in human-machine relations. Rather than scrapping the Turing Test, we might even expand it, in light of advances in social robotics, to include a broader set of communication channels, given that contemporary human-machine interactions are hardly restricted to text prompts.

The Turing Test stands as a simple yet important indicator of the deeper psychological processes at play in our increasingly complex and intertwined relationship with ever more intelligent machines.

References

Banks, J. (2021). From warranty voids to uprising advocacy: Human action and the perceived moral patiency of social robots. Frontiers in Robotics and AI, 8. https://doi.org/10.3389/frobt.2021.670503

Banks, J., & Bowman, N. (2023). Perceived moral patiency of social robots: Explication and scale development. International Journal of Social Robotics, 15, 101–113. https://doi.org/10.1007/s12369-022-00950-6

Banks, J., Koban, K., & Haggadone, B. (2023). Avoiding the abject and seeking the script: Perceived mind, morality, and trust in a persuasive social robot. ACM Transactions on Human-Robot Interaction, 12(3), Article No. 32, 1–24. https://doi.org/10.1145/3572036

Edwards, A., Edwards, C., Westerman, D., & Spence, P. R. (2019). Initial expectations, interactions, and beyond with social robots. Computers in Human Behavior, 90, 308–314. https://doi.org/10.1016/j.chb.2018.08.042

French, R. M. (2000). The Turing Test: The first 50 years. Trends in Cognitive Sciences, 4(3), 115–122. https://doi.org/10.1016/S1364-6613(00)01453-4

Gambino, A., Fox, J., & Ratan, R. A. (2020). Building a stronger CASA: Extending the computers are social actors paradigm. Human-Machine Communication, 1, 71–85. https://search.informit.org/doi/10.3316/INFORMIT.097034846749023

Jacquet, B., Jamet, F., & Baratgin, J. (2021). On the pragmatics of the Turing Test. In 2021 International Conference on Information and Digital Technologies (IDT), Zilina, Slovakia (pp. 123–130). https://doi.org/10.1109/IDT52577.2021.9497570

Turing, A. M. (1950). Computing machinery and intelligence. Mind, LIX(236), 433–460. https://doi.org/10.1093/mind/LIX.236.433