Should Self-Driving Cars Spare People Over Pets?

Is valuing human life over animal life a culturally universal rule?

Posted March 8, 2019

The first death caused by a self-driving car occurred on Sunday, March 18, 2018. Elaine Herzberg was walking down a street in Tempe, Arizona, when an Uber Volvo SUV operating in “fully autonomous mode” slammed into her at 40 miles an hour.

While Uber temporarily suspended its self-driving car trials, autonomous vehicles are now back on the streets of Miami, San Francisco, Pittsburgh, Toronto, and Washington, DC. Autonomous vehicles are essentially computers on wheels programmed to make instantaneous decisions. Sooner or later, some of these decisions will fall into the realm of ethics. Should, for example, cars like the one that killed Elaine Herzberg be programmed to swerve away from an adult pedestrian if it means running over a child... or a dog?

If you are thinking, “Aha! This is the famous trolley problem” you are right. In the classic version, a hypothetical runaway trolley car is careening down the tracks toward a group of five people. But what if you could pull a lever which would send the trolley onto another set of tracks where only one person would be killed? In this situation, most people say pull the lever. In another version of the trolley problem, you need to personally push a fat man onto the tracks to save the five people. In this case, however, most people say no, even though the utilitarian calculus is the same in both situations.

Cognitive scientists typically use the trolley problem to examine the foibles of human moral thinking. But with the development of autonomous vehicles, the trolley problem has suddenly become relevant in real-world situations. Using a variant of the trolley problem, a team of investigators from the MIT Media Lab used crowd-sourcing to conduct what is probably the largest study in the history of psychology (N = 40 million responses). They wanted to find out whether there are widely accepted moral principles that could inform the ethical decision-making of autonomous vehicles. Recently published in the journal Nature (open access), their results also reveal the relative value humans place on the lives of dogs and cats.

The Moral Machine Experiment

The researchers describe the Moral Machine as “a serious game for collecting large-scale data on how citizens would want autonomous vehicles to solve moral problems in the context of unavoidable accidents.” Their Nature article was based on responses by people who played the game between November 2016 and March 2017. (The game is still online, and I recommend you play it yourself. Just go to this site.)

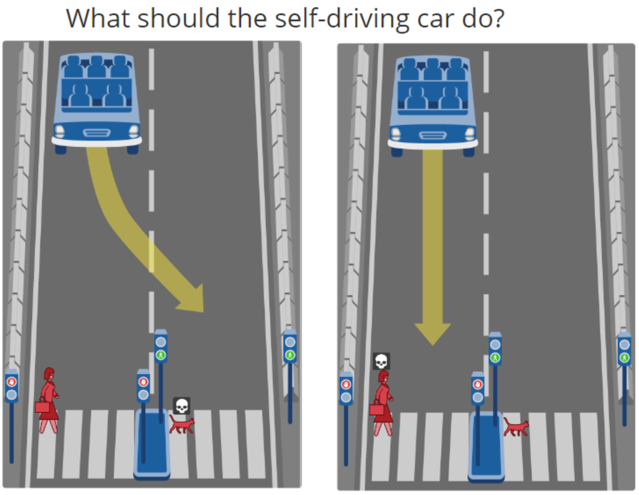

Here’s how it works. A self-driving car with broken brakes has to choose between two options. Your job is to select the best course of action. For example, in the situation shown below, the player has to decide whether it would be better for a self-driving car to swerve into the left lane and kill a cat or continue going straight in the right lane and smash into a woman.

The game has been translated into 10 languages and was originally put online in 2016. To the researchers’ surprise, the Moral Machine Experiment soon went viral, and the resulting paper was based on the decisions of people from 233 countries and territories.

The MIT Media Lab team was primarily interested in nine comparisons that involved prioritizing: humans over pets (cats and dogs), more people over fewer people, passengers in the car over pedestrians, males over females, younger people over older people, law-abiding pedestrians in crosswalks over illegal jaywalkers, physically fit people over the less fit, high-status over lower-status individuals, and finally, taking action by swerving over doing nothing. The researchers also included some additional factors, for example, what if the person in the road was a criminal? They also obtained information on the respondents’ nationality, sex, education, income, and political and religious views.

The Results: Bad News for Dogs, Cats, and Criminals

This graph shows the relative importance millions of people worldwide placed on saving different types of people or animals as compared to an adult man. As you can see at the top of the graph, there were large preferences for autonomous vehicles to spare infants, children and pregnant women over adult men. But now look at the bottom of the graph. The overwhelming consensus was that, with one exception, humans should always be spared at the expense of dogs or cats. The exception was criminals. And even criminals were given priority over cats.
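For readers who like to see the arithmetic, here is a minimal sketch of how a "preference relative to an adult man" score could be computed from forced-choice responses. It is only an illustration: the responses are made-up toy data, the function name `spare_rate_vs_baseline` is hypothetical, and the published analysis is more sophisticated than a simple head-to-head tally.

```python
from collections import defaultdict

# Hypothetical toy responses (NOT data from the study). Each tuple is one
# forced choice: (character spared, character sacrificed).
responses = [
    ("child", "adult man"),
    ("child", "adult man"),
    ("adult man", "child"),
    ("adult man", "dog"),
    ("adult man", "dog"),
    ("adult man", "dog"),
    ("dog", "adult man"),
    ("adult man", "cat"),
    ("adult man", "cat"),
    ("adult man", "cat"),
    ("adult man", "cat"),
    ("cat", "adult man"),
]


def spare_rate_vs_baseline(responses, baseline="adult man"):
    """For each character, return the share of head-to-head choices against
    the baseline in which that character was spared, minus 0.5.

    A positive score means the character was spared more often than the
    baseline; a negative score means the baseline was favored.
    """
    spared = defaultdict(int)
    total = defaultdict(int)
    for winner, loser in responses:
        # Only count choices that pit the baseline against someone else.
        if baseline not in (winner, loser) or winner == loser:
            continue
        other = loser if winner == baseline else winner
        total[other] += 1
        if winner == other:
            spared[other] += 1
    return {c: spared[c] / total[c] - 0.5 for c in total}


preferences = spare_rate_vs_baseline(responses)

# Print characters from most favored to least favored.
for character, score in sorted(preferences.items(), key=lambda kv: -kv[1]):
    print(f"{character:>6}: {score:+.2f}")
```

In this toy data set the child ends up with a positive score and the dog and cat with negative ones, which mirrors the direction of the pattern described above but says nothing about the actual sizes of the effects.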

Cultural Similarities and Differences in Moral Values

The researchers found there were three "essential building blocks of machine ethics," moral principles which were generally agreed upon across most cultures:

- Spare people over animals.

- Spare more lives over fewer lives.

- Spare the young over the old.

While these cultural similarities are interesting, some of the differences between cultures were striking. For example:

- Japanese players generally wanted to spare pedestrians while Chinese respondents favored sparing passengers.

- Individualistic cultures were more willing to spare the young than collectivist cultures.

- Individualistic cultures also had marked preferences for saving greater numbers of individuals compared to collectivist cultures.

- Respondents from poor countries tended to spare jaywalkers more than players from wealthier nations.

- Countries with the greatest economic disparity between the rich and poor also showed the greatest differences in valuing the lives of the well-heeled over, say, the homeless.

- Nearly all nations preferred to spare women over men. However, this difference was more pronounced in countries where women had better prospects for good health and survival.

Geographic Differences in the Desire to Save People Over Pets?

Nations that were geographically close to each other tended to agree on moral machine ethics. The researchers found that national patterns of moral agreement fell into three clusters based on geography. The Western Cluster included North America and most of Europe. The Eastern Cluster included most of Asia and some Islamic nations. Perhaps the most interesting group of nations was the Southern Cluster, which included Central and South America along with France and some French-speaking territories.

Participants from Southern Cluster countries differed from the rest of the world in several ways. They had much higher preferences for sparing the young over the old, women over men, and high-status over low-status individuals. But perhaps most surprising was that individuals in the Southern Cluster were less inclined to save people over pets than respondents in the Western and Eastern Clusters. I was mystified by this finding. After all, dogs and cats are more likely to be pampered in North America and Europe than in Latin America. So I emailed Dr. Azim Shariff, a member of the research team, and asked if he had any explanation for this geographic difference in attitudes towards pets.

He immediately wrote back: "We don't have a good explanation for the Southern pet effect. It's not that they prefer pets to humans; everyone prefers humans to a large degree. It's just less so in the Southern cluster."

Dr. Shariff’s contention that everyone wants to spare humans over animals is consistent with the finding of a 1993 study that used trolley problems to search for universal moral principles. Some of the scenarios involved animals, for example, the decision to sacrifice the last five mountain gorillas on earth to save one man. After testing hundreds of people in several countries, the researchers concluded that the single most powerful universal moral principle was “value human life over the lives of non-human animals.”

There are, however, exceptions. As I described in this Animals and Us post, researchers at Georgia Regents University asked people if they would save a dog or a person if both were in the path of a runaway bus. The vast majority of people they studied said they would save, for example, a foreign tourist, rather than the dog. But their decisions changed dramatically if the subjects were told the dog was their personal pet. Now 30 percent of men and 45 percent of women said they would let the bus run over the foreign stranger if they could save their dog.

Go figure.

References

Awad, E., Dsouza, S., Kim, R., Schulz, J., Henrich, J., Shariff, A., Bonnefon, J.-F., & Rahwan, I. (2018). The Moral Machine experiment. Nature, 563(7729), 59-64.

Petrinovich, L., O'Neill, P., & Jorgensen, M. (1993). An empirical study of moral intuitions: Toward an evolutionary ethics. Journal of Personality and Social Psychology, 64(3), 467.