Neuroscience

Mo' Data, Mo' Problems

Why neuroscience may not be the right place for larger data collection efforts.

Posted April 29, 2013

Rise of Big Data

If you’ve been paying attention to the news, you’ve probably heard all the hubbub about the President’s new Brain Research through Advancing Innovative Neurotechnologies (BRAIN) initiative. This $100 million mandate is designed to foster technological developments that will allow us to record from every living neuron in the brain, preferably all at the same time.

“Wait a minute!” I’ve heard some people say. “Isn’t that what we’re already paying $35 million for with the Human Connectome Project?”

Well no, not really. The Human Connectome Project is designed to map the large, physical connections in the human brain. This is analogous to building a map of all the interstate highways in our head.

The BRAIN initiative, on the other hand, is meant for technologies that will map and record at the neural level. To continue with our roadmap analogy, this is akin to mapping all of the roads in the brain, from residential streets to highways, as well as recording how many people use those roads every millisecond of every day. The goal is to foster more methods like the recently announced CLARITY technique, which makes it possible to see the precise connections between individual neurons across the entire brain and to know what those neurons are.

But there is a similarity between the two. The Human Connectome Project and BRAIN are both so-called “BIG Data” projects (sometimes the BIG is capitalized just to emphasize that there’s a lot of data that needs to be understood). Big Data is a sexy, if often poorly defined, term that basically translates to: “OMG! I will drown in a sea of ambiguous numbers if I try and tackle that problem. Mommmmmmy!!!!!” *

Now, while Big Data is all the rage in fields like computer science and economics, it’s actually old hat to us neuroscientists. We’ve been swimming in “big data” for the last two decades.

In fact, data is not our problem… understanding it is.

Mo’ Data, Mo’ Problems

One of my idols in graduate school was Russ De Valois, a pioneering researcher in visual neuroscience whom I was honored to meet as a student. Russ and his wife Karen, also a respected visual scientist, would regularly host a seminar at their house to discuss recent findings in visual neuroscience. (It usually included food and wine, which is the real reason why I initially signed up to go.) At these discussions, Russ would often complain that “[t]he problem with neuroscience isn’t that we don’t have enough data, it’s that we don’t know what to do with it.”

Unfortunately, not much has changed in the 10 years since I last heard Russ make that complaint. Let’s take a look at the state of “data” in neuroscience, shall we?

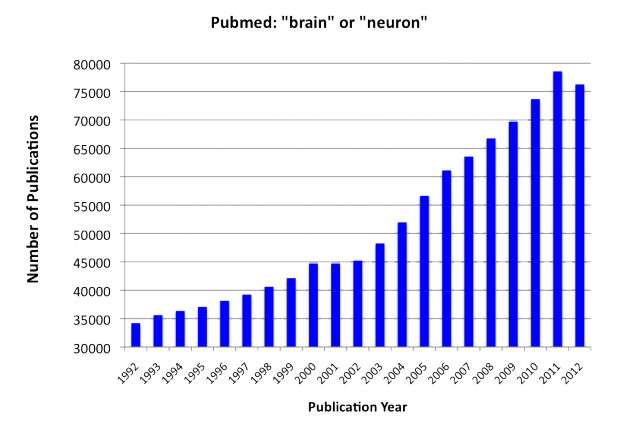

Over the last 20 years the number of published scientific articles that include either the word “brain” or “neuron” has more than doubled, from 34,195 articles in 1992 to 76,251 last year (down from 78,547 in 2011). That’s over a million published scientific articles on the brain over the last 20 years alone!

Graph of the scientific articles listed in PubMed that include either the word "brain" or "neuron".

How do you make sense of all of these empirical findings?

Well, that’s where theoretical neuroscience (aka computational neuroscience) comes into the picture. Theoreticians are tasked with developing rigorous models that try to explain a set of empirical observations. The classic example of theory in neuroscience is Hebbian Learning: “neurons that fire together wire together.” This is still a fundamental concept used in our understanding of how we learn.
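For the curious, here is a minimal sketch of what “fire together, wire together” can look like as an actual rule. It is a toy version of my own, not a model from any particular paper: the function name `hebbian_update`, the learning rate, and the made-up firing patterns are all assumptions chosen for illustration.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Strengthen a connection in proportion to joint pre/post activity."""
    return weight + learning_rate * pre * post


# Hypothetical activity: 1 = the neuron fired in that time step, 0 = silent.
pre_activity = [1, 0, 1, 1, 0]
post_activity = [1, 0, 0, 1, 1]

weight = 0.0
for pre, post in zip(pre_activity, post_activity):
    weight = hebbian_update(weight, pre, post)

# The two neurons fire together in two of the five time steps,
# so the connection is strengthened twice.
print(round(weight, 2))  # prints 0.2
```

The point of the sketch is the payoff of having a theory at all: one short rule lets you predict how a connection should change for firing patterns you have never recorded.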

Using these theories, we can not only explain data we have seen, but predict new data that we haven’t yet collected. These core theories, not to be confused with hypotheses, afford a holistic understanding of how the brain works at a fundamental level.

Remember how the theory of gravity revolutionized the field of physics and eventually took us to the moon? It’s like that.

Unfortunately, only a small minority of neuroscientists are theoreticians. Actually, calling them a “minority” may overestimate their presence. While the number of articles that use the word “theory” has increased 554% over the last 20 years, they still only reflect 1.07% of the entire literature (only 814 articles contained the words “Brain” and “Theory” in 2012).
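If you want to check my arithmetic, the sketch below recomputes the growth in articles and the share of “theory” papers from the counts quoted in this post. The variable names are mine; the counts are the ones given above.

```python
# Article counts quoted in this post.
articles_1992 = 34_195      # "brain" or "neuron", 1992
articles_2012 = 76_251      # "brain" or "neuron", 2012
theory_2012 = 814           # "brain" and "theory", 2012

# How many times larger the 2012 literature is than the 1992 literature.
growth = articles_2012 / articles_1992

# Share of the 2012 literature that mentions "theory", in percent.
theory_share = 100 * theory_2012 / articles_2012

print(f"{growth:.2f}x")         # prints 2.23x
print(f"{theory_share:.2f}%")   # prints 1.07%
```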

Keep in mind, I’m being fairly liberal in my criteria for what counts as a theory paper. This means that many of the studies I’m calling “theoretical” may only be review papers (63 articles in 2012) or articles that talk about empirical data as matching a particular “theory” (say, an fMRI study on the Theory of Mind).

Let me boil this down just to bring the point home: Ninety-nine percent of the million-plus published findings in the field of neuroscience consist of new empirical results.

I can’t use a large enough font to capitalize the “BIG” in Big Data.

Neuroscience, the anti-physics

In a lot of ways, neuroscience is the antithesis of particle physics. Particle physics, including quantum physics, is usually awash in a sea of theory with very few islands of data. This is why centralized research centers like Fermilab and CERN are so sought after by nearly the entire field. Most major universities can afford an MRI machine, but they can’t afford to have a large particle accelerator on campus. So these big, collaborative research programs bring a bounty of data to a field of researchers who are often starved for new empirical information.

But large data collection research initiatives aren’t just useful to fields with limited access to data. The Human Genome Project revolutionized modern biology and medicine by providing a complete map of our genetic blueprints. We are still just beginning to reap the benefits of this successful effort.

The Human Genome Project was useful because the core theory of how sets of deoxyribonucleic acid pairs build proteins and other molecules was already well understood. As soon as they had their DNA road maps, biologists knew how to drive their cars around to get to new places.

Unfortunately, in neuroscience we’re still learning how to drive our cars. As the neurologist Robert Knight is fond of saying, twenty years ago we knew 1% about how the brain works and now we’ve doubled it.

We don’t have core fundamental theories that will allow us to make sense of the data that we will get from large research initiatives like BRAIN and the Human Connectome Project. So we can get our detailed maps of the brain all we want, but they won’t make any more sense to us than they do now.

This isn't to say we haven't started to try to tackle this problem. The development of large-scale meta-analytic tools like brainSCANr, NeuroSynth, and The Cognitive Atlas are all very promising attempts to find common patterns across these massive collections of published data. But they're only a start.

If the President and the federal funding agencies really wanted to find cost-effective ways of understanding the human brain, they would start by providing more infrastructure for building better theories of how the brain works.

We already have a mountain of data; we need to know how to scale it before it gets even bigger.

* If you want a much more succinct description of Big Data, read Nate Silver’s book “The Signal and The Noise.”