
New Neuroprosthetic Is an AI Robotics Breakthrough

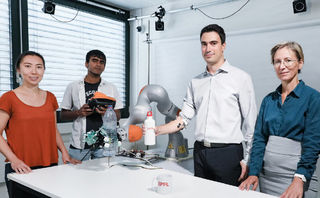

EPFL scientists apply AI to shared human-robot control for higher dexterity.

Posted October 1, 2019 Reviewed by Abigail Fagan

Scientists at EPFL (École polytechnique fédérale de Lausanne) in Switzerland have announced a world first in robotic hand control: a new type of neuroprosthetic that combines human control with artificial intelligence (AI) automation for greater robotic dexterity. They published their research in September 2019 in Nature Machine Intelligence.

Neuroprosthetics (neural prosthetics) are artificial devices that stimulate or enhance the nervous system via electrical stimulation to compensate for deficiencies that impact motor skills, cognition, vision, hearing, communication, or sensory skills. Examples of neuroprosthetics include brain-computer interfaces (BCIs), deep brain stimulation, spinal cord stimulators (SCS), bladder control implants, cochlear implants, and cardiac pacemakers.

The worldwide market for upper-limb prosthetics is expected to exceed 2.3 billion USD by 2025, according to an August 2019 report by Global Market Insights; the same report valued the market at one billion USD in 2018. According to the National Limb Loss Information Center, an estimated two million Americans are amputees, over 185,000 amputations are performed annually, and vascular disease accounts for 82 percent of U.S. amputations.

A myoelectric prosthesis replaces an amputated body part with an externally powered artificial limb that is activated by the user’s existing muscles. According to the EPFL research team, today’s commercial devices can give users a high level of autonomy, but their dexterity is nowhere near that of an intact human hand.

“Commercial devices usually use a two-recording-channel system to control a single degree of freedom; that is, one sEMG channel for flexion and one for extension,” wrote the EPFL researchers in their study. “While intuitive, the system provides little dexterity. People abandon myoelectric prostheses at high rates, in part because they feel that the level of control is insufficient to merit the price and complexity of these devices.”
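The conventional two-channel scheme the researchers describe can be sketched in a few lines. This is a minimal illustration, assuming each sEMG channel has already been reduced to a normalized activation envelope between 0 and 1; the function names, `gain`, and `deadband` are hypothetical and not taken from any commercial device. Note how the two channels collapse into a single signed number, which is why the scheme offers only one degree of freedom.

```python
from typing import Sequence


def two_channel_velocity(flexor_env: float, extensor_env: float,
                         gain: float = 1.0, deadband: float = 0.05) -> float:
    """Map two sEMG envelopes to one signed velocity command.

    Positive output closes the hand (flexion), negative output opens it
    (extension). gain and deadband are hypothetical tuning parameters.
    """
    diff = flexor_env - extensor_env
    # Ignore small differences caused by co-contraction or resting noise.
    if abs(diff) < deadband:
        return 0.0
    return gain * diff


def integrate_closure(flexor_envs: Sequence[float], extensor_envs: Sequence[float],
                      dt: float = 0.01, gain: float = 1.0) -> float:
    """Integrate the velocity command into one closure value clipped to [0, 1]."""
    closure = 0.0  # 0 = fully open, 1 = fully closed
    for f, e in zip(flexor_envs, extensor_envs):
        closure += two_channel_velocity(f, e, gain=gain) * dt
        closure = min(1.0, max(0.0, closure))
    return closure
```

Holding the flexors at 0.6 and the extensors at 0.1 for 100 ticks closes the hand halfway; every finger moves together because there is only one output.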

To address the limited dexterity of myoelectric prostheses, the EPFL researchers took an interdisciplinary approach in this proof-of-concept study, combining neuroengineering, robotics, and artificial intelligence to semi-automate part of the motor command, resulting in “shared control.”

Silvestro Micera, EPFL’s Bertarelli Foundation Chair in Translational Neuroengineering and Professor of Bioelectronics at Scuola Superiore Sant’Anna in Italy, believes this shared approach to controlling robotic hands could improve the clinical impact and usability of a wide range of neuroprosthetic applications, such as brain-to-machine interfaces (BMIs) and bionic hands.

“One reason why commercial prostheses more commonly use classifier-based decoders instead of proportional ones is because classifiers more robustly remain in a particular posture,” wrote the researchers. “For grasping, this type of control is ideal to prevent accidental dropping but sacrifices user agency by restricting the number of possible hand postures. Our implementation of shared control allows for both user agency and grasping robustness. In free space, the user has full control over hand movements, which also allows for volitional pre-shaping for grasping.”
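To make the trade-off concrete, here is a simplified sketch contrasting the two decoder styles. It assumes the user’s intent has already been decoded into a continuous flexion estimate per finger; a real classifier would work from EMG features directly, and the posture templates and function names below are hypothetical. The classifier can only ever output one of its stored postures, while the proportional decoder passes intermediate hand shapes through.

```python
from typing import Dict, List

# Hypothetical posture templates: normalized flexion of
# [thumb, index, middle, ring, little], 0 = extended, 1 = fully flexed.
POSTURES: Dict[str, List[float]] = {
    "open":  [0.0, 0.0, 0.0, 0.0, 0.0],
    "power": [0.8, 0.9, 0.9, 0.9, 0.9],
    "pinch": [0.7, 0.7, 0.0, 0.0, 0.0],
}


def proportional_output(decoded: List[float]) -> List[float]:
    """Proportional control: pass the continuous estimate through, clipped to [0, 1]."""
    return [min(1.0, max(0.0, v)) for v in decoded]


def classifier_output(decoded: List[float]) -> str:
    """Classifier-style control: snap to the nearest stored posture."""
    def sq_dist(a: List[float], b: List[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(POSTURES, key=lambda name: sq_dist(decoded, POSTURES[name]))
```

For example, a half-closed two-finger shape such as `[0.4, 0.4, 0.0, 0.0, 0.0]` is snapped to `"pinch"` by the classifier but kept as-is by the proportional decoder. The snapping is what makes a classifier robust during a grasp, and also what limits the user to a fixed menu of postures.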

In this study, the EPFL researchers focused on designing the software algorithms. The robotic hardware, provided by external parties, consisted of an Allegro Hand mounted on a KUKA IIWA 7 robot, an OptiTrack camera system, and TEKSCAN pressure sensors.

The EPFL scientists built a kinematic proportional decoder around a multilayer perceptron (MLP) that learns to interpret the user’s intention and translate it into finger movements on an artificial hand. An MLP is a feedforward artificial neural network that is typically trained using backpropagation. Information moves forward through the network in one direction, from input to output, rather than in a cycle or loop.
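The forward pass of such a decoder can be sketched in plain Python. This is an illustration under stated assumptions, not the EPFL network: the input size (8 sEMG feature channels), hidden size (16 units), tanh activation, linear output layer, and the `MLPDecoder` class are all hypothetical, and the weights are random rather than trained. It shows the two properties described above: data flows only forward through the layers, and the output is a continuous (proportional) value per finger rather than a discrete class.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def dense(x: List[float], W: Matrix, b: List[float]) -> List[float]:
    """Fully connected layer: y = W x + b."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Tuple[Matrix, List[float]]:
    """Small uniform random weights and zero biases (untrained)."""
    scale = 1.0 / math.sqrt(n_in)
    W = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out


class MLPDecoder:
    """Hypothetical one-hidden-layer MLP: sEMG features in, finger positions out."""

    def __init__(self, n_channels: int, n_hidden: int, n_fingers: int, seed: int = 0):
        rng = random.Random(seed)
        self.W1, self.b1 = init_layer(n_channels, n_hidden, rng)
        self.W2, self.b2 = init_layer(n_hidden, n_fingers, rng)

    def forward(self, features: List[float]) -> List[float]:
        # Input -> hidden: information flows strictly forward, with no loops.
        hidden = [math.tanh(h) for h in dense(features, self.W1, self.b1)]
        # Hidden -> output: linear layer, one continuous estimate per finger.
        return dense(hidden, self.W2, self.b2)
```

Usage: `MLPDecoder(n_channels=8, n_hidden=16, n_fingers=5).forward([0.1] * 8)` returns five numbers, one per finger. Training would adjust `W1`, `b1`, `W2`, and `b2` so that these outputs match recorded finger positions.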

The algorithm is trained on data recorded while the user performs a series of hand movements. For faster convergence, the researchers fitted the network weights with the Levenberg–Marquardt method instead of gradient descent. Training the full model took less than 10 minutes per subject, which makes the algorithm practical for clinical use.
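Levenberg–Marquardt solves a damped version of the Gauss–Newton normal equations, `(JᵀJ + λI)·δ = Jᵀr`, where `J` is the Jacobian of the model outputs with respect to the weights, `r` is the residual vector, and `λ` is a damping factor adjusted after every step. A large `λ` makes the update behave like a small gradient-descent step; a small `λ` makes it behave like Gauss–Newton, which converges much faster near a minimum. The toy sketch below fits a single tanh unit with two parameters, `y = a·tanh(b·x)`, rather than the researchers’ full network; the model, starting values, and damping schedule (divide or multiply `λ` by 10) are assumptions for illustration.

```python
import math
from typing import List, Tuple


def model(a: float, b: float, x: float) -> float:
    """A single tanh unit: the smallest possible 'network'."""
    return a * math.tanh(b * x)


def sse(a: float, b: float, xs: List[float], ys: List[float]) -> float:
    """Sum of squared residuals."""
    return sum((y - model(a, b, x)) ** 2 for x, y in zip(xs, ys))


def levenberg_marquardt(xs: List[float], ys: List[float], a0: float = 1.0,
                        b0: float = 1.0, lam: float = 1e-2,
                        iters: int = 100) -> Tuple[float, float, float]:
    a, b = a0, b0
    cost = sse(a, b, xs, ys)
    for _ in range(iters):
        # Accumulate J^T J (2x2) and J^T r (length 2).
        jtj = [[0.0, 0.0], [0.0, 0.0]]
        jtr = [0.0, 0.0]
        for x, y in zip(xs, ys):
            t = math.tanh(b * x)
            j = (t, a * x * (1.0 - t * t))  # d model/d a, d model/d b
            r = y - a * t
            for i in range(2):
                jtr[i] += j[i] * r
                for k in range(2):
                    jtj[i][k] += j[i] * j[k]
        # Damped normal equations (J^T J + lam*I) delta = J^T r, via Cramer's rule.
        m00, m01 = jtj[0][0] + lam, jtj[0][1]
        m10, m11 = jtj[1][0], jtj[1][1] + lam
        det = m00 * m11 - m01 * m10
        da = (m11 * jtr[0] - m01 * jtr[1]) / det
        db = (m00 * jtr[1] - m10 * jtr[0]) / det
        new_cost = sse(a + da, b + db, xs, ys)
        if new_cost < cost:
            a, b, cost = a + da, b + db, new_cost
            lam /= 10.0  # step helped: trust the Gauss-Newton direction more
        else:
            lam *= 10.0  # step hurt: fall back toward small gradient-descent steps
        if cost < 1e-12:
            break
    return a, b, cost
```

With noise-free data generated from `a = 2.0, b = 0.5` on `x` values from -4 to 4, the fit recovers those values (up to the sign symmetry `a·tanh(b·x) = (-a)·tanh(-b·x)`). The first undamped step overshoots, is rejected, and the damping grows until a step lowers the error; this automatic switching is what lets the method converge in few iterations.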

“For an amputee, it’s actually very hard to contract the muscles many, many different ways to control all of the ways that our fingers move,” said Katie Zhuang at the EPFL Translational Neural Engineering Lab, who was the first author of the research study. “What we do is we put these sensors on their remaining stump, and then record them and try to interpret what the movement signals are. Because these signals can be a bit noisy, what we need is this machine learning algorithm that extracts meaningful activity from those muscles and interprets them into movements. And these movements are what control each finger of the robotic hands.”
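Zhuang describes extracting meaningful activity from noisy muscle signals. The article does not detail the EPFL preprocessing, so the sketch below shows one common way to do this step, under that assumption: remove the DC offset, full-wave rectify, and smooth with a moving average. The `emg_envelope` function and its `window` length are hypothetical; real pipelines usually also band-pass filter the raw signal first.

```python
from typing import List


def emg_envelope(raw: List[float], window: int = 50) -> List[float]:
    """Turn a raw sEMG trace into a smooth activation envelope.

    Steps: subtract the mean (DC offset), take the absolute value
    (full-wave rectification), then average over a sliding window of
    `window` samples. The window length is a hypothetical choice.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    if not raw:
        return []
    mean = sum(raw) / len(raw)
    rectified = [abs(s - mean) for s in raw]
    envelope: List[float] = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            # Drop the sample that just left the window.
            running -= rectified[i - window]
        # Average over however many samples are in the window so far.
        envelope.append(running / min(i + 1, window))
    return envelope
```

The resulting envelope is a slowly varying, non-negative signal per electrode, the kind of input a decoder such as the MLP described below can map to finger movements.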

Because the machine’s predictions of finger movements may not be 100 percent accurate, the EPFL researchers added robotic automation that lets the artificial hand automatically start closing around an object once initial contact is made. To release the object, the user simply attempts to open the hand; this turns off the robotic controller and puts the user back in control of the hand.
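The hand-over between user and robot amounts to a small state machine. The sketch below is a hypothetical illustration of that logic, not EPFL’s controller: the `SharedController` class, the contact and release thresholds, and the single scalar “closure” command are all assumptions, and in automatic mode it simply commands full closure, a simplification of closing around the object.

```python
from enum import Enum, auto


class Mode(Enum):
    USER = auto()        # the user's decoded commands drive the hand
    AUTO_GRASP = auto()  # the robotic controller closes and holds the grasp


class SharedController:
    """Hypothetical sketch of the user/robot hand-over logic."""

    def __init__(self, contact_threshold: float = 0.1, release_level: float = 0.2):
        self.mode = Mode.USER
        self.contact_threshold = contact_threshold
        self.release_level = release_level

    def step(self, user_command: float, contact_pressure: float) -> float:
        """Return the closure command (0 = open, 1 = closed) for one control tick.

        user_command: decoded closure intent from the user's sEMG (0..1).
        contact_pressure: summed fingertip pressure (arbitrary units).
        """
        if self.mode is Mode.USER:
            if contact_pressure <= self.contact_threshold:
                return user_command  # free space: the user has full control
            self.mode = Mode.AUTO_GRASP  # first contact: automation takes over
        # In AUTO_GRASP, an attempt to open the hand hands control back.
        if user_command < self.release_level:
            self.mode = Mode.USER
            return user_command
        return 1.0  # simplification: close fully around the object
```

In free space the user’s command passes straight through, which preserves volitional pre-shaping; once contact is detected the controller holds the grasp regardless of decoding noise, until the user tries to open the hand.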

According to Aude Billard, who leads EPFL’s Learning Algorithms and Systems Laboratory, the robotic hand can react within 400 milliseconds. “Equipped with pressure sensors all along the fingers, it can react and stabilize the object before the brain can actually perceive that the object is slipping,” said Billard.
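The article does not say how the controller detects slip. As a hypothetical sketch, assuming slip shows up as a sudden drop in fingertip pressure relative to its recent peak, a reflex could tighten the grip as soon as that drop exceeds a threshold. The `SlipReflex` class, window length, drop fraction, and grip boost are all illustrative assumptions.

```python
from collections import deque
from typing import Deque


class SlipReflex:
    """Hypothetical slip reflex: tighten the grip when pressure drops quickly."""

    def __init__(self, window: int = 5, drop_fraction: float = 0.2, boost: float = 0.1):
        # Keep only the most recent `window` pressure samples.
        self.history: Deque[float] = deque(maxlen=window)
        self.drop_fraction = drop_fraction
        self.boost = boost

    def update(self, pressure: float, grip: float) -> float:
        """Return an adjusted grip command (0..1) given the newest pressure sample."""
        self.history.append(pressure)
        peak = max(self.history)
        if peak > 0 and (peak - pressure) / peak > self.drop_fraction:
            # Pressure fell sharply relative to the recent peak: treat it as slip.
            return min(1.0, grip + self.boost)
        return grip
```

Because the check runs on every sensor sample, its reaction time is bounded by the sampling and actuation rate rather than by the user’s perception, which is the advantage Billard describes.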

By applying artificial intelligence to neuroengineering and robotics, the EPFL scientists have demonstrated a new approach to shared control between machine and user intention, an advance in neuroprosthetic technology.

Copyright © 2019 Cami Rosso All rights reserved.

References

Zhuang, Katie Z., Sommer, Nicolas, Mendez, Vincent, Aryan, Saurav, Formento, Emanuele, D’Anna, Edoardo, Artoni, Fiorenzo, Petrini, Francesco, Granata, Giuseppe, Cannaviello, Giovanni, Raffoul, Wassim, Billard, Aude, Micera, Silvestro. “Shared human–robot proportional control of a dexterous myoelectric prosthesis.” Nature Machine Intelligence. 11 September 2019.