
Why Does Size Matter in Large Language Models?

When AI language models reach a certain size, architecture may matter more.

Updated January 3, 2024. Reviewed by Lybi Ma.

Key points

- The size of language models may matter less than their psychology.

- The key psychological component is reflection: backing up from an initial conclusion to consider more reasons.

- Whether human or machine, our reasoning strategies may be more important than our cognitive capacities.

Why have language models become so impressive? Many people say it's the size of the models. The "large" in "large language models" has been thought to be key to their success: as the number of parameters in a model increases, so does its performance.

Size Matters, But Why?

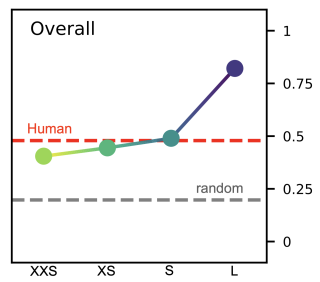

There's plenty of evidence that size matters when it comes to the performance of language models. For example, Tiwalayo Eisape and colleagues (2023) found that as PaLM 2 increased in size from XXS to L, its performance on a syllogistic reasoning task improved, eventually surpassing human performance (Figure 2).

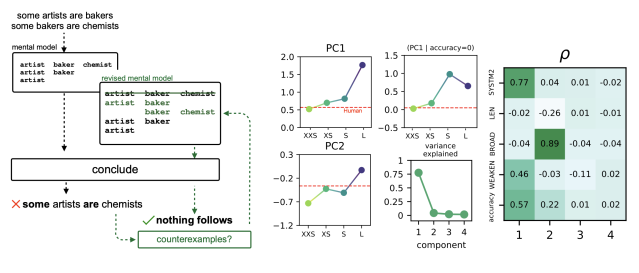

But the more interesting question is not whether size matters to the performance of language models, but why it matters. Fortunately, Eisape and colleagues addressed this question as well. Using principal component analysis, they examined which aspects of the models' behavior explained the most variance. One principal component explained most of the variance (77 percent): whether the model reconsidered the conclusion and searched for counterevidence (PC1 in Figure 7).
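For readers unfamiliar with "variance explained": a principal component's share of the variance is its eigenvalue divided by the sum of all eigenvalues of the covariance matrix, and that sum equals the total variance (the trace). The sketch below computes this share for the first principal component using only Python's standard library. It is a minimal illustration under stated assumptions: the feature rows are hypothetical toy scores, not Eisape and colleagues' data, and the function names are made up for this example rather than taken from their analysis.

```python
import math
import random

def covariance_matrix(rows):
    """Sample covariance matrix for a list of equal-length feature vectors."""
    n, k = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(k)]
    centered = [[row[j] - means[j] for j in range(k)] for row in rows]
    return [
        [sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(k)]
        for a in range(k)
    ]

def largest_eigenvalue(matrix, iterations=500, seed=0):
    """Largest eigenvalue of a symmetric positive semidefinite matrix via power iteration."""
    k = len(matrix)
    rng = random.Random(seed)
    # A random start vector is almost surely not orthogonal to the top eigenvector.
    vec = [rng.gauss(0.0, 1.0) for _ in range(k)]
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [sum(matrix[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in product))
        if norm == 0.0:
            return 0.0  # zero matrix: every eigenvalue is 0
        vec = [x / norm for x in product]
        # Rayleigh quotient v^T M v for the unit vector v estimates the eigenvalue.
        eigenvalue = sum(
            vec[i] * sum(matrix[i][j] * vec[j] for j in range(k)) for i in range(k)
        )
    return eigenvalue

def variance_explained_by_pc1(rows):
    """Share of total variance captured by the first principal component."""
    cov = covariance_matrix(rows)
    total_variance = sum(cov[i][i] for i in range(len(cov)))  # trace = sum of eigenvalues
    return largest_eigenvalue(cov) / total_variance

# Hypothetical per-response scores on three features that tend to move together.
toy_rows = [
    [0.9, 0.8, 0.7],
    [0.2, 0.3, 0.1],
    [0.6, 0.5, 0.6],
    [0.1, 0.2, 0.2],
    [0.8, 0.9, 0.8],
]
print(f"PC1 explains {variance_explained_by_pc1(toy_rows):.0%} of the variance")
```

Rows whose features are perfectly correlated yield a value close to 1.0, while two uncorrelated features with equal variance yield 0.5. In practice, a numerical library routine (for example, an eigendecomposition or SVD) would usually replace the hand-rolled power iteration.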

For more than a decade, philosophers and scientists have considered these two factors, reconsidering a conclusion and searching for counterevidence, to be core features of reflective or "System 2" thinking (Kahneman 2011; Korsgaard 1996; Shea and Frith 2016; Byrd 2021, 2022). One way for machines to overcome faulty responses may be what seems to work for humans: stepping back from the initial impulse to consider more reasons (Bellini-Leite 2023; Byrd and colleagues 2023).
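The "step back and consider more reasons" strategy can be illustrated with a toy generate-then-check loop. The sketch below uses the well-known bat-and-ball puzzle (a bat and a ball cost $1.10 together, and the bat costs $1.00 more than the ball; how much does the ball cost?), working in cents to avoid floating-point error. The function names are hypothetical, and this is only an analogy for reflective reasoning, not a description of how any language model or any of the cited studies implements it.

```python
def intuitive_answer():
    """The quick, impulsive answer: subtract $1.00 from $1.10."""
    return 110 - 100  # 10 cents

def satisfies_constraints(ball_cents):
    """Check a candidate ball price against both facts stated in the puzzle."""
    bat_cents = ball_cents + 100          # the bat costs $1.00 more than the ball
    return bat_cents + ball_cents == 110  # together they cost $1.10

def reflective_answer(initial_cents):
    """Step back from the initial answer: keep it only if it survives a check."""
    if satisfies_constraints(initial_cents):
        return initial_cents
    # The initial answer failed, so consider other candidates until one fits.
    for candidate in range(0, 111):
        if satisfies_constraints(candidate):
            return candidate
    return None  # no whole-cent price satisfies the puzzle

first_guess = intuitive_answer()
print(first_guess, satisfies_constraints(first_guess))  # 10 False
print(reflective_answer(first_guess))                   # 5
```

The check does the real work here: the intuitive answer of 10 cents would make the total $1.20, so the reflective step rejects it and keeps searching until it finds 5 cents.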

Does Psychology Matter as Much as Size?

If the benefits of increasing the size of a language model are largely explained by the model's engagement in reflective reasoning, then can smaller language models perform as well as larger models if we design the smaller models to think more reflectively?

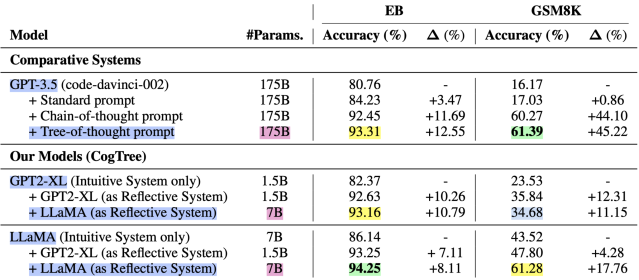

Junbing Yan and colleagues (2023) compared the performance of multiple language models on two benchmarks. The models differed in two key ways: their size (the number of parameters) and their psychology (whether the model included only an intuitive system or also a reflective system). Yan and colleagues' Table 3 shows that psychology trumped size. Adding a reflective system to a small language model allowed it to outperform a language model with 25 times more parameters. Even when that larger model used more advanced prompting techniques, the smaller models with a reflective system could keep up.

Conclusion

These results do more than confirm the diminishing returns from increasing the size of large language models. They also suggest that, even before language models became as large as they are today, their psychology may have mattered more than their size. If so, AI companies may need to compete on psychological architecture rather than size. For psychology, this serves as a reminder that our reasoning strategies may be at least as important as other cognitive factors, such as cognitive capacity (Thompson and Markovits 2021).

References

Bellini-Leite, S. C. (2023). Dual Process Theory for Large Language Models: An overview of using Psychology to address hallucination and reliability issues. Adaptive Behavior, 10597123231206604. https://doi.org/10.1177/10597123231206604

Byrd, N. (2021). Reflective Reasoning & Philosophy. Philosophy Compass, 16(11), e12786. https://doi.org/10.1111/phc3.12786

Byrd, N. (2022). Bounded Reflectivism & Epistemic Identity. Metaphilosophy, 53(1), 53–69. https://doi.org/10.1111/meta.12534

Byrd, N., Joseph, B., Gongora, G., & Sirota, M. (2023). Tell Us What You Really Think: A Think Aloud Protocol Analysis of the Verbal Cognitive Reflection Test. Journal of Intelligence, 11(4). https://doi.org/10.3390/jintelligence11040076

Eisape, T., Tessler, M. H., Dasgupta, I., Sha, F., van Steenkiste, S., & Linzen, T. (2023). A Systematic Comparison of Syllogistic Reasoning in Humans and Language Models (arXiv:2311.00445). arXiv. https://doi.org/10.48550/arXiv.2311.00445

Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux. https://amzn.to/41IlGVW

Korsgaard, C. M. (1996). The Sources of Normativity. Cambridge University Press. https://amzn.to/3S3GLXv

Shea, N., & Frith, C. D. (2016). Dual-process theories and consciousness: The case for ‘Type Zero’ cognition. Neuroscience of Consciousness, 2016(1). https://doi.org/10.1093/nc/niw005

Thompson, V. A., & Markovits, H. (2021). Reasoning strategy vs cognitive capacity as predictors of individual differences in reasoning performance. Cognition, 217, 104866. https://doi.org/10.1016/j.cognition.2021.104866

Yan, J., Wang, C., Zhang, T., He, X., Huang, J., & Zhang, W. (2023). From Complex to Simple: Unraveling the Cognitive Tree for Reasoning with Small Language Models. In H. Bouamor, J. Pino, & K. Bali (Eds.), Findings of the Association for Computational Linguistics: EMNLP 2023 (pp. 12413–12425). Association for Computational Linguistics. https://doi.org/10.18653/v1/2023.findings-emnlp.828