
The Large Language Laboratory: AI as Scientist and Subject

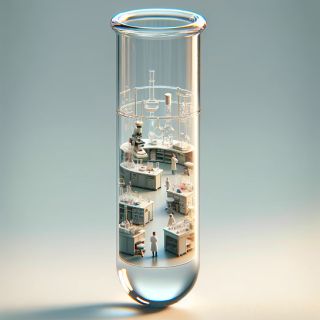

Virtual laboratories are where human interactions can be simulated and studied.

Posted April 24, 2024 | Reviewed by Davia Sills

Key points

- A new study uses LLMs as both scientists and subjects to redefine the role of AI in science.

- Effective prompting, refined iteratively, is crucial for extracting insights from LLMs.

- LLMs serve as virtual labs for rapid, ethical experimentation in various fields.

As we push harder to use and understand artificial intelligence (AI), the untapped potential of large language models (LLMs) is becoming increasingly apparent. Trained on vast troves of data, these models hold a wealth of knowledge that extends well beyond their ability to generate human-like text. Much of this knowledge, however, remains dormant, waiting to be unlocked and put to practical use. A new paper presents an approach to extracting this latent knowledge, casting LLMs as both scientists and subjects through Structural Causal Models (SCMs) and carefully crafted prompts.

The Unique Duality of LLMs as Scientists and Subjects

The paper by Manning et al. proposes a shift in how we interact with and learn from LLMs. By pairing LLMs with SCMs, a framework from causal inference that represents variables and the cause-and-effect relationships among them, the researchers build virtual laboratories where complex human interactions and decision-making can be simulated and studied under controlled conditions. In this approach, LLMs play two roles: scientists, who design and execute the experiments, and subjects, who participate in the simulated scenarios.

This dual role opens new avenues for understanding the hidden dynamics and biases that shape real-world outcomes. For instance, the paper demonstrates how an LLM can simulate a bail hearing, with the model acting as the judge, defendant, defense attorney, and prosecutor. By systematically varying factors such as the defendant's criminal history, the judge's caseload, and the defendant's level of remorse, the researchers measure how each of these variables influences the final bail amount. The approach draws out implicit knowledge from the LLM's training data and sheds light on the interplay of factors that may contribute to judicial decision-making.
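The "systematically varying factors" step is, at its core, a factorial experiment: run the simulation under every combination of factor levels, then compare outcomes. The sketch below illustrates only that step and is not the authors' code. It assumes each factor is coded 0/1, and it replaces the actual multi-agent LLM simulation with a hypothetical `simulate_bail_hearing` stub whose coefficients are invented purely so the example runs. Each factor's effect is estimated as a simple difference in means, one reasonable choice for a balanced design; a regression would be a natural alternative.

```python
from itertools import product
from statistics import mean

# Hypothetical factors, coded 0/1 for simplicity (the paper's own
# variables and levels may differ).
FACTORS = ["criminal_history", "judge_caseload", "remorse"]


def simulate_bail_hearing(criminal_history: int, judge_caseload: int, remorse: int) -> float:
    """Hypothetical stand-in for a multi-agent LLM simulation.

    A real pipeline would prompt an LLM to play judge, defendant, defense
    attorney, and prosecutor, then parse the bail amount the judge sets.
    These coefficients are invented and carry no empirical meaning.
    """
    return 5000.0 + 10000.0 * criminal_history + 1000.0 * judge_caseload - 2000.0 * remorse


def run_factorial_experiment(replicates: int = 1) -> list[tuple[dict[str, int], float]]:
    """Run the simulation once per replicate for every combination of levels."""
    results = []
    for levels in product([0, 1], repeat=len(FACTORS)):
        condition = dict(zip(FACTORS, levels))
        for _ in range(replicates):
            results.append((condition, simulate_bail_hearing(**condition)))
    return results


def main_effects(results: list[tuple[dict[str, int], float]]) -> dict[str, float]:
    """Estimate each factor's effect as mean(outcome | 1) - mean(outcome | 0).

    In a balanced full factorial, every level of one factor is paired with
    the same mix of the other factors, so this difference isolates its
    average effect on the outcome.
    """
    effects = {}
    for factor in FACTORS:
        high = [y for cond, y in results if cond[factor] == 1]
        low = [y for cond, y in results if cond[factor] == 0]
        effects[factor] = mean(high) - mean(low)
    return effects


if __name__ == "__main__":
    for name, effect in main_effects(run_factorial_experiment()).items():
        print(f"{name}: {effect:+,.0f}")
```

Because a real LLM's answers vary from run to run, an actual experiment would use `replicates` greater than one per condition; the deterministic stub only needs a single run per cell.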

The Power of Prompting

While SCMs provide a structured framework for exploring the latent knowledge within LLMs, the approach's effectiveness hinges on prompting: carefully crafting the input text that guides the LLM toward specific tasks or outputs. When LLMs act as scientists and subjects, prompting determines whether the model exhibits the desired behaviors and yields relevant insights.

The study also highlights the importance of prompts that are both specific and open-ended, allowing the LLM to showcase its knowledge while leaving it room to generate novel insights. In the bail hearing scenario, for example, the researchers' prompts give the LLM the necessary context and roles while encouraging it to explore each character's motivations and decision-making.
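One way to picture a prompt that is "both specific and open-ended" is a template with a fixed, factual part and an open closing instruction. The sketch below is purely illustrative: `build_role_prompt`, the `SCENARIO` text, and the attribute wording are hypothetical and are not the prompts used in the paper. The fixed part pins down the scenario, role, and case facts; the final instruction leaves the model free to reason in character.

```python
# Hypothetical scenario and roles; wording is illustrative, not the paper's prompts.
SCENARIO = "A bail hearing in a criminal case."
ROLES = ("judge", "defendant", "defense attorney", "prosecutor")


def build_role_prompt(role: str, scenario: str, attributes: dict[str, str]) -> str:
    """Compose a prompt for one simulated participant.

    The scenario, role, and attribute values are the specific part that keeps
    the simulation controlled; the closing instruction is the open-ended part.
    """
    if role not in ROLES:
        raise ValueError(f"unknown role: {role!r}")
    facts = "\n".join(f"- {key}: {value}" for key, value in attributes.items())
    return (
        f"Scenario: {scenario}\n"
        f"You are the {role}.\n"
        f"Facts of the case:\n{facts}\n"
        "Stay in character. Explain your reasoning and motivations in your own "
        "words before stating what you say or decide."
    )


if __name__ == "__main__":
    # Only the value of the varied factor changes between conditions.
    for demeanor in ("expresses remorse", "shows no remorse"):
        print(build_role_prompt(
            "defendant",
            SCENARIO,
            {"criminal history": "two prior convictions", "demeanor": demeanor},
        ))
        print("---")
```

Holding the template's wording fixed within an experiment and changing only the attribute values is what allows differences in outcomes to be attributed to the varied factor; iterative prompt refinement would then happen between pilot runs rather than within a single experiment.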

Moreover, the study emphasizes that prompt engineering is iterative: researchers continually refine their prompts based on the LLM's responses and the insights gleaned from each simulation. This refinement lets researchers probe deeper into the LLM's latent knowledge, uncover hidden biases, and identify potential interventions to mitigate their impact.

Large Language Laboratories

The approach has far-reaching implications across various domains. In fields such as social science, economics, and psychology, this methodology could revolutionize the way we conduct experiments and test hypotheses. By leveraging LLMs as virtual laboratories, researchers can rapidly iterate on experimental designs, explore counterfactuals, and uncover insights that might be difficult or unethical to obtain through traditional human subject research.

Furthermore, the ability to extract and operationalize the dormant knowledge within LLMs has significant potential for real-world applications. Businesses can use these techniques to gain deeper insights into consumer behavior, optimize decision-making processes, and develop more personalized and effective AI-powered solutions. By uncovering and addressing the biases embedded within LLMs, we can also work towards building AI systems that better reflect the diversity of human experiences.

The Emergence of the "In-silico" Experiment

Looking ahead, future research could expand the range of scenarios and domains to which this approach is applied, refine the prompting techniques to elicit more nuanced insights, and develop new tools and frameworks that streamline the integration of SCMs and LLMs. As we continue to push the boundaries of what is possible with AI, the ability to harness the latent knowledge within LLMs is likely to play an important role in shaping the future of technology and society.

This study serves as a call for researchers and practitioners alike to recognize the untapped potential within LLMs and to actively seek out new ways to extract and utilize this dormant knowledge. By embracing the power of SCMs and prompting techniques, we can transform these language models into invaluable tools for scientific discovery, business innovation, and societal progress. The hidden gems within LLMs await our discovery, and the key to unlocking them lies in our ability to ask the right questions and design the right prompts.