Illusory Truth Effect

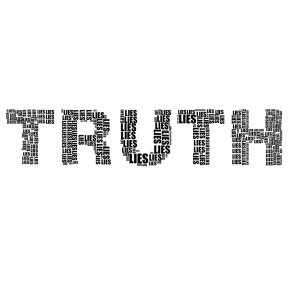

The illusory truth effect is the tendency for any statement that is repeated frequently—whether it is factually true or not, whether it is even plausible or not—to acquire the ring of truth. Studies show that repetition increases the perception of validity—even when people start out knowing that the information is false, or when the source of the information is known to be suspect.

The illusory truth effect was first established in a series of psychological studies reported in 1977. Under controlled conditions, on a series of tests given weeks apart, researchers found that each time a statement was repeated, whether true or false, participants’ confidence in its validity rose, while ratings of statements that appeared on only one test did not change. Many studies since have replicated the initial findings under an array of conditions.

The illusory truth effect can create cultural memes and misconceptions, such as the widely held belief that we only use 10 percent of our brains.

Because it can make false information appear true, it can also be used to deliberately manipulate the thinking of others. It is a useful tool for political propaganda. It can serve as an incentive for bad actors—commonly political or ideological extremists—to deliberately spread misinformation for their own goals.

The deliberate spread of misinformation by individuals or groups, especially by way of the internet, is known as dark participation. There is much evidence that bad actors use it to foment discord and doubt about policies, leadership, and other important matters in populations approaching important decisions.

When it comes to human judgment, we are influenced by more than just the informational value of content. Among other factors, we are swayed by our experience of information processing. Repeated statements, whether they are factually true or false, are easier to process—they create processing fluency, which lends them validity.

The illusory truth effect does not hinge on whether the repetitions occur moments apart or weeks apart. Above and beyond the declarative information in a statement, processing ease is itself a piece of information we use to evaluate the truth of claims.

We infer truth from fluency of processing because, in the natural world, truth and fluency are correlated. Processing fluency is so equated with the truth that the illusory truth effect can occur with other facilitators of fluency in the absence of repetition. For example, studies find that information presented in easy-to-read fonts is more often rated as true than the same information presented in low-contrast fonts.

The illusory truth effect is such a robust phenomenon that it operates independently of cognitive ability. Differences in cognitive ability, in need for cognitive closure, and in thinking style do not influence the power of repeated statements to appear true. An analytical thinking style is no protection, and a need for cognitive closure is not a facilitator.

Knowledge offers little protection against the illusory truth effect because people often underutilize their stored knowledge, a phenomenon called knowledge neglect. Even experts in an area of knowledge are vulnerable to the illusory truth effect. As one team of researchers reports, “people sometimes fail to bring their knowledge to bear and instead rely on fluency as a proximal cue.”

Nor, when evaluating recently presented information, do they monitor the source of information in their knowledge base, which could be a clue to its validity. In fact, information may be stored without any memory of the source of information. Such lapses in evaluating information or its source may render people especially prone to rely on fluency as a cue to its truthfulness.

Because the illusory truth effect operates on true and false information alike, it is frequently exploited to spread misinformation deliberately and sway public opinion. After all, people are most likely to share with others information they believe is true. Recent history has shown that the power of mere repetition to put the imprimatur of truth on misinformation can rapidly undermine individual and population health, trust in institutions and government itself, and the civility of society.

The deliberate spread of misinformation is known as disinformation, and it has long been a tool of propagandists to bias people’s judgment. In the age of the internet, however, it has extended reach, power, and speed. Social media platforms, in particular, have built-in mechanisms for rapid repetition.

One of the most noted uses of the illusory truth effect is what has come to be known as the birther conspiracy. Ahead of the U.S. presidential election in 2008, fringe theorists and political extremists on the right circulated rumors widely on the internet that Democratic candidate Barack Obama was not qualified to be president because he did not meet the U.S. Constitution's requirement that the president be a natural-born citizen.

Even today, years after then-candidate Obama publicly released his birth certificate proving he was born in the state of Hawaii and not, as birthers alleged, in Kenya, many still believe his presidency was illegitimate.

It is easy to feel despair about the illusory truth effect: There is no way to catch up with the accelerating speed of repetition of falsehoods once they are unleashed on social media. Plus, there is a built-in paradox: Correcting a lie involves repeating the falsehood—which only solidifies it further. How, then, is it possible to counter false claims—or even discuss them at all—without reinforcing them?

Cognitive linguist George Lakoff has proposed a way to correct any lie by framing it with the truth. You begin by mentioning the truth, briefly mention the falsehood others are spreading, and then describe the correct information. Putting the truth first and last capitalizes on the cognitive capacity of people to remember beginnings and endings more than middles. “1. Start with the truth. The first frame gets the advantage,” Lakoff has advised on the social media platform X. “2. Indicate the lie. Avoid amplifying the specific language if possible. 3. Return to the truth. Always repeat truths more than lies.”

Researchers exploring the spread of climate misinformation developed a technique for neutralizing repeated lies, known as “inoculation,” that applies to many other types of misinformation. They suggested creating messages that first explain the flawed argumentation technique used in the misinformation, highlighting the way scientific information is distorted, and then feature the scientific consensus on the subject. Inoculation messages can be deployed preemptively to keep disinformation from gaining traction, a practice they call “prebunking.”

A good way to survive in a “post-truth world,” say researchers, is to become your own fact checker. It’s necessary because most people are biased toward believing the headlines they read. Focusing on the accuracy of headlines—in contrast to how interesting they are—at the point of exposure prevents misinformation from taking root in the brain.

Ask yourself, “How truthful is this statement?” At that point, you begin a memory search for relevant information, or you can search reliable outside sources of information. Actively rating the truth of claims at the point of exposure keeps people from resorting to feelings of fluency as the marker of truth.

You can test your own susceptibility to misinformation with a two-minute quiz developed by researchers at the University of Cambridge.