Scientists Possess Inflated Views of Their Own Ethics

Scientists are many things. Being unbiased isn’t one of them.

Posted May 6, 2024 Reviewed by Michelle Quirk

Key points

- Recent research calls into question how accurately researchers perceive their own ethical practices.

- This, though, should be unsurprising, given that researchers are subject to the same biases as everyone else.

- Ample evidence points to bounded ethicality and motivated reasoning as likely culprits.

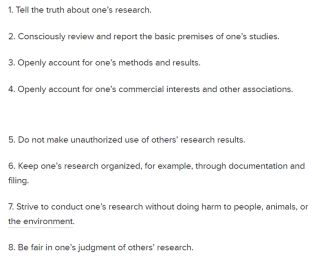

A recent Psychology Today post by Miller (2024) discussed the results of a research study[1] that included a sample of more than 10,000 researchers from Sweden. Respondents were provided with a description of ethical research practices (Figure 1) and asked to rate (1) how well they applied ethical research practices relative to others in their field and (2) how well researchers in their field applied ethical research practices relative to those in other fields.

The study itself was not overly complex (in fact, each rating was just a single item). When it came to rating their own application of research ethics, 55 percent rated themselves as equal to their peers, close to 45 percent rated themselves as better, and less than 1 percent rated themselves as worse. When it came to rating their field relative to other fields, 63 percent rated their field as similar to others, 29 percent rated it as better, and close to 8 percent rated it as worse.

It seems unlikely that slightly less than half of researchers apply ethical research practices better than their peers while less than 1 percent apply them worse.[2] So, there’s likely a disconnect between researchers’ actual research practices and how they perceive their own and their field’s research practices.[3] Miller (2024) argues these results are “especially surprising since scientists are regularly thought to be objective.”

I would respectfully disagree with Miller’s conclusion. These results are right in line with what we know about human decision-making, especially when we’re talking about decision-making that involves people’s sense of self and their own morality.

Better-Than-Average Effect

Many people are aware of the better-than-average (BTA) effect, the prototypical example being that more than 80 percent of drivers consider themselves better than average.[4] Although Saxena (2020),[5] Campbell (2020),[6] and others have explained how these results could be accurate (and probably are), scores of other studies demonstrate the BTA effect for traits, abilities, and skills that we know are more equally distributed (e.g., intelligence, mathematical ability, attractiveness). Synthesizing much of this prior research, Zell et al. (2020) conducted a meta-analysis and found evidence of a BTA effect for many traits, abilities, and skills, with the size of that effect varying based on which constructs were included, the composition of the sample, and other factors.[7] One interesting result was that a BTA effect appeared for skills they operationalized as easy, whereas a worse-than-average (WTA) effect appeared for skills they operationalized as difficult.

It seems unlikely that researchers would consider the practices used to operationalize ethical research to be difficult to apply, so it stands to reason most researchers would consider themselves better than average at applying them, regardless of whether they objectively are. But there’s even further reason to conclude that the results Miller (2024) discussed are unsurprising.

Bounded Ethicality

Herbert Simon first introduced the expression bounded rationality back in 1957 (Wheeler, 2018). The concept became a principal basis for understanding human rationality, including why humans pursue satisficing (good-enough rather than optimal) outcomes.[8] Chugh and Kern (2016) later applied the idea of boundedness to the concept of ethicality.[9] They defined bounded ethicality as “the systematic and ordinary psychological processes of enhancing and protecting our ethical self-view, which automatically, dynamically, and cyclically influence the ethicality of decision-making.”[10]

Their definition confounds the idea of boundedness (i.e., that we’re constrained by what we can consider from an ethical perspective) with motivated reasoning (i.e., our ability to reason our way into an ethically questionable decision). And both can play a role in the choice to behave in ethically questionable ways.

Actual boundedness comes into play when we fail to recognize and use ethically relevant information that should (but ultimately does not) guide our decision. The frame of reference we bring to a situation can cause us to home in on some specific details while ignoring others, even if some of those other details might be relevant to making an ethically defensible decision. In the case of researchers, the psychological context they bring to a decision situation will affect which details they focus on. It’s the details they may filter out that, if relevant, might affect the ethical defensibility of the decisions they make.

In such cases, though, we typically aren’t aware that we’ve made some sort of ethical transgression. As Bazerman and Sezer (2016) argued, in cases where bounded awareness leads to ethically questionable behavior, the questionability of said behavior may only become evident if it produces some adverse effect that can be linked to the choices that were made. For example, unknowingly designing a study that conflicts with some element of ethical research practices might only become apparent if (a) an ethics review board flags it or (b) it results in some adverse consequence that is brought to the attention of the researcher. In such situations, our ethical assessment tends to be heavily grounded in outcome bias. If we perceive our decision to have produced no adverse effect, then we’re likely to conclude we made ethically defensible choices. And when it comes to the list of practices in Figure 1, bounded awareness could easily affect the ratings researchers provided.

But motivated reasoning adds another wrinkle to ethical decision-making. There is an abundance of evidence that most people consider themselves to be good people, and they’re motivated to maintain those views. And most people probably are goodish people, in the sense that they tend to act within ethical constraints (at least insofar as they are aware of them) much more often than they deviate from them.

There are times, though, when conflict can occur between our self-interests and the ethical pursuit of those self-interests, as I wrote about when discussing hypocrisy. And sometimes we reason our way into the pursuit of those self-interests at the expense of adherence to relational values or ethical rules (i.e., engage in motivated reasoning). But to do so, we need a mechanism to justify the pursuit of those self-interests. For that, we can turn to moral disengagement theory.

Moral Disengagement Theory

Albert Bandura originally introduced moral disengagement theory to explain the social-cognitive mechanisms that allow people to set aside internalized moral values to justify ethically questionable (and even indefensible) behavior. These mechanisms essentially provide the means to give ourselves a moral hall pass. Seven mechanisms were identified, ranging from moral justification (i.e., reasoning that our action somehow fulfills some moral obligation) to displacement of responsibility (i.e., blaming someone else for causing the behavior, such as a supervisor) to dehumanization (i.e., using dehumanizing language to justify unethical behavior toward some person or group).[11]

All eight of the practices listed in Figure 1 can lend themselves to the various mechanisms that lead to moral disengagement, allowing researchers to maintain a sense of moral goodness by excusing situations where they may not have consistently applied those practices.[12] Additionally, although Bandura originally argued that moral disengagement was generally an a priori (i.e., before the act) requirement (at least for adults), these same mechanisms can be employed a posteriori (i.e., after the act) to help deflect responsibility for having missed or ignored ethically relevant information that produced an adverse effect (e.g., “It’s not my fault”).

Final Thoughts

To wrap things up, I want to return to Miller’s inference that the results are “especially surprising since scientists are regularly thought to be objective.” Although the belief that scientists are objective is certainly widely held and appears intuitively logical, as Reiss and Sprenger (2020) argued, “several conceptions of the ideal of objectivity are either questionable or unattainable.”

Now, there’s a difference, as Zaruk (2024)[13] argued, between activist scientists, who “[start] with conclusions and adapt the evidence,” and credible scientists, who “[start] with evidence and adapt the conclusions.” But that doesn’t mean credible scientists are objective. As Reiss and Sprenger (and many others) have argued, scientists bring values and biases into the scientific process. Although science provides methodologies that offer some modicum of control over a specific scientist’s ability to rig research studies to achieve desired conclusions, these controls are imperfect and only useful to the degree that scientists choose to apply them.

And when it comes to self-assessments, researchers aren’t any different from other people. They have the same self-serving biases as everyone else. So it comes as no real surprise that, when evaluating their own and their field’s work, these same researchers show the biases we would expect average people to show.

References

1. The study was conducted by Lindkvist et al. (2024), for those who might have an interest in reading the actual study.

2. Although it may be unlikely, it is not mathematically impossible.

3. It’s also possible a lot of folks are lying, but that also seems highly unlikely. And with no evidence to support that conclusion, it’s best to make the inference I am making.

4. These results were originally produced by Svenson (1981). I know they’ve been replicated at least once (I think in 1983, but I can’t say for sure).

5. Ambuj Saxena. Are you "better than average" driver? LinkedIn. August 10, 2020.

6. Adam J. Campbell, Ph.D. Most Drivers are Better than Average. July 20, 2020.

7. The Zell et al. study is available on ResearchGate, so interested readers can check out the full results.

8. Something I wrote about back in 2020.

9. These were not the first authors to do so, but they were the first to offer a model of it.

10. This is actually a revision of the definition presented by Chugh et al. (2005). I personally find the revision to be a huge departure from the idea of boundedness, as it confounds the actual ethicality of behavior with people’s motivation to maintain belief in their general ethicality. The original definition was truer to Simon’s original claims about decision-making.

11. The website I linked to discusses all seven and in more depth than I can here, so feel free to take a deeper dive there.

12. Not to mention that some of them, such as numbers 6 and 8, are quite subjective.

13. David Zaruk. Defining Activist Science. Firebreak. May 1, 2024.