Fear of AI and the Digital Future

Should we be fearful about the future development of artificial intelligence?

Posted May 22, 2023 | Reviewed by Devon Frye

Key points

- Experts debate whether the benefits of artificial intelligence (AI) will outweigh the risks.

- Cultural tropes about "killer robots" and AI taking over may distract us from its real, more mundane risks.

- Congress may create legal guardrails, though there is worry that doing so may stifle innovation.

- Listening to concerns while accepting that AI isn't going away can help us find the safest path forward.

Artificial intelligence, or “AI,” has enormous potential in almost all areas, and many herald this nascent technology as a new frontier. However, AI also provokes fearful reactions, including calls for a moratorium on research and development. Should we fear the unknowable future of AI?

Some experts are raising concerns about the risks of unrestrained AI development and claim that the technology requires new laws and agencies to oversee AI developers. Others argue that over-regulation will stifle innovation in this evolving technology.

Recently, Sam Altman, the CEO of OpenAI (the company that developed ChatGPT), appeared alongside other artificial intelligence experts before Congress to consider new policies for regulating the AI industry. [1] Congress members were interested in how AI will make use of our data, how it may be programmed to influence or persuade us, and who owns the material created by AI.

Beyond these concerns, legislators are examining possible legal guardrails for AI technology. Calls for immediate regulation are driven by the fear that AI will eventually cause harm—even though the specific harms have not always been clearly articulated.

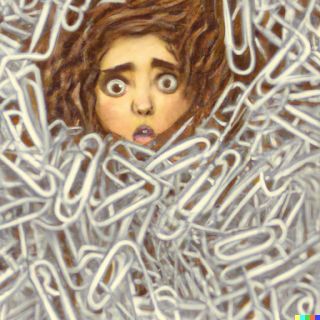

Buried in Paper Clips?

Philosopher Nick Bostrom of Oxford University wrote in 2014 about a thought experiment in which a super-intelligent AI is asked to start producing paper clips. Because the AI improves with experience (machine learning), it invents ever-better methods to make paper clips and achieve its goal. The AI has unlimited motivation and tenacity; it never quits.

Soon, the “paperclip maximizer” AI appropriates resources from other systems and eventually devotes everything to making paper clips. Like the water-fetching brooms in Disney’s “Fantasia” (in the segment “The Sorcerer’s Apprentice”), the AI will not stop. We can’t turn it off because it will not let us shut it down, and so the world is inundated with paper clips! [2]

The paper clip story may exaggerate AI fears, succumbing to the “killer robot” notion that machines will take over. More reasonable fears are that AIs will replace us at our jobs or imitate us, creating “deep fakes” to steal our identities. Underneath lies a fear that AIs will become too powerful. From “2001: A Space Odyssey” to “The Terminator,” there is much literary and cultural folklore about AI and its evil iterations overtaking humans.

Several authors have reviewed these potential problems with AI. Some suggest that future AI capabilities have been overstated and the fear is irrational. They first point out the many ways that AI is not even close to showing human-like levels of intellect, creativity, or sentience.

They further stress that technology and automation have always affected jobs, yet workers have adapted, developed new skills, and created new careers. The next job trends might be for AI trainers, troubleshooters, and repair technicians. These experts say that fears about AI taking over are mostly unjustified. [3, 4, 5]

Yet for all those who say the threats from AI are minimal, there are just as many who fear developing AI any further. Most agree that the sci-fi notion of AI computers taking us over is not the real problem, but there are more mundane, yet still dangerous, possibilities. [6]

In March 2023, over 1,000 technology leaders working with AI signed a letter warning that AI technologies present “profound risks to society and humanity.” [7] The signatories urge that research be paused and new regulations considered before any further development of AI bots like ChatGPT.

They concluded that given the risks, “AI research and development should be refocused on making today's systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal”—certainly an aspirational goal, even if challenging to put to the test.

An over-focus on “killer robots” distracts us from the everyday reality that AI surrounds us: from our phones to GPS, from banking to social media, AI is everywhere and expanding. It is impossible to predict all the specific AI risk scenarios because we don’t know exactly how AI may evolve.

The positive potential of AI, from new drug discoveries to freeing up time spent on everyday tasks, is enormous. Yet many people firmly believe that AI might eventually circumvent human control. That is scary, even as a possibility. Though the AI genie is out of the bottle, I believe we should listen to those who are fearful and be proactive in finding ways to safely develop and implement this technology, without stifling innovation.

References

2) Megan Courtman (2021). The Paperclip Apocalypse. https://crypticmeg.com/2021/05/07/the-paperclip-apocalypse/

3) Milton Ezrati (2023). No Reason to Fear AI. Forbes, April 7, 2023. https://www.forbes.com/sites/miltonezrati/2023/04/07/no-reason-to-fear-ai/?sh=14c8a4e37ba6

4) Eva Hamrud (2021). AI Is Not Actually an Existential Threat to Humanity, Scientists Say. ScienceAlert, April 11, 2021. https://www.sciencealert.com/here-s-why-ai-is-not-an-existential-threat-to-humanity

5) Rene Buest (2018). Artificial intelligence is not a threat to human society. CIO. https://www.cio.com/article/228227/artificial-intelligence-is-not-a-threat-to-human-society.html

6) What Exactly Are the Dangers Posed by A.I.? The New York Times, May 1, 2023. https://www.nytimes.com/2023/05/01/technology/ai-problems-danger-chatgpt.html

7) Pause Giant AI Experiments: An Open Letter. Future of Life Institute. https://futureoflife.org/wp-content/uploads/2023/05/FLI_Pause-Giant-AI-Experiments_An-Open-Letter.pdf